Zapata Generates High-Resolution Images for the First Time Using a Quantum Computer

AI-generated text and images have been all over the internet this summer. For one, you have GPT-3, created by OpenAI, which generates text (or academic papers) based on prompts from the user. Then there’s DALL·E, a derivative of GPT-3 that generates images based on text prompts, and the more popular but slimmed-down DALL·E Mini. The pictures can be very weird, but they are generally true to the prompt.

DALL·E and GPT-3 are generative models. Generative modeling is a subfield of machine learning where the goal is to learn from existing data and generate similar (but novel) data. Another well-known example, the website This Person Does Not Exist, uses the StyleGAN algorithm from our partners at NVIDIA to generate realistic images of faces.

But generative models aren’t just good for creating AI art and synthetic faces. They have many enterprise use cases as well. Take Google’s chatbot LaMDA, for example, which was apparently so convincing in conversations that a software engineer was recently fired for claiming the bot was sentient. Generative models can also be used to create new molecules for drugs, industrial chemicals and materials, and to generate synthetic data to augment datasets where data is sparse or expensive to collect.

As an Enterprise Quantum company, we’re interested in exploring how quantum computing can enhance generative models like these. While classical generative models already deliver impressive performance, they do so at an immense computational cost. We believe that quantum computers have the potential to deliver comparable if not better performance using significantly fewer computational resources than classical computers require on their own.

Last year, we made a promising step forward, and now, we’re proud to share that our research has been published in Physical Review X.

Generating High-Resolution Handwritten Digits Using a Quantum Computer

In our research, we leveraged a family of classical algorithms called Generative Adversarial Networks (GANs) — the same type used by This Person Does Not Exist to generate realistic pictures of faces. However, our research was the first demonstration of a GAN that can generate high-resolution images using a component running on quantum hardware.

In our case, we trained our hybrid quantum-classical GAN on the MNIST database, which contains images of handwritten digits, to generate new images of handwritten digits. The MNIST dataset is the canonical dataset for evaluating generative models: if a model doesn’t perform well on this dataset, it won’t work at scale. Our work marks the first time that a machine learning algorithm with a quantum component was applied to, and succeeded at, the MNIST benchmark.

Using only 8 qubits on IonQ’s ion-trap quantum computer, we were able to outperform the purely classical version of the GAN used in our work. The images we generated had a higher inception score than those created by the classical-only model — that is to say, the quantum-enhanced model created images that were both sharper (higher quality) and more diverse. Having both is important: a GAN may create clear, crisp images, but if they all look the same, it won’t be useful for practical applications.
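To make the metric concrete, here is a minimal sketch of how an inception score can be computed from a classifier's predicted class probabilities. The function name and the toy probability tables are illustrative assumptions, not data from our experiments; a real evaluation would use the predictions of a pretrained image classifier on the generated images.

```python
import math

def inception_score(probs):
    """Inception score from per-image class probabilities p(y|x).

    probs: list of rows, each row a probability distribution over classes.
    Returns exp(mean over images of KL(p(y|x) || p(y))).
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    total_kl = 0.0
    for row in probs:
        for p, m in zip(row, marginal):
            if p > 0.0:  # the term 0 * log(0) is treated as 0
                total_kl += p * math.log(p / m)
    return math.exp(total_kl / n)

# Hypothetical classifier outputs over three classes.
sharp_and_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
sharp_but_identical = [[1.0, 0.0, 0.0]] * 3

score_diverse = inception_score(sharp_and_diverse)
score_identical = inception_score(sharp_but_identical)
```

In this toy setup, the confident and varied predictions score higher than the confident but identical ones, which is the behavior the paragraph above describes: the score rewards sharpness and diversity together.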

The images of handwritten digits generated using an IonQ quantum computer.

How Do GANs Work?

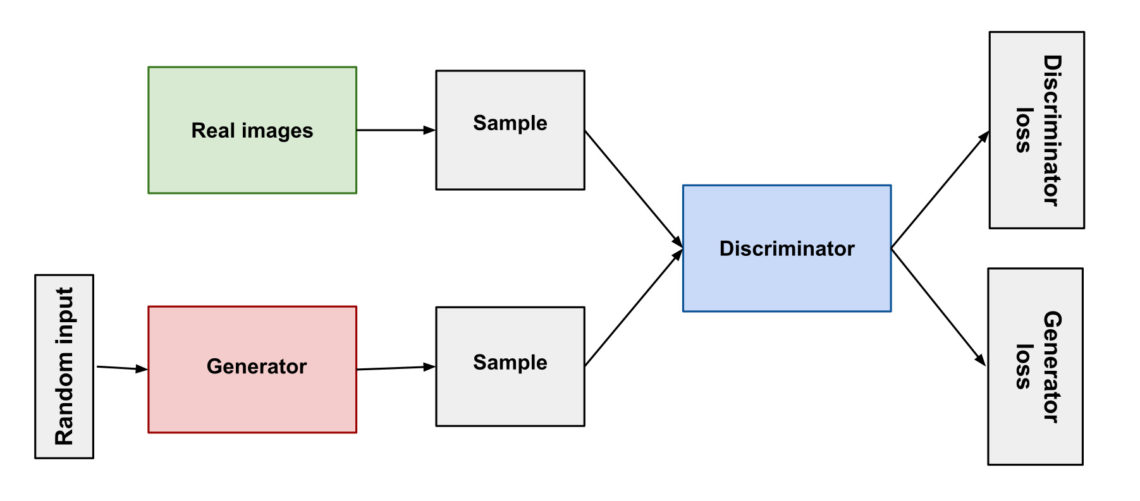

GANs typically consist of two neural networks, a generator and a discriminator, that are trained against each other using real data, in this case images. The generator’s role is to generate fake data that looks like the real data, while the discriminator’s job is to decide which data is real and which is created by the generator. Using only feedback from the discriminator, the generator gradually learns to create data that the discriminator can’t distinguish from real data.
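The tug-of-war between the two networks can be sketched with the textbook binary cross-entropy GAN losses. This is an illustration under assumptions: the function names are hypothetical, the inputs stand in for a discriminator's "probability this is real" outputs, and the loss used in our research may differ from this standard formulation.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples near 0."""
    loss_real = -sum(math.log(p) for p in d_real) / len(d_real)
    loss_fake = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return loss_real + loss_fake

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probabilities of "real").
caught = generator_loss([0.1, 0.2])   # discriminator spots the fakes
fooled = generator_loss([0.5, 0.5])   # discriminator can only guess
```

The generator only ever sees the discriminator's scores on its own samples, which matches the point above that it learns from the discriminator's feedback alone.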

The basic structure of a GAN. Source: Google.

Traditionally, when you train a GAN, you feed the neural network inside the generator with completely random numbers. This is done because the generator requires us to kick-start its image generation process with a source of randomness, and (pseudo) random numbers are easy to come by using classical computers.

Classical computers struggle, however, to generate random numbers that follow more specific probability distributions. A random input drawn from the standard Gaussian distribution (a bell curve) or the uniform distribution won’t always be the best choice for every problem, dataset, or stage of training. The practical effects of modifying this random component in GANs to follow more specific probability distributions have not been studied extensively in the classical machine learning literature.
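For reference, the conventional random input looks something like the sketch below. The helper name `sample_latent`, the vector length of 8, and the uniform range are assumptions chosen for illustration, not settings from our experiments.

```python
import random

def sample_latent(dim, kind, rng):
    """Draw a noise vector of length `dim` to feed into the generator."""
    if kind == "gaussian":
        # Standard Gaussian: mean 0, standard deviation 1.
        return [rng.gauss(0.0, 1.0) for _ in range(dim)]
    if kind == "uniform":
        # Uniform over an assumed range of [-1, 1].
        return [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    raise ValueError(f"unknown prior: {kind!r}")

rng = random.Random(42)  # seeded for reproducibility
z_gauss = sample_latent(8, "gaussian", rng)
z_unif = sample_latent(8, "uniform", rng)
```

Both priors are fixed in advance and carry no information about the dataset, which is the limitation the paragraph above points to.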

This is where quantum computers come in.

Enter Quantum Computing

In our research, we used a quantum computer to modify the generator input to follow a structured probability distribution. Our approach exploited the fact that a quantum state can be described as a series of probabilities. For example, if you measure the state of a single qubit, it could be 30% likely to collapse to one state and 70% likely to collapse to the other. With a quantum computer, we generally have control over these percentages, and using our quantum algorithm, we dynamically learned a complicated distribution of quantum measurements. These measurements then provided the generator with the kick-start it requires.
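The idea of measurements as a source of structured randomness can be mimicked classically for small cases. The sketch below is only a simulation under assumptions: the function names are hypothetical, the two-qubit probability table is made up, and in our research the distribution was learned and sampled on quantum hardware, not looked up from a table like this.

```python
import random

def measure_qubit(p_one, rng):
    """Simulate one measurement: returns 1 with probability p_one, else 0."""
    return 1 if rng.random() < p_one else 0

def sample_bitstrings(probabilities, shots, rng):
    """Draw bitstrings from a distribution over all 2**n measurement outcomes."""
    n_outcomes = len(probabilities)
    if n_outcomes < 2 or n_outcomes & (n_outcomes - 1):
        raise ValueError("need one probability per outcome, 2**n in total")
    n_qubits = n_outcomes.bit_length() - 1
    outcomes = rng.choices(range(n_outcomes), weights=probabilities, k=shots)
    # Convert each outcome index to a list of bits, most significant first.
    return [[(o >> i) & 1 for i in reversed(range(n_qubits))] for o in outcomes]

rng = random.Random(0)

# Single qubit: 30% chance of one state (0), 70% chance of the other (1).
shots = [measure_qubit(0.7, rng) for _ in range(10_000)]
frac_ones = sum(shots) / len(shots)

# Hypothetical two-qubit distribution where only 00 and 11 ever occur.
latents = sample_bitstrings([0.5, 0.0, 0.0, 0.5], shots=5, rng=rng)
```

Each sampled bitstring would play the role of the random input handed to the generator. Note that the explicit table needs one entry per outcome, so it doubles in size with every added qubit: 8 qubits already means 256 entries.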

We found that using the quantum computer to encode the input distribution to the neural network helps to stabilize the GAN, which is otherwise notoriously unstable in training. That instability stems from the delicate balance required between the two adversaries: if either one outright outperforms the other, the GAN ends up generating either poor-quality images or images that all look the same.

What Does This Mean for Enterprise Quantum?

Sure, most enterprises aren’t looking for a better way to generate images of handwritten digits. But that’s not the point. The MNIST benchmark is just the beginning. The point is that our work is scalable to more powerful GANs and larger, more complex datasets.

The GAN we used was off-the-shelf — you could get much better results using a more powerful and resource-intensive classical GAN. The quantum-enhanced part of our algorithm is also scalable. All the training of the quantum component of our GAN was performed on quantum hardware, which can be scaled to deliver even better results given a more powerful quantum computer.

Today’s NISQ (Noisy Intermediate-Scale Quantum) devices have limited qubit counts and are highly prone to error due to gate noise. As quantum computing hardware becomes more robust, its ability to enhance generative algorithms will only grow stronger. Our research suggests quantum models could become a critical part of many large-scale generative algorithms in the future and unlock new applications.

As I briefly touched on earlier, quantum-enhanced generative modeling could help generate novel molecular structures. A database of molecules with a desired property — binding affinity, polarity, etc. — can be used to train a GAN that then generates novel molecules with the desired properties.

To give another example, quantum-enhanced GANs could also be used to generate synthetic time series data, which can then be used to help detect anomalies. Imagine you’re creating an AI tool that can detect credit card fraud, defective products on an assembly line, or suspicious activity within a Kubernetes cluster log. There might not be enough anomalous data to train the AI tool. A quantum-enhanced GAN could potentially help generate synthetic anomalous data to augment the training dataset and improve the classification/detection model.

This research is only the beginning. As for next steps, our team is working on scaling the algorithm to be used with higher-resolution data, more powerful GANs, and more powerful quantum computers. We’re also benchmarking the algorithm and studying how the improvements we saw play out with real-world use cases and different kinds of quantum and quantum-inspired models.

We don’t yet know what configurations of quantum computers will perform better, or by how much. We do know that generative modeling will be an exciting near-term use case for quantum computing, one that can be applied to help solve real-world problems.