Cutting through the noise: AI enables high-fidelity quantum computing

Researchers led by the Institute of Scientific and Industrial Research (SANKEN) at Osaka University have trained a deep neural network to correctly determine the output state of quantum bits, despite environmental noise. The team's novel approach may allow quantum computers to become much more widely used.

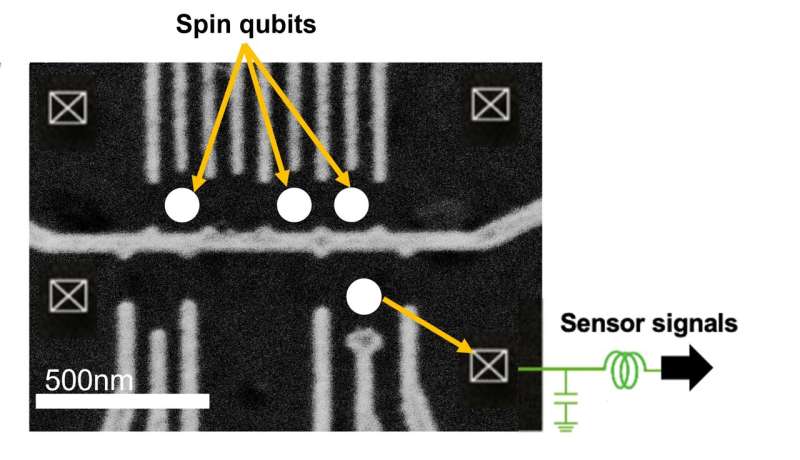

Modern computers are based on binary logic, in which each bit is constrained to be either a 1 or a 0. But thanks to the weird rules of quantum mechanics, new experimental systems can achieve increased computing power by allowing quantum bits, also called qubits, to be in "superpositions" of 1 and 0. For example, the spins of electrons confined to tiny islands called quantum dots can be oriented both up and down simultaneously. However, when the final state of a qubit is read out, it reverts to classical behavior and takes one orientation or the other. To make quantum computing reliable enough for consumer use, new readout systems will be needed that can accurately record the output of each qubit even when the signal is very noisy.
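
The readout rule in the paragraph above can be illustrated with a minimal Born-rule sampler in Python. This sketch assumes an ideal, noiseless measurement, and the `measure` helper is hypothetical: it models the textbook rule, not the experiment's hardware. A state α|0⟩ + β|1⟩ yields 0 with probability |α|² and 1 with probability |β|².

```python
import math
import random

def measure(alpha, beta, rng):
    """Single-shot projective measurement of the state alpha|0> + beta|1>.

    Returns 0 with probability |alpha|^2 and 1 with probability |beta|^2;
    the superposition itself is never observed directly.
    """
    p0 = abs(alpha) ** 2
    if not math.isclose(p0 + abs(beta) ** 2, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return 0 if rng.random() < p0 else 1

# Equal superposition: each shot gives only 0 or 1, and only the
# statistics over many shots reveal the 50/50 split.
rng = random.Random(42)
amp = 1 / math.sqrt(2)
shots = [measure(amp, amp, rng) for _ in range(10_000)]
print("fraction of 1s:", sum(shots) / len(shots))
```

In a real device, the hard part is the step this sketch takes for granted: deciding from a noisy electrical signal which of the two outcomes actually occurred.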

Now, a team of scientists led by SANKEN used a machine learning method called a deep neural network to discern the signal created by the spin orientation of electrons on quantum dots. "We developed a classifier based on deep neural network to precisely measure a qubit state even with noisy signals," co-author Takafumi Fujita explains.

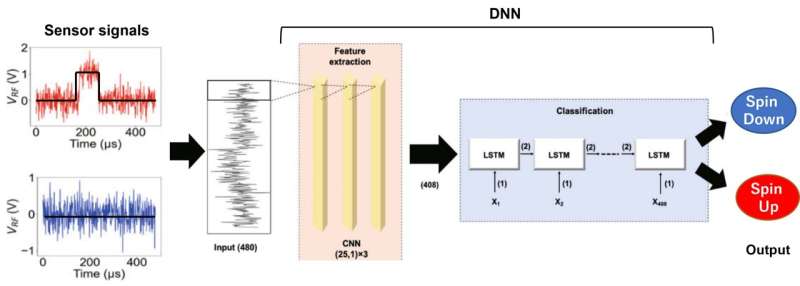

In the experimental system, only electrons with a particular spin orientation can leave a quantum dot. When this happens, a temporary "blip" of increased voltage is created. The team trained the machine learning algorithm to pick out these blips from the noise. Their deep neural network combined a convolutional neural network, which identifies the important signal features, with a recurrent neural network that monitors the time-series data.
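
The two stages described above can be sketched as a toy forward pass in Python. A convolution followed by a ReLU extracts a "blip present" feature from a noisy trace, and a single-unit recurrent layer carries that evidence along the time series. Everything here is an illustrative assumption, not the authors' model: the synthetic trace, the choice of spin up as the orientation that tunnels out, the helper names (`simulate_trace`, `conv1d_relu`, `rnn_latch`, `classify`), and the weights, which are set by hand rather than trained.

```python
import math
import random

def simulate_trace(has_blip, n=200, blip_start=60, blip_len=20,
                   amplitude=1.0, noise=0.1, seed=0):
    """Synthetic single-shot readout trace (hypothetical units).

    Assumes the spin state that can leave the dot produces a temporary
    step ("blip") of height `amplitude`; the other state gives no blip.
    """
    rng = random.Random(seed)
    trace = []
    for t in range(n):
        level = amplitude if has_blip and blip_start <= t < blip_start + blip_len else 0.0
        trace.append(level + rng.gauss(0.0, noise))
    return trace

def conv1d_relu(x, kernel, bias):
    """'Valid' 1D convolution followed by ReLU: the feature-extraction stage."""
    k = len(kernel)
    return [max(0.0, sum(w * x[t + j] for j, w in enumerate(kernel)) + bias)
            for t in range(len(x) - k + 1)]

def rnn_latch(features, w_in=10.0, w_rec=3.0):
    """Elman-style recurrence h_t = tanh(w_in * f_t + w_rec * h_{t-1}).

    With w_rec = 3 the state is bistable: it stays at 0 until a feature
    fires, then latches near 1 for the rest of the trace.
    """
    h = 0.0
    for f in features:
        h = math.tanh(w_in * f + w_rec * h)
    return h

def classify(trace):
    kernel = [0.2] * 5                              # 5-sample moving average smooths the noise
    feats = conv1d_relu(trace, kernel, bias=-0.5)   # positive only where smoothed signal > 0.5
    return 1 if rnn_latch(feats) > 0.5 else 0       # 1 = blip seen (assumed spin up)

print(classify(simulate_trace(True)), classify(simulate_trace(False)))
```

The recurrent weight of 3 is what makes the hidden state latch, so a single burst of evidence anywhere in the trace is remembered until the end. A trained network would instead learn many kernels and recurrent weights from labelled traces.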

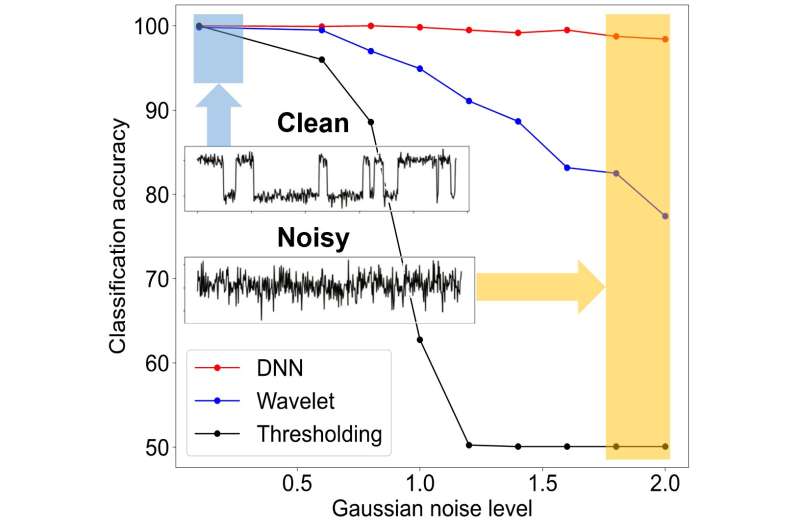

"Our approach simplified the learning process for adapting to strong interference that could vary based on the situation," senior author Akira Oiwa says. The team first tested the robustness of the classifier by adding simulated noise and drift. Then, they trained the algorithm on actual data from an array of quantum dots and achieved accuracy above 95%. The results may allow high-fidelity measurement of large-scale qubit arrays in future quantum computers.
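
A robustness test of the kind described above, adding simulated noise and drift and then measuring accuracy, can be mimicked with a small harness. In this sketch the helpers `make_trace`, `perturb`, `threshold_classifier` and `accuracy` are hypothetical, the traces are synthetic, and a fixed-threshold detector stands in for the classifier. It is not the authors' network; it only shows how such a stress test is set up and why drift troubles a naive readout.

```python
import random

def make_trace(has_blip, n=200, blip_start=60, blip_len=20, amplitude=1.0):
    """Clean synthetic trace: a rectangular blip for one spin state, flat for the other."""
    return [amplitude if has_blip and blip_start <= t < blip_start + blip_len else 0.0
            for t in range(n)]

def perturb(trace, noise, drift, rng):
    """Add white Gaussian noise plus a linear baseline drift that ends at `drift`."""
    n = len(trace)
    return [v + rng.gauss(0.0, noise) + drift * t / (n - 1) for t, v in enumerate(trace)]

def threshold_classifier(trace, threshold=0.5, window=5):
    """Baseline: label 1 if any moving-average sample exceeds a fixed threshold."""
    smoothed = (sum(trace[t:t + window]) / window for t in range(len(trace) - window + 1))
    return 1 if max(smoothed) > threshold else 0

def accuracy(classifier, noise, drift, trials=200, seed=1):
    """Fraction of perturbed traces whose label the classifier recovers."""
    rng = random.Random(seed)
    correct = 0
    for i in range(trials):
        label = i % 2  # alternate no-blip (0) and blip (1) shots
        trace = perturb(make_trace(bool(label)), noise, drift, rng)
        correct += int(classifier(trace) == label)
    return correct / trials

for drift in (0.0, 0.3, 0.8):
    acc = accuracy(threshold_classifier, noise=0.1, drift=drift)
    print(f"drift={drift}: accuracy={acc:.2f}")
```

With no drift, the fixed threshold separates the two classes cleanly. With a drift of 0.8, the baseline of every no-blip trace rises past the threshold, all of them are misread, and accuracy falls to 50%. Passing a trained model in place of `threshold_classifier` would run the same stress test on a learned classifier.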

More information: Yuta Matsumoto et al, Noise-robust classification of single-shot electron spin readouts using a deep neural network, npj Quantum Information (2021). DOI: 10.1038/s41534-021-00470-7

Provided by Osaka University