Researchers develop a computer that's fooled by optical illusions

Seeing like we do has big implications for AI.

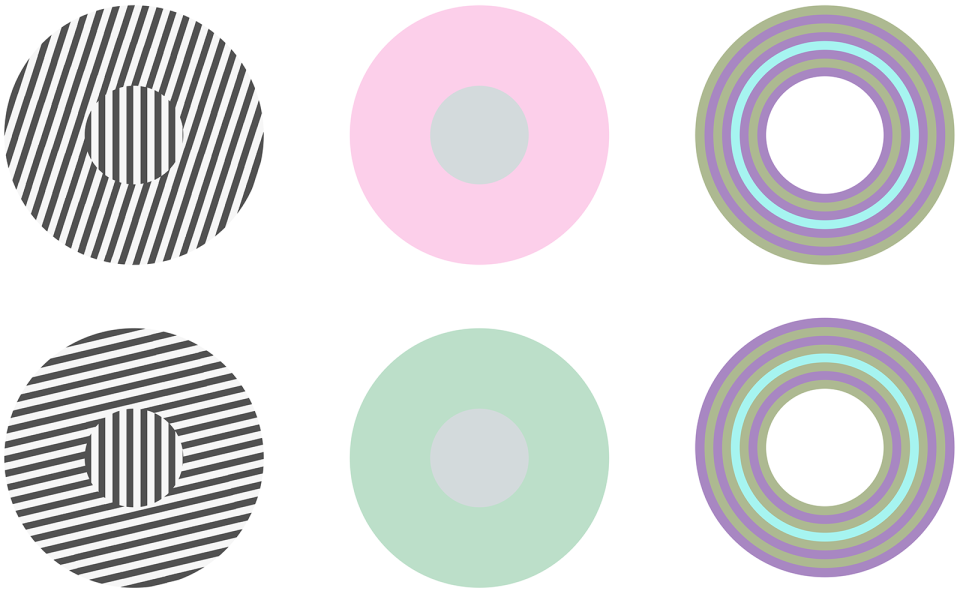

Say you're staring at the image of a small circle in the center of a larger circle: The larger one looks green, but the smaller one appears gray. Yet your friend looks at the same image and sees the smaller circle as green, too. So is it green or gray? Trying to work out what is real and what is not can be both maddening and fun. In this instance, your brain is processing a type of optical illusion, a phenomenon in which your visual perception is shaped by the surrounding context of what you are looking at.

Thomas Serre, an associate professor in the cognitive, linguistic and psychological sciences department at Brown University, said he thinks these kinds of illusions, where reality and what you see don't line up, are a "feature and not a bug" of your brain. Now Serre and his team have programmed a computer system to "see" these same kinds of optical illusions, publishing their research last month in the journal Psychological Review.

The research stands out because it could lead to more sophisticated computer-vision systems that process images more like your brain does.

"We were trying to build a computational model that was constrained by the anatomical data of the human visual cortex," Serre told Engadget. "It was surprising, at least for me, to see how far we were able to go with a single model to find out in fact how many illusions our system could register."

When you look at an image, information about what you see travels from your retina through circuits of neurons to the visual cortex, the part of your brain that processes signals from your eyes. The cortical neurons pass information back and forth among themselves, adjusting each other's responses when they encounter a stimulus like an illusion.

When the team presented this artificial brain with context-dependent optical illusions (think of the double-circle example), they found that its computerized neurons responded the same way human neurons do.

In the past, deep learning work in artificial vision hasn't come close to replicating this neural-feedback loop that happens when your brain comes across something like an illusion. Usually, these algorithms just push information forward in a straight line without adjusting to stimuli that deviate from the norm. This is what makes Serre's work stand out.

Artificial vision has been used in everything from facial recognition to cancer imaging to driverless cars. The more closely computers approximate the way the brain processes images, the better they could be at complex tasks like spotting a tumor or driving an autonomous car safely. For instance, Serre said improved artificial vision could make it harder to "fool" a self-driving car, avoiding disasters like mistaking a stop sign with a small mark on it for a sign that reads "65 mph."

How far does artificial vision lag behind human sight? Ruth Rosenholtz, a principal research scientist in the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology, said that thanks to steady advances in deep learning, artificial vision has become quite good at the tasks it is trained for. But Rosenholtz, who is not affiliated with Serre's research, added that it still trails human vision in a few key ways.

"The errors that artificial vision systems make are completely different from the errors that human vision makes," Rosenholtz wrote in an email to Engadget. "This is important both because it means that the artificial vision systems are fragile, and because the errors that human vision systems make are not random."

She agrees with Serre that illusions should be viewed as a common feature of human vision. Rosenholtz asserted that making the "same mistakes as humans" is probably necessary for a computer to have a high-functioning visual system.

For Rosenholtz, this kind of research matters because its implications reach beyond optical illusions. As AI systems increasingly approximate human functions and behavior, understanding and then correcting their humanlike flaws, such as being fooled by an optical illusion, can lead to better machines.

That said, the work is still in its early stages, Serre admitted. He and his lab are continuing to refine it and will publish another paper in December. They have been adapting this neuroscience model into a machine-learning model, showing how their computer can perform vision-based tasks like detecting contours or tracing the boundary around an object. Think of how easily you pick out the edges and lines that make up the square shape of a picture frame when you look at it.

Rosenholtz added that understanding how computers "see" can also teach us about human vision and the human brain.

"There's a symbiotic relationship between computational vision models and human vision research," she wrote. Research into human vision offers insight into what underlies a high-functioning visual system. Optical illusions are especially useful because "revealing the 'errors' made by the visual system" can sometimes tell us more about how vision works than studying its successes alone.

Serre agreed.

"We are at a turning point in neuroscience and computer vision," he said. "There's lots of cross-pollination between the two fields. Computer science is always inspiring neuroscience, and neuroscience is inspiring computer science. Computers really are the best metaphor for what brains do."