By Andy May

In my last post, on Scafetta’s new millennial temperature reconstruction, I included the following sentence that caused a lot of controversy and discussion in the comments:

“The model shown uses a computed anthropogenic input based on the CMIP5 models, but while they use an assumed climate sensitivity to CO2 (ECS) of ~3°C, Scafetta uses 1.5°C/2xCO2 to accommodate his estimate of natural forcings.”

I thought that, in the context of the post, the meaning was clear. But Nick Stokes and others thought I meant that ECS was an input parameter to the CMIP5 climate models. This is not true; ECS is computed from the model output. If you pull the above quote out of the post and view it in isolation, it can be interpreted that way, so I changed it to the following, which is unambiguous on the point.

“The model shown uses a computed anthropogenic input based on the CMIP5 models, but while they use an assumed climate sensitivity to CO2 (ECS computed from the CMIP5 ensemble model mean) of ~3°C, Scafetta uses 1.5°C/2xCO2 to accommodate his estimate of natural forcings.”

Then we received criticism about the computation of the ensemble model mean ECS; some said the IPCC did not compute an ensemble mean of ECS. This is nonsense; they compute it in AR5 (IPCC, 2013, p. 818). A portion of the table is shown below as Figure 1.

Figure 1. A portion of IPCC AR5 WG1 Table 9.5, page 818. The average ECS of the CMIP5 models is shown at the bottom as 3.2 degrees.

As you can see in Figure 2, most of the models greatly overestimate warming in the mid to upper tropical troposphere. A pressure of 300 hPa occurs at about 30,000 feet or 10 km altitude and 200 hPa is at about 38,000 feet or 12 km altitude. The top of the troposphere is the tropopause, and in the tropics, it is usually between 150 hPa or 14 km and 70 hPa or 18 km.

Figure 2. CMIP5 models versus weather balloon observations (green) in the mid- to upper troposphere. The details of why the models fail statistically can be seen in a 2018 paper by McKitrick and Christy here. All model runs shown use historical forcing to 2006 and RCP 4.5 thereafter.
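The pressure-to-height equivalents quoted above can be checked against the International Standard Atmosphere (ISA). The sketch below is an approximation that rests on assumptions. ISA is a mid-latitude average, and the warmer tropical atmosphere places each pressure level a few hundred meters higher.

```python
import math

# Assumed constants: International Standard Atmosphere (ISA), a mid-latitude
# average. The warmer tropical atmosphere puts each level a bit higher.
P0_HPA = 1013.25          # sea-level pressure, hPa
T0_K = 288.15             # sea-level temperature, K
LAPSE_K_PER_M = 0.0065    # tropospheric lapse rate, K/m
G = 9.80665               # gravitational acceleration, m/s^2
M_AIR = 0.0289644         # molar mass of dry air, kg/mol
R_GAS = 8.31446           # universal gas constant, J/(mol K)
H_TROP_M = 11000.0        # ISA tropopause height, m
T_TROP_K = T0_K - LAPSE_K_PER_M * H_TROP_M  # 216.65 K, constant from 11 to 20 km
# Pressure at the ISA tropopause (about 226 hPa).
P_TROP_HPA = P0_HPA * (T_TROP_K / T0_K) ** (G * M_AIR / (R_GAS * LAPSE_K_PER_M))


def isa_altitude_m(p_hpa: float) -> float:
    """Approximate geopotential altitude (m) of a pressure level, valid up to ~20 km."""
    if p_hpa >= P_TROP_HPA:
        # Troposphere: temperature falls linearly with height.
        exponent = R_GAS * LAPSE_K_PER_M / (G * M_AIR)
        return (T0_K / LAPSE_K_PER_M) * (1.0 - (p_hpa / P0_HPA) ** exponent)
    # Lower stratosphere: isothermal layer, so pressure decays exponentially.
    scale_height_m = R_GAS * T_TROP_K / (G * M_AIR)
    return H_TROP_M + scale_height_m * math.log(P_TROP_HPA / p_hpa)


for p in (300, 200, 150, 70):
    h = isa_altitude_m(p)
    print(f"{p:>3} hPa ~ {h / 1000:.1f} km ~ {h * 3.28084:,.0f} ft")
```

Under ISA this gives roughly 9.2 km for 300 hPa, 11.8 km for 200 hPa, 13.6 km for 150 hPa, and 18.4 km for 70 hPa. These values agree with the rounded figures in the text.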

The purple line in Figure 2 that tracks the weather balloon observations (heavy green line) is the Russian INM-CM4 model. As we can see, INM-CM4 is the only model that matches the weather balloon observations reasonably well, yet it is an outlier among the CMIP5 models. Because it is an outlier, it is often ignored. Figure 1 shows that if ECS is computed from the INM-CM4 output, we get 2.1°C/2xCO2 (degrees of warming due to doubling the CO2 concentration). Yet, while an ECS of 2.1 clearly matches observations since 1979, the model average is 3.2. It is significant, literally, that INM-CM4 is one of the few models that passes the statistical test used in McKitrick and Christy, 2018 (see their Table 2). This is why I used the word “assumed.” The evidence clearly says 2.1, so 3.2 must be assumed. ECS is not an input to the models, but tuning the models changes ECS and the modelers closely watch the value when tuning their models (Wyser et al., 2020).
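The unit “°C/2xCO2” means ECS is the equilibrium warming per doubling of CO2. By the usual convention, warming scales with the logarithm of concentration. Under that assumption, the equilibrium warming for any CO2 ratio is ECS × log2(C/C0). The sketch below compares the two ECS values discussed here. The 280 and 420 ppm concentrations are illustrative inputs, not values from the post.

```python
import math


def equilibrium_warming_c(ecs_c_per_doubling: float, co2_ppm: float, co2_ref_ppm: float) -> float:
    """Equilibrium warming (deg C) implied by an ECS value.

    Assumes warming scales with the number of CO2 doublings, log2(C / C0),
    i.e. a logarithmic forcing and a constant sensitivity.
    """
    if co2_ppm <= 0 or co2_ref_ppm <= 0:
        raise ValueError("CO2 concentrations must be positive")
    return ecs_c_per_doubling * math.log2(co2_ppm / co2_ref_ppm)


# Illustrative concentrations only: a 50% rise from 280 ppm to 420 ppm.
for label, ecs in (("INM-CM4", 2.1), ("CMIP5 mean", 3.2)):
    print(label, round(equilibrium_warming_c(ecs, 420.0, 280.0), 2))
```

For a 50% rise in CO2, the two values imply roughly 1.2°C and 1.9°C of equilibrium warming. These are equilibrium numbers. The warming realized by any given date is smaller, so they cannot be compared directly with the trends in Figure 2.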

McKitrick and Christy chose the tropical middle to upper troposphere for their comparison very carefully and deliberately (McKitrick & Christy, 2018). This part of the atmosphere is sometimes called the tropospheric “hot spot” (See Figure 3). Basic physics and the IPCC climate models suggest that, if greenhouse gases (GHGs) are causing the atmosphere to warm, this part of the atmosphere should warm faster than the surface. Dr. William Happer has estimated that the rate of lower to middle tropospheric warming should be about 1.2 times the warming at the surface.

The reason is simple. If GHGs are causing the surface to warm, evaporation will increase on the ocean surface. Evaporation and convection are the main mechanisms for cooling the surface because the lower atmosphere is nearly opaque to most infrared radiation. The evaporated water vapor carries a lot of latent heat with it as it rises in the atmosphere. The water vapor must rise because it has a lower density than dry air.
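The buoyancy argument can be checked with the ideal-gas law. Water vapor (about 18 g/mol) is lighter than dry air (about 29 g/mol), so air holding more vapor is less dense at the same pressure and temperature. A minimal sketch follows. The 30°C temperature and 35 hPa vapor pressure are assumed illustrative values. The sketch also ignores the temperature differences that drive real convection.

```python
R_DRY = 287.05   # specific gas constant of dry air, J/(kg K)
R_VAPOR = 461.5  # specific gas constant of water vapor, J/(kg K)


def air_density_kg_m3(p_pa: float, t_k: float, vapor_pa: float = 0.0) -> float:
    """Ideal-gas density of air at total pressure p_pa containing vapor_pa of water vapor."""
    dry_pa = p_pa - vapor_pa  # Dalton's law: partial pressures add up to the total
    return dry_pa / (R_DRY * t_k) + vapor_pa / (R_VAPOR * t_k)


# Illustrative values: sea-level pressure, 30 C, and 35 hPa of vapor
# (humid tropical air; a hypothetical choice, not data from the post).
dry = air_density_kg_m3(101325.0, 303.15)
moist = air_density_kg_m3(101325.0, 303.15, vapor_pa=3500.0)
print(f"dry {dry:.4f} kg/m^3, moist {moist:.4f} kg/m^3")
```

With these inputs, the moist air comes out roughly 1.3% lighter than dry air at the same pressure and temperature.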

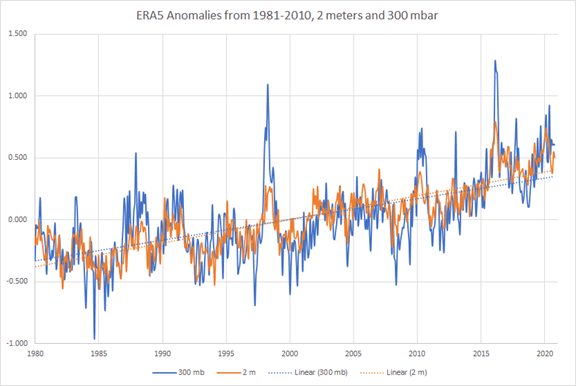

As the vapor rises through the lower atmosphere, the air cools, and eventually it reaches a height where the vapor condenses to liquid water or ice (the local cloud height). Condensation releases a tremendous amount of latent heat; some of this energy warms the surrounding air and some is eventually radiated to outer space. It is this release of “heat” that is supposed to warm the middle troposphere. Does the “hot spot” exist? Theory says it should, if GHGs are warming the surface significantly. But proof has been elusive. In Figure 4 we plot the surface temperature from the ERA5 weather reanalysis versus the reanalysis temperature at 300 mb (also 300 hPa or about 10 km). The curves below are for most of the globe, and the data come from the KNMI Climate Explorer. I tend to trust reanalysis data; after all, it is created after the fact and compared to thousands of observations around the globe. This plot is one example; you can easily make others on the site.

Figure 4. ERA5 weather reanalysis temperatures from the surface (2 meters) in orange and at 300 mbar (10 km). We expect a faster rate of warming at 300 mbar than at the surface, but, instead, the rates are almost the same, with the surface rate slightly higher. The El Niños have a higher rate at 300 mbar, which makes sense.
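The comparison in Figure 4 can be put into a single number. Fit a linear trend to each series, then take the ratio of the 300 mbar trend to the surface trend. The sketch assumes you have already downloaded both ERA5 series from the KNMI Climate Explorer as equal-length lists; no data are included here. It uses plain ordinary least squares and ignores autocorrelation, so it is not a significance test like the one in McKitrick and Christy (2018).

```python
from typing import Sequence


def ols_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx


def amplification_ratio(time: Sequence[float], t_upper: Sequence[float],
                        t_surface: Sequence[float]) -> float:
    """Upper-level trend divided by surface trend; above 1 means the upper level warms faster."""
    return ols_slope(time, t_upper) / ols_slope(time, t_surface)


# Hypothetical check with synthetic straight-line series (not ERA5 data):
years = list(range(1979, 2021))
surface = [0.018 * (y - 1979) for y in years]
upper = [1.2 * s for s in surface]
print(round(amplification_ratio(years, upper, surface), 3))
```

A ratio near 1.2 would match the amplification estimate quoted earlier. A ratio near or below 1 would match what Figure 4 shows.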

Surface ocean warming should cause a “hot spot.” We see this in every El Niño in Figure 4. Surface warming due to GHGs should do the same thing, but this is not seen in Figure 4. As I stated above, the models have been tuned to produce an assumed ECS.

Discussion

As a former petrophysical computer modeler, I’m surprised that CMIP5 and the IPCC average results from different models. This is very odd. Standard practice is to examine the results from several models and compare them to observations; this is what John Christy has done in Figure 2. Other comparisons are possible, but his is carefully done to highlight the differences. The spread in model results is huge; some go off scale in 2010. This is not a dataset one should average.

When I was a computer modeler, we would choose the one model that appeared to be the best and average multiple runs from just that model. We never averaged runs from different models; it makes no sense, because they are incompatible. I still think choosing one model is the “best practice.” I’ve not seen an explanation for why CMIP5 produces an “ensemble mean.” It seems to be an admission that they have no idea what is going on; if they did, they would choose the best model. I suspect it is a political solution for a scientific problem.

Also, the results (see Figures 1 and 2) suggest that the models are out of phase with one another; Figure 2 is a pile of spaghetti. Since it is obvious that natural variability is cyclical (see Wyatt & Curry, 2014; Scafetta, 2021; Scafetta, 2013; and Javier’s posts here and here), this odd practice of averaging out-of-phase model results completely wipes out natural variability and makes it appear nature plays no role in climate. Once you do that, you have no valid way of computing the human impact. They have designed a method that guarantees the computation of a large ECS. Sad.
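A toy ensemble can illustrate the claim that averaging out-of-phase runs wipes out natural variability. Every run below is hypothetical. Each has the same linear trend plus one sinusoidal cycle, and the cycles are spread evenly in phase. The trend, amplitude, and 60-year period are assumptions chosen for illustration, not values from any CMIP5 model.

```python
import math


def synthetic_member(years, trend_per_yr, amplitude, period_yr, phase_rad):
    """Hypothetical model run: a linear trend plus one sinusoidal 'natural' cycle."""
    return [
        trend_per_yr * t + amplitude * math.sin(2 * math.pi * t / period_yr + phase_rad)
        for t in years
    ]


def ensemble_mean(members):
    """Point-by-point average across runs of equal length."""
    return [sum(values) / len(values) for values in zip(*members)]


years = list(range(121))
n_members = 8
# Assumed toy parameters: 0.01 C/yr trend, 0.2 C amplitude, 60-year cycle,
# phases spread evenly around the circle (fully out of phase).
members = [
    synthetic_member(years, 0.01, 0.2, 60.0, 2 * math.pi * k / n_members)
    for k in range(n_members)
]
mean = ensemble_mean(members)
residual = [m - 0.01 * t for m, t in zip(mean, years)]
print("largest cycle left in one run:", max(abs(x - 0.01 * t) for x, t in zip(members[0], years)))
print("largest cycle left in the mean:", max(abs(r) for r in residual))
```

With evenly spread phases, the cycle cancels completely in the mean while the trend survives untouched. Random phases give only partial cancellation: the leftover cycle shrinks roughly as one over the square root of the number of runs. Real model runs also differ in their trends, so this is the idealized case.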

Works Cited

IPCC. (2013). In T. Stocker, D. Qin, G.-K. Plattner, M. Tignor, S. Allen, J. Boschung, . . . P. Midgley (Eds.), Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge: Cambridge University Press. Retrieved from https://www.ipcc.ch/pdf/assessment-report/ar5/wg1/WG1AR5_SPM_FINAL.pdf

McKitrick, R., & Christy, J. (2018). A test of the tropical 200- to 300-hPa warming rate in climate models. Earth and Space Science, 5(9), 529-536.

Scafetta, N. (2021, January 17). Climate Dynamics. Retrieved from https://doi.org/10.1007/s00382-021-05626-x

Scafetta, N. (2013). Discussion on climate oscillations: CMIP5 general circulation models versus a semi-empirical harmonic model based on astronomical cycles. Earth-Science Reviews, 126, 321-357.

Wyatt, M., & Curry, J. (2014, May). Role for Eurasian Arctic shelf sea ice in a secularly varying hemispheric climate signal during the 20th century. Climate Dynamics, 42(9-10), 2763-2782. Retrieved from https://link.springer.com/article/10.1007/s00382-013-1950-2#page-1

Wyser, K., van Noije, T., Yang, S., von Hardenberg, J., O’Donnell, D., & Döscher, R. (2020). On the increased climate sensitivity in the EC-Earth model from CMIP5 to CMIP6. Geoscientific Model Development, 13, 3465-3474.

Oh I’ll tell you the problem!

http://phzoe.com/2021/02/06/greenhouse-gases-are-coolants/

This is what I was taught back in the stone age (’50s) when CO2 was 280 ppm. Such numbers you never forget.

Temperature is regulated through pressure by gravity anyway, the real question is the original reason for putting thermometers at airports… increasing the temperature over a runway decreases the air density without any meaningful change in pressure… it literally maintains the same amount of energy per volume. How much does the density change affect the specific heat of a cubic centimeter of air per calorie input?

All the pseudoscience done by the IPCC morons assumed a fixed mass per volume. They can’t even pass grade school science class.

Zoe, go away with your click bait.

Hans, in this case Zoe is correct. CO2 will not cause warming. It can’t.

Using standard physics information, there are about 1.07E16 CO2 molecules in a cubic meter of air. Using the Planck constant, a cubic meter of air has about 0.0001 J caused by CO2 emissions.

This warming people speak of is not mentioned in specific heat tables, the Shomate equation, nor the NIST data sheet of CO2.

The forcing equation is BS as it neglects the increased mass of the carbon atoms.

“… tuning the models changes ECS and the modelers closely watch the value when tuning their models.”

and, tuning to expectation = pseudoscience = Cargo-cult science.

GCMs are Cargo-cult science, as in, “Those finicky cargo planes will start landing if we can just get our runway layout just right. Send more money so we can keep making the adjustments.”

They average it out because it is a consensus science, not empirical.

Settled science 😉

Biden goes full on Solyndra.. https://www.google.com/amp/s/qz.com/1968184/biden-could-prove-the-solyndra-scandal-wasnt-a-failure/amp/

Oh, good grief!

“Eichacker says, ‘The costs of climate change are incomprehensibly large [and] the downside of losing on a few more Solyndras pales in comparison to not trying to do more.’ In other words, better to suffer through a Solyndra than miss out on a Tesla.”

This is the problem now: leftists driving energy policy 🙁

Yes, the cost of changing the weather is incomprehensibly large.

I still can’t work out what would be wrong with “missing out on a Tesla”. There’s little remarkable about one more electric cart, which will inevitably need fossil fuels to keep it on the road.

All EVs need fossil fuels to keep them on the road.

Exactly. It’d be a heck of a lot cheaper to buy and putter around in a little electric golf cart if that’s what you feel compelled to do.

Just get the government OUT of venture capitalism. Let those who want to risk their capital risk it, and reap the success or failure from their ventures. This is NO place for government!

It astounds me that so many people don’t understand that simple idea. If people want to risk their money, good luck to them. I just don’t want government to risk my money.

A major part of the problem is the large number of people who can’t conceive of ANYTHING being done without government involvement.

The ‘Nanny State’ is an illusion, though, but an illusion that an indoctrinated public believes is giving them value for their money. The reality is that they just make themselves and their friends wealthier while letting the cities turn into shit-holes. Socialism has been a failure everywhere and in every way it has been tried.

This is a very sanitized and spun version of the Solyndra debacle that blames the China boogieman—the real causes were the idiotic module design that doomed the idea on the drawing board and the technical incompetence of the DoE program managers who thought it had a chance of success.

“I suspect it is a political solution for a scientific problem.”

It is that for sure, but also follow the money. Once someone like the IPCC chooses the winner, everybody else can pack it up and go home. Do you know how much money climate scientists and universities/colleges/NGOs will lose when this happens? Ain’t going to happen. Besides, it is an automatic propaganda winner to have multiple “models” all showing somewhat the same thing.

When I hear the meme, “Follow the money,” I can’t help but think of Hunter Biden and the old joke that “Cocaine is God’s way of telling you that you have too much money.”

As for Andy’s question about why the IPCC averages an ensemble of models that are all (or mostly) clearly wrong: he does touch on the fact that this is a political solution, a solution of inclusion. By including all the international players (model teams) who play along with the scam, it gains political support from the teams’ governments, which want to look like they are “following science.” This is done to garner a “consensus,” to wave it in front of ignorant reporters and politicians, and thus to dupe the public with pseudoscience as the shakedown continues.

Consensus is the realm of politics and religion, of which climate change is both. Climate modeling and by extension most of climate science that seeks to calculate an ECS with that crap is just simply junk science through and through.

The CMIP process IS consensus science at work. Nothing more.

And as the late Michael Crichton said, “If it’s consensus, it’s not science. If it’s science, it’s not consensus.”

The IPCC was designed as a political solution to provide the rationale for imposing climate policies and to make it look like science.

One could just as easily get out one’s deluxe box of coloured pencils and draw a series of squiggles, between 0C and two temperature extremes (say 1.5C and 4.5C) over the next 30 to 40 years and call it science. It would be indistinguishable and just as accurate as the GCM generated image.

Just as inaccurate as the …

My point was: if random scribbles produced with a box of coloured pencils are indistinguishable from the methodical output of state of the art climate models, what does that say about their scientific value, never mind their predictive value?

The question I have is why they get away with showing actual temperature history against the spaghetti chart, which includes all the RCPs. We are clearly nowhere near any of the RCP scenarios where GHGs are significantly reduced; we are on, and have been on, RCP8.5, and so says the National Academy of Sciences.

Tom, the model runs in Figure 2 use historical forcing to 2006 and RCP 4.5 after 2006. I’ve changed the caption to reflect that.

Edward Norton Lorenz (May 23, 1917 – April 16, 2008) was an American mathematician and meteorologist who established the theoretical basis of weather and climate predictability, as well as the basis for computer-aided atmospheric physics and meteorology.

He discovered that the climate is a chaotic system. That means it is so sensitive to initial conditions that it can’t meaningfully be predicted. Given that, and given that nobody has refuted his observation, why do people continue to write climate models in the conventional manner?

Shhhhhh, stop interfering with sciency talk by recalling facts.

Follow the money.

He discovered that weather is chaotic. The climate of a region not so much.

Evidence?

I would have thought it was self evident.

So, Loy-dumb HAS NO EVIDENCE……

AS ALWAYS

You “would” have thought…… but you are NOT CAPABLE OF IT.

Translation: Loydo has no evidence but can’t bring himself to admit it.

That’s always the sign that you’re about to get something wildly wrong … you “thought”. The only “self evident” thing here is your ignorance.

So self-evident you can’t actually produce any?

Exactly, commieBob, always ask the neo-marxists questions. They hate that because they never have rational answers.

Another Loy-dumb zero-evidence fairy-tale.

How pointless and totally irrelevant.

I have read your comments here for a long time, and they show me that you contradict yourself, because your local head climate seems to be very chaotic. 😀

SCNR

Climate is the integral of weather, i.e., the temperature profile. If weather is chaotic, then so is the climate.

Mr. do: “He discovered that weather is chaotic. The climate of a region not so much.”

I’ve read elsewhere that climate of a region is basically thirty years of weather, averaged. So take chaos, average it, and you… what… lose the chaos??!! So you take chaotic, random numbers between 2 and 12, average them and you may get 7. This does NOT mean every throw thereafter comes up 7. Play craps and find out the hard way.

“Climate is a coupled, non-linear, chaotic system” (IPCC). Climate is regional, by definition.

You’ve got some ‘splaining to do, son. Please forward your falsifications of Lorenz.
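The dice analogy can be checked by simulation. The long-run average total of two dice is 7, yet individual throws still span 2 to 12, and only about one throw in six is a 7. The sketch covers only the statistics of the analogy. It does not settle whether regional climate averages are predictable, which is the point under dispute.

```python
import random


def roll_two_dice(rng: random.Random) -> int:
    """Total of two fair six-sided dice (2 to 12)."""
    return rng.randint(1, 6) + rng.randint(1, 6)


rng = random.Random(2021)  # fixed seed so the run is repeatable
throws = [roll_two_dice(rng) for _ in range(100_000)]
mean = sum(throws) / len(throws)
share_of_sevens = throws.count(7) / len(throws)
print(f"mean {mean:.3f}, range {min(throws)}-{max(throws)}, sevens {share_of_sevens:.1%}")
```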

You’ve got to be careful what you define your regions as being. North Dakota, Kansas and Oklahoma are all part of the central plains region but have vastly different climates. Look at the agricultural “growing regions” or growing season maps and you’ll get a lot better definitions of climate regions, at least in the US. Kansas City and Lincoln are pretty close together but have big differences in their heating and cooling degree-days. Yet St Paul and Denver are quite distant with very similar cooling and heating degree days.

Considering climate to be local has always seemed to work for me.

That was supposed to be my point, such as it was. I was alluding to my high school definition of climate (60+ years ago): “climate is the average weather at a particular location over time.” In my province we have numerous climates, from West Coast Marine to Mediterranean, Alpine, Tundra, Taiga and so on. Each has its own particular set of causes. Trying to pretend there could be some average climate for British Columbia is just silly. Applying that standard to the whole planet makes a mockery of global “climate change” as a concept.

Thanks for the clarification. I agree with you 100%

Reasons! That’s why.

Post modern science don’t need no logic.

“…don’t need no stinkin’ logic…”

So that’s why climate Scientologists call their work projections AKA soothsaying

The spaghetti graph does resemble goat entrails.

Andy, very nice follow-up post. My compliments.

A key question you implicitly raise is how does Russian climate model INM-CM4 differ from the rest in order to track observations?

I am going from memory of a long analysis of that question by Ron Clutz of Canada (his blog is, IIRC, Science Matters) some years ago.

These comments may allow newer WUWT readers to focus on the BIG climate model issues, and research for themselves the papers and data underlying them.

As just one example taken from the Climate chapter of TAOT, Andrew Dessler’s second paper in 2010 purported to find positive cloud feedback by comparing clear-sky to all-sky satellite TOA IR. This was promptly amplified by NASA on its website. The fundamental problem is that his data form an almost perfect shotgun pattern with an r^2 of 0.02: NOTHING, NO TREND. And he should have known that from the get-go if he had any stats competence at all. That paper arguably constitutes academic misconduct.

And as icing on this somewhat mathematical cake, IF you assume cloud feedback is 0 and that WVF is half of the explicit AR4 value (which was 2.4 from 1.2) because of the rainfall discrepancy, and then you plug that net-net Bode ~0.25 into Lindzen’s curve based on a zero-feedback ECS of 1.2C, then ECS is ~1.6. That is what Lewis and Curry concluded as the most likely estimate in their series of energy-budget ECS papers. QED.
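The arithmetic in this comment follows the standard feedback-gain relation ECS = ECS0 / (1 − f), where ECS0 is the no-feedback sensitivity and f is the net feedback factor. A minimal sketch follows, taking the comment’s 1.2C no-feedback value and ~0.25 net factor as given. The f = 0.5 case is simply the factor that reproduces the 2.4 figure attributed to AR4.

```python
def feedback_ecs_c(ecs_no_feedback_c: float, feedback_factor: float) -> float:
    """Equilibrium sensitivity from the feedback-gain relation ECS = ECS0 / (1 - f).

    f is the net feedback factor (dimensionless); f >= 1 would mean a runaway response.
    """
    if feedback_factor >= 1.0:
        raise ValueError("feedback factor must be below 1")
    return ecs_no_feedback_c / (1.0 - feedback_factor)


ECS0_C = 1.2  # no-feedback sensitivity used in the comment, deg C per doubling
print(round(feedback_ecs_c(ECS0_C, 0.5), 2))   # factor that turns 1.2 into AR4's 2.4
print(round(feedback_ecs_c(ECS0_C, 0.25), 2))  # the comment's net "Bode ~0.25"
```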

Thanks Rud. Good analysis. How did we find ourselves in the position of having to prove the alarmists are wrong? We believe that climate is controlled by nature; that is the default position! Now we have to prove humans do not control the climate, the sci-fi position. Weird.

It has roots in the sociopolitical complex and uncivil rights, where there is a presumption of guilt until proven otherwise. A profitable model that has, surprising many, transferred to science.

You are trying to prove the shaman’s visions are not reality. Has that ever worked for anyone, anywhere, at any time?

Climate alarmism has turned science upside down.

Yes, a good analysis.

Andy, would you have a link to a higher resolution copy of fig.2? I looked on the linked paper in your piece but couldn’t see it.

Thanks.

Alastair, click on Figure 2 and it will appear in full resolution.

Thanks Andy but either I or my computer (or both) must be pretty dumb…clicking on the image (in Firefox) only gets me a 45Mb copy and it’s hard to read the model numbers on the right. Is there a better way? Or am I doing something wrong…or is 45Mb full resolution?

The mysteries of the internet. I can’t click on it either; usually you can. Try this link:

Click on the image above, that should show it in full resolution.

Thanks for this but it is still only 112Kb and still a bit hard to read the model names. However, many thanks for your private message with the best solution.

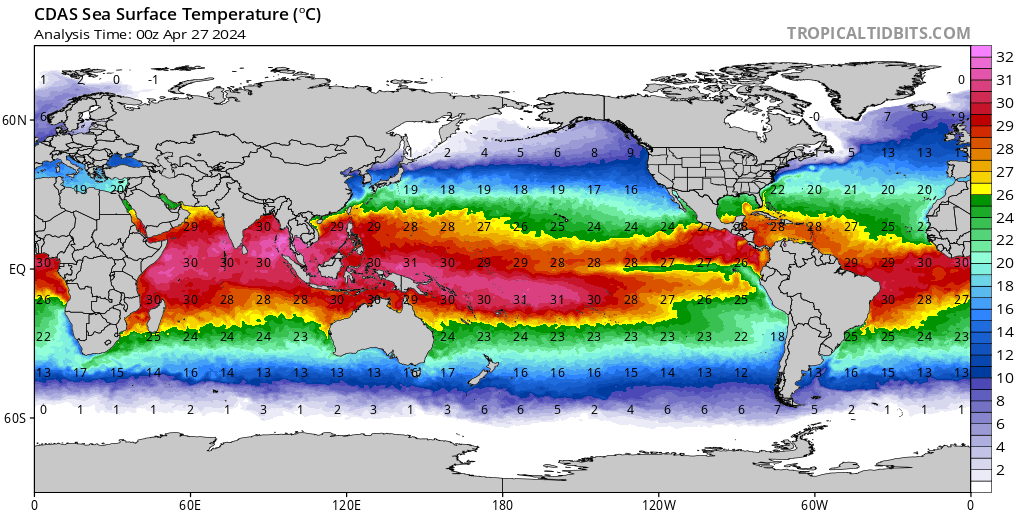

The INM model is much more realistic with ocean warm pool temperature:

http://climexp.knmi.nl/data/icmip5_tas_Amon_inmcm4_rcp85_160-179E_-5-5N_n_+++.png

It gets up to 302K (about 28.9C) in the Nino4 region. It is low now and needs to be at the set point of 30C to be correct.

By contrast, the GISS model achieves the physically impossible:

http://climexp.knmi.nl/data/icmip5_tas_Amon_GISS-E2-H_p3_rcp85_160-179E_-5-5N_n_+++.png

With the tropical warm pool reaching 307K (about 33.9C). That is something that cannot occur on planet Earth in the next hundred years or the next hundred million.

Just to compare with reality, albeit over a shorter time frame:

http://climexp.knmi.nl/data/itao_sst_160-179E_-5-5N_n.png

It is quite clear the temperature gets controlled at 30C. Some overshoot but the regulation is quite tight.

Real time data from 0N, 156E when it was in the warm pool shows just how well the thermostat works.

This stable 30C figure stems from the temperature vs. water vapour curve, which rapidly increases the rate of evaporation. Evaporation occurs at constant temperature, thus at a Planck sensitivity coefficient of zero, and hence provides a strong NEGATIVE feedback to temperature increase. It explains why the oceans never get above around 32C in spite of millions of years of relentless solar radiation.

The computer models do not incorporate this factor into their programs as a positive water feedback to the Greenhouse Effect is being FALSELY assumed.

This definitely needs to be put right.
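A standard Magnus-type fit for saturation vapour pressure shows how steep the temperature vs. water vapour curve is; the coefficients are from Alduchov and Eskridge (1996). This sketch only shows how fast the curve rises, about 6% per degree near 30C. It does not by itself demonstrate the thermostat mechanism argued in this comment.

```python
import math


def saturation_vapour_pressure_hpa(t_c: float) -> float:
    """Saturation vapour pressure over liquid water (hPa), Magnus form.

    Coefficients 6.1094, 17.625 and 243.04 are the Alduchov & Eskridge (1996) fit.
    """
    return 6.1094 * math.exp(17.625 * t_c / (t_c + 243.04))


for t in range(26, 33):
    es = saturation_vapour_pressure_hpa(t)
    growth = saturation_vapour_pressure_hpa(t + 1) / es - 1.0
    print(f"{t} C: {es:5.1f} hPa, +{growth:.1%} per extra degree")
```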

It is not solely the increasing water vapour. The persistence of the cloud increases rapidly once the surface temperature exceeds 28C. At 32C the radiation balance above the surface goes negative. If the surface were internally heated to 34C then there would never be clear sky. Clouds would persist indefinitely.

Also the reason it controls at 30C and not 32C in the warm pool is the result of convergence of moist air from adjacent cooler zones that have not triggered cloudburst. Pools at 30C get about twice the rain that would result from the local long wave emission while the adjacent areas have about half the rain. If there is no convergence then the temperature reaches almost 32C.

In July and August, the Persian Gulf is the warmest sea surface on the globe. It can reach 35C. The rate of evaporation is so great that it creates a strong current through the Strait of Hormuz. The other unique feature of the Persian Gulf is that it rarely experiences cloudburst and is the only sub-tropical water above 28C that has never experienced a cyclone. Convective instability does not occur in the Gulf because the convective potential does not develop due to the dry northerly winds. The humidity near ground level is high but there is no level of free convection to enable instability.

Except cloud feedback is negative, so ECS is even less.

Possibly. But I do not have sufficient data to prove it so. Zero is sufficiently controversial, and still very provable.

The revised climate model for 7 Feb 2021:

Average Global Temperature = {30 + (-2)}/2 = 14C

So simple it makes me smile about all the religious nonsense that gets dragged into Climate Change.

Not to Nick. He drunk the Koolaid and is incapable of changing his mind.

Yes, if they knew what they were doing, by now they would have only one model, the one that best fits the real climate data. Having so many models in the first place means that a lot of guesswork was involved. Averaging different errant models is nonsense. It would appear that politics, not science, has driven their effort, so all conclusions based on the errant models are nothing but nonsense, not science. So the Russian model is the only one that seems to be doing at least a reasonable job predicting our global climate. I wonder how good this model is at reconstructing the past. Has any parameterization been used in the Russian model? What they should do now is discard all of the errant models and concentrate on making variations to the Russian model to see if they can achieve a better fit. How exactly is CO2-based warming handled in the Russian model? It is my understanding that others have produced models, though not full climate simulations, that have reasonably predicted today’s climate without using any CO2-based warming at all.

“…..Averaging different errant models is nonsense……”

When a hurricane crosses the mid-Atlantic, weathermen show the various models’ storm-track predictions, followed immediately by the average track of the ensemble. It is a method to actually avoid responsibility for the correctness of the prediction. As landfall approaches, the various models are fed the latest information and generally converge on a landfall close to the real one.

Climate models seem to be missing the convergence information.

No. That is different.

The hurricane tracking is using the same model – the same understanding – and just running it multiple times with slightly different initial inputs. This is reasonable as we cannot measure all inputs perfectly. The range of outputs show the reasonable possibilities from our best understanding.

Climate science has no way of distinguishing our best understanding. So they take different models and average the outputs. A model that says x leads to more rain is averaged with a model that says x leads to less rain. But only one of those models can be right. Or at most one.

It doesn’t give the range of plausible outcomes. It shows meaningless gibberish.

IIRC, Gavin Schmidt said the average of the models gives the right answer.

Say what?

It is obvious that Gavin Schmidt does not know what he is talking about.

Answers to your questions about INMCM5:

https://rclutz.wordpress.com/2018/11/16/latest-results-from-first-class-climate-model-inmcm5/

Why is everyone hung up on IPCC junk science, when NASA knows the truth:

http://phzoe.com/2021/02/06/greenhouse-gases-are-coolants/

Even the IPCC knows the truth and has already stated it. Ottmar Edenhofer has clearly stated that the purpose is redistribution of wealth and nothing more. They just publish the Summary for Policymakers to give politicians something to defraud the taxpayers with.

s/policymakers/dictators/

Andy,

Thanks for what you have been doing. The shame is that not one single Democrat will read your analyses and understand what you’ve been saying. Not one. Not a single one. They are too tied up in censorship and the money they can grub from CAGW!

You have power to change that. Do so.

How well the models do at predicting seems to depend a lot on which RCP/SSP you choose. Since the beginning of the CMIPs, is there any basis for arguing that we have not followed the worst-case scenario? If we have followed it, then the models seem drastically off. This always gets fuzzed up by showing the spaghetti charts, which include all of the scenarios. Can you or anyone comment, hopefully with charts? Thanks.

Nice post Andy – very well written. Slightly off topic, but I recall reading in the past, and was wondering if you could verify, that the GCMs need to assume an atmospheric viscosity on the order of that of molasses to prevent the model results from exploding. If this is the case, and given McKitrick and Christy’s work above, in addition to Pat Frank’s work on error propagation, how is it even remotely possible that these models have any scientific standing?

Frank, I cannot verify that. Maybe someone else can. Each of the models has scientific standing, but the average of them does not. You might as well average the flight path of birds.

A linear progression is not a model.

Good article, Andy.

All of these models are based on the false premise that the mean downwelling IR from increasing opaqueness of more gases controls the surface temperature and upwelling IR.

Andy

Thanks for the article.

It is clear the models are wrong.

And

It is bs to average them.

But initial inaccuracy doesn’t mean a model doesn’t have future value.

( nearest to the pin doesn’t imply the best golfer)

In your opinion which of the 15 models are total duds to be discarded and which ones get it sort of right and should be progressed.

Thanks in advance

Those that predict observations reasonably well should be studied. Those that don’t should be discarded. Those that remain can be studied individually, but don’t average them, that is a useless waste of time and money.

We have almost 20 years of decent surface temperature measurements, use those. We also have 40 years of decent satellite measurements. We can see which models are useful and which are BS.

Seems models are just opinions, the opinion of the modeler. I wish the bas**rds would keep their opinions to themselves.

eyesonu,

It is true that the model writer’s opinions and many of his subjective decisions make it into the model he writes; it is unavoidable.

Re: Figure (table) 1, from IPCC:

The last two rows are odd, “Model mean” and “90% uncertainty”:

(3.7) (3.4) (3.2) (1.8)

(0.8) (0.8) (1.3) (0.6)

I calculated the means and standard deviations from the data in the table as:

(3.71) (3.53) (3.14) (1.79)

(0.495) (0.590) (0.934) (0.340)

The means can be explained as rounding differences, but what are they calling “uncertainty”? Calculating the ratio of the uncertainties to the standard deviations:

(1.62) (1.36) (1.39) (1.76)

Corresponding 90% Student’s t values for n-1 d.f. are:

(1.48) (1.36) (1.36) (1.35) — one-sided

(2.02) (1.80) (1.80) (1.76) — two-sided

Note the inconsistencies. Whatever they are calling “uncertainty” doesn’t follow the GUM for expanded uncertainty, which uses 2 as the standard coverage factor from combined to expanded (two-sided t = 1.96 for 95% coverage). Also, each individual value in the table should have its own combined uncertainty that includes all sources of uncertainty, not just standard deviation.

A histogram of TCR (has the most number of points of the four columns) is non-normal with two peaks.

Asking climate scientist to do statistics is a fool’s errand. They would rather make up techniques and claim their circular reasoning is proof.

Monte Carlo, not all the rows and columns are shown. As I say in the post, that is just part of the table. Go to AR5 WG1 page 818 and you can see the whole table. It was too big to reproduce it all.

Ah, ok, will do; I’ve never bothered to wade through any of them.

I pulled out the ECS and TCR columns from the full table, which have the fewest missing entries. The means and s.d. to three digits agree:

ECS: 3.22, 0.801

TCR: 1.83, 0.373

As suspected, the “uncertainty” row is merely the s.d. expanded by Student’s t for 90%, two-sided, infinite d.f. = 1.645, expressed to one digit after the decimal.
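The check on the "uncertainty" row can be sketched with Python's standard library. This assumes only the two standard deviations quoted above; the 90% two-sided factor for infinite d.f. is the 95th percentile of the standard normal distribution.

```python
from statistics import NormalDist

# Two-sided 90% coverage with infinite degrees of freedom = standard normal 95th percentile.
z90 = NormalDist().inv_cdf(0.95)

# Standard deviations computed from the full ECS and TCR columns (as quoted above).
sd = {"ECS": 0.801, "TCR": 0.373}

# Expand each s.d. and round to one decimal, as the table does.
expanded = {name: round(z90 * s, 1) for name, s in sd.items()}
print(round(z90, 3), expanded)
```

Both rounded results match the (1.3) and (0.6) entries in the table's last row.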

The TCR histogram is roughly normal, but the ECS histogram is tri-modal, with peaks at ~2.7 (7 models), ~4 (5 models), and ~4.7 (two models).
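One simple way to flag multiple peaks is to bin the values and look for bins whose counts beat both neighbours. This is a rough sketch, assuming a fixed bin width of 0.5; the values are made up for illustration and are not the AR5 Table 9.5 entries. The peaks found depend on the bin width chosen.

```python
from collections import Counter

def histogram(values, width):
    """Count values into bins of the given width; returns {bin_start: count},
    including empty bins between the lowest and highest occupied bins."""
    counts = Counter(int(v // width) for v in values)
    lo, hi = min(counts), max(counts)
    return {i * width: counts.get(i, 0) for i in range(lo, hi + 1)}

def peaks(hist):
    """Bin starts whose count is strictly greater than both neighbours
    (a missing neighbour at either end counts as zero)."""
    keys = sorted(hist)
    found = []
    for j, key in enumerate(keys):
        left = hist[keys[j - 1]] if j > 0 else 0
        right = hist[keys[j + 1]] if j + 1 < len(keys) else 0
        if hist[key] > left and hist[key] > right:
            found.append(key)
    return found

# Made-up values for illustration only; these are NOT the AR5 Table 9.5 entries.
values = [2.2, 2.6, 2.7, 2.8, 2.9, 3.2, 3.7, 4.0, 4.1, 4.3, 4.6, 5.0, 5.1]
print(peaks(histogram(values, 0.5)))
```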

Scanning through Chapter 9, the word uncertainty is used in many places for the myriad of model inputs, but only in a non-numeric, hand-waving manner. The GUM is not one of the references; formal measurement uncertainty is never discussed.

Thanks! Good to know. Obviously, averaging the computed ECS values is even sillier if they are not normally distributed.

Remember that the IPCC is an international committee that has to treat each member equally (otherwise they might not participate); without an objective, numeric way of differentiating between individual models, they take the easy way out.

And yes, the meaninglessness of the models begins at calling the average global surface temperature “the climate”.

Keep in mind that the IPCC’s “Summary for Policy Makers” is the most important part of the entire regular IPCC reports.

It is a “summary” prepared by politicians and is not based upon the alleged facts. Many of the IPCC’s alleged facts are contrary to the political demands in the “Summary for Policy Makers”.

The problem with climate models is that:

1) They assume CO2 is the most significant variable

2) They assume CO2 and Temperatures are linearly related

3) They model CO2 and not W/m^2

4) The relationship between CO2 and W/m^2 is logarithmic, not linear

5) A single cloudy day can negate months of the W/m^2 contribution of CO2

6) CO2 and LWIR between 13 and 18 microns won’t warm water

7) The oceans control the climate, what warms the oceans controls the global climate

8) CO2 doesn’t warm the oceans

The oceans are thermostatically controlled; at least while the Atlantic can make it to the 30C upper control point.

There is no “Greenhouse Effect”. CO2 makes no difference at all.

They likely do it for several reasons. None of them honest.

A) Using all of the models, at least the alarmist models, seems to be inclusive.

• Especially when so many folks on the alarmist side take offense when their ideas are not imperatively broadcast as absolute.

B) None of the models match observations AND give the alarmists what they desire. But, multi-model ensembles seem close to their desires.

C) There is a phrase, “Baffle them with bullshit”, often phrased as “bury them with nonsense and paperwork”.

Both are meant to discourage honest reviews.

It seems a bit odd that the only model to match observations reasonably well is labelled an outlier, and is therefore ignored.

The dozens of wrong models aren’t useless though, are they? Their input parameters can be looked upon with suspicion, and may provide good info on how the climate _doesn’t_ work.

Andy,

You said, “As we can see, INM-CM4 is the only model that matches the weather balloon observations reasonably well, yet it is an outlier among the other CMIP5 models.”

Yes, it is the only model whose general trend is close to the balloon temperatures. Strangely, however, it seems to be out of phase with the balloon temperatures!

You also remarked, “… we would choose one model that appeared to be the best and average multiple runs from just that model. We never averaged runs from different models, it makes no sense.”

Logically, there is only one best model. Averaging the best model with the ones that have less skill in forecasting just reduces the accuracy of the forecast!

Andy, you write: “Evaporation and convection are the main mechanism for cooling the surface because the lower atmosphere is nearly opaque to most infrared radiation. The evaporated water vapor carries a lot of latent heat with it as it rises in the atmosphere. … This causes a tremendous release of infrared radiation, some of this radiation warms the surrounding air and some goes to outer space.”

As you can see, I have underlined three expressions that are not correct. I refer to the energy flux numbers of the Earth’s energy balance which are practically identical in all major presentations.

1) Evaporation and convection, which are called “latent heating” (91 W/m2) and “sensible heating” (24 W/m2), are essential in the cooling mechanism of the Earth’s surface but are not the most important one. The main mechanism is the infrared radiation emitted by the surface (395 W/m2) according to Planck’s law. Do you accept this energy flux or not?
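To see what temperature the 395 W/m2 figure implies, the Stefan-Boltzmann law (Planck's law integrated over all wavelengths) can be inverted. This is a minimal sketch assuming a blackbody (emissivity 1); a real surface has emissivity slightly below 1, which would raise the implied temperature a little.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA value).
SIGMA = 5.670374419e-8

def blackbody_flux(t_kelvin, emissivity=1.0):
    """Total emitted flux (W/m^2) of a grey body: F = emissivity * sigma * T^4."""
    return emissivity * SIGMA * t_kelvin ** 4

def equivalent_temperature(flux, emissivity=1.0):
    """Temperature (K) a grey body must have to emit the given flux."""
    return (flux / (emissivity * SIGMA)) ** 0.25

print(round(equivalent_temperature(395)))  # surface emission figure quoted above
print(round(equivalent_temperature(240)))  # OLR / absorbed solar figure quoted above
```

The two results, roughly 289 K and 255 K, are the temperatures a blackbody would need in order to emit 395 and 240 W/m2 respectively.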

2) The lower troposphere is not opaque to most infrared radiation. The observed OLR (Outgoing Longwave Radiation) into space is 240 W/m2. This is possible only because 395 – 240 = 155 W/m2 is absorbed by greenhouse gases and clouds. Do you accept this absorption, or do you think that there is no GH effect at all?

3) You are right that latent heat and sensible heat increase the temperature of the atmosphere, and this means that the energy must be radiated as infrared radiation, because otherwise the temperature of the atmosphere would increase all the time. Where does this radiation go? There is quite a simple answer. The Earth is in energy balance. Incoming solar insolation is 240 W/m2 and OLR is the same as shown by NASA’s CERES satellites. This is required by physical laws, and it has been validated by observations. Because OLR is 240 W/m2, it is not possible that latent heating and sensible heating cause extra infrared radiation into space, not even 1 W/m2. If that were so, where would the rest of the 240 W/m2 of solar irradiation go? A simple question, but I would like to see what place could receive so much energy without warming continuously.

Yes, and by the way, the infrared radiation emitted by the atmosphere due to latent and sensible heating must come down to the surface together with the 155 W/m2 of GH gas and cloud absorption. In total, these fluxes are 270 W/m2, which is the magnitude of the GH effect. Together with the 75 W/m2 of shortwave absorption, the result is 345 W/m2 of infrared radiation absorbed by the surface. Do you accept that this so-called reradiation exists, or do you deny it?
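The flux bookkeeping in points 2 and 3 and the paragraph above can be written out step by step. This sketch only reproduces the arithmetic using the figures quoted in the comments; it does not test the physics behind them.

```python
# Global-mean fluxes in W/m^2, as quoted in the comments above.
surface_lw_emission = 395
olr = 240
latent_heating = 91
sensible_heating = 24
sw_absorbed_by_atmosphere = 75

# Longwave absorbed by greenhouse gases and clouds (point 2 above).
lw_absorbed = surface_lw_emission - olr
# Absorbed LW plus the latent and sensible heat carried up from the surface.
greenhouse_flux = lw_absorbed + latent_heating + sensible_heating
# Adding the shortwave absorbed in the atmosphere gives the reradiation figure claimed.
reradiation_to_surface = greenhouse_flux + sw_absorbed_by_atmosphere
print(lw_absorbed, greenhouse_flux, reradiation_to_surface)
```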

Here is a link showing that a network of 59 stations, hosted at the Alfred Wegener Institute (AWI) in Bremerhaven, Germany, has measured the reradiation flux since 1992 and confirms its magnitude. Link: https://www.research-collection.ethz.ch/bitstream/handle/20.500.11850/286337/essd-10-1491-2018.pdf?sequence=2&isAllowed=y

Antero,

I think my statements are fine if you read them through.

By surface, I mean below 2 meters altitude. Very little IR emitted from below 2 meters makes it to space; it is absorbed by water vapor or other GHGs.

This is simply true: only around 10% of the IR (in the GHG window, mostly) emitted by the Earth’s surface makes it to space.

Most of the IR in the CO2 and H2O frequencies that makes it to space comes from the cloud tops or higher in the atmosphere, where there is little water vapor. Convection takes it to the clouds and then the water vapor condenses; the air above the clouds has little WV, so it is easier to emit to space from there.

The emission spectrum from the Earth shows this (see nearby). The emission from the Sahara is 320 K (47 °C) from the surface, 220 K (-53 °C) from the upper atmosphere, and 260 K (-13 °C) from water vapor at cloud height. Other areas are shown for comparison.
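The temperatures above are read off Planck curves. This sketch converts them to Celsius and evaluates Planck's law in wavenumber form for each one. The unit, mW m^-2 sr^-1 (cm^-1)^-1, is the one commonly used for satellite IR spectra. The two sample wavenumbers are illustrative choices: 667 cm^-1 is near the 15 micron CO2 band and 900 cm^-1 lies inside the 8-12 micron window.

```python
import math

# Physical constants (SI, exact values since the 2019 SI redefinition).
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def kelvin_to_celsius(t_k):
    """Convert a temperature from kelvin to degrees Celsius."""
    return t_k - 273.15

def planck_wavenumber(nu_cm, t_k):
    """Blackbody spectral radiance at wavenumber nu_cm (cm^-1) and temperature t_k (K),
    in mW m^-2 sr^-1 (cm^-1)^-1."""
    nu = nu_cm * 100.0                                   # cm^-1 -> m^-1
    x = H * C * nu / (K_B * t_k)
    b = 2.0 * H * C ** 2 * nu ** 3 / math.expm1(x)       # W m^-2 sr^-1 (m^-1)^-1
    return b * 100.0 * 1000.0                            # per cm^-1, and W -> mW

for t in (320.0, 260.0, 220.0):
    print(t, round(kelvin_to_celsius(t), 2),
          round(planck_wavenumber(667.0, t), 1),   # near the 15 micron CO2 band
          round(planck_wavenumber(900.0, t), 1))   # inside the 8-12 micron window
```

A colder emitting layer gives a lower curve at every wavenumber. That is why emission from high, cold layers shows up as a dip in the measured spectrum.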

Note: there are portions of the IR spectrum that are not absorbed by GHGs, these frequencies go straight to space.

Andy,

If the CO2 and H2O are saturated during the day by the sun’s IR, then wouldn’t most of the Earth’s IR emission during the day make it through to space? The ability of H2O and CO2 to absorb IR is not infinite.

This wouldn’t be the case during the night. But most of the CO2-absorbed IR at night probably thermalizes, since the collision time is much shorter than the radiation time. This is why not much IR makes it to space.

Tim,

The emission spectrum, measured by satellite, suggests most of the IR in the CO2 spectrum and in the WV spectrum comes from high in the atmosphere, both in the daytime and at night. Good, I can post images; see the attached image. The smooth curves are temperatures; the data is the rough line. These are emission spectra from the Sahara, Antarctica, and the Mediterranean. The upper curve is surface emission, from the parts of the spectrum that are not absorbed by GHGs. CO2 emits mostly from the stratosphere and water from the upper troposphere.

Most of the IR measured by satellites comes from high in the atmosphere, where molecules are much sparser, so thermalization is much less and radiation is higher.

These graphs don’t tell me *when* these measurements were made.

Those spectra are probably taken at night, during the day there is some interference from sunlight:

https://web.archive.org/web/20180109022617/https://what-when-how.com/space-science-and-technology/weather-satellites/

True.

Andy and Jim,

I have personally carried out dozens of spectral calculations of what happens in the atmosphere. The first lesson is that if you remove the surface emission source from the calculation, there is practically no radiation into space. The atmosphere receives its energy, which originates from the Sun, partly directly (75 W/m2) and partly from the surface (165 W/m2). It comes in four different forms: absorption of SW and LW radiation, and latent and sensible heat. The LW absorption is very rapid: 90 % is complete by 1 km altitude and 95 % by 2 km altitude.

The original source of latent and sensible heat is from the sun. The GH effect radiation 270 W/m2 just recycles between the surface and the atmosphere.

Andy did not answer whether he accepts that the surface radiates 395 W/m2. About 85 W/m2 of this LW radiation passes into space without any absorption through the so-called atmospheric window. 85 is about 20 % of 395 W/m2. The cloud tops are not needed for emitting LW radiation into space. Remember that roughly one day in three is cloudless: the cloud fraction is about 67 %. In clear-sky conditions, OLR is about 270 W/m2, and in cloudy conditions it is much less: about 228 W/m2.
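The figures in this comment can be cross-checked against the 240 W/m2 OLR quoted earlier. This is a small sketch assuming that clear and cloudy areas combine as a simple area-weighted average, using only the numbers stated above.

```python
# Figures quoted in the comment above (W/m^2 and fractions).
surface_emission = 395
window_flux = 85
cloud_fraction = 0.67
olr_clear = 270
olr_cloudy = 228

# Share of surface emission escaping through the atmospheric window.
window_share = window_flux / surface_emission

# Area-weighted all-sky OLR, assuming clear and cloudy areas simply add by fraction.
olr_all_sky = cloud_fraction * olr_cloudy + (1 - cloud_fraction) * olr_clear
print(round(window_share, 3), round(olr_all_sky, 1))
```

The window share comes out near 21 %, and the weighted OLR is within about 2 W/m2 of the 240 W/m2 figure.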

Many people seem to think that it matters which molecules are emitting radiation into space. It does not. If there were no atmosphere, the 340 W/m2 would be emitted into space anyway.

Andy did not answer whether he accepts the existence of the GH effect or not. I am repeating this simple question because I have noticed that WUWT seems to give publicity to ideas denying the GH effect, or at least questioning the existence of reradiation. Which is it, Andy? You did not answer whether you accept the existence of the 345 W/m2 of reradiation.

If solar insolation is 240 W/m2, then how does it radiate 395 W/m2? Earth is not a heat generator and neither is the atmosphere. The earth can’t radiate more than it gets.

“The GH effect radiation 270 W/m2 just recycles between the surface and the atmosphere.”

It doesn’t recycle. It damps out. The atmosphere is a lossy substance. Sooner or later that reflection from the atmosphere damps to zero because of the loss.

As the earth radiates towards the atmosphere it cools and radiates less. The atmosphere sends “some” of that back, not all, just some. So the earth heats back up a little but not back to where it started. So then the earth radiates that smaller amount back out and cools off again. The atmosphere then radiates an even smaller amount back toward the earth and on and on and on – headed for zero.

If the surface gets 165 W/m2 and radiates away 165 W/m2 and then gets back 100 W/m2 from the atmosphere it doesn’t add up to 165 W/m2 + 100 W/m2. The earth has already radiated away that first 165 W/m2 so it’s 0 W/m2 + 100 W/m2. So then the earth radiates away that 100 W/m2 and gets back 60 W/m2. The earth radiates that away and gets back 36 W/m2 and on and on ….
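The shrinking sequence described above (100, 60, 36 W/m2 and so on) is a geometric sequence with a fixed ratio. This toy sketch assumes a constant ratio of 0.6, inferred from those three figures. It only illustrates the shape of the sequence as the comment describes it, not the atmospheric physics.

```python
def reradiation_sequence(first, ratio, steps):
    """First `steps` terms of the geometric sequence first, first*ratio, first*ratio**2, ...
    A toy version of the 'each exchange returns a fixed fraction' argument above."""
    terms = []
    amount = first
    for _ in range(steps):
        terms.append(amount)
        amount *= ratio
    return terms

# Ratio 0.6 is inferred from the 100 -> 60 -> 36 W/m^2 figures in the comment.
terms = reradiation_sequence(100.0, 0.6, 8)
print([round(t, 1) for t in terms])
```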

Antero, I will let you and Tim debate the energy budget. I’ve not been able to make sense of any of the energy budgets I’ve seen; they all look wrong to me.

The Earth has an outgoing energy spectrum that we can analyze. We also know roughly what the input spectrum is like.

We also know that most of the energy absorbed by the surface is carried away by convection; this is why the lower troposphere has a negative lapse rate. Radiative equilibrium is reached higher in the atmosphere, at the tropopause. That is where as much radiation is sent to space as arrives from the Sun. It is well above the top of significant water vapor and above cloud height. Clouds or not, there is a cloud height: the point where water vapor begins to condense out or freeze.

The various energy budgets depend a great deal on where things happen, and in the real world, where things happen changes minute by minute. A budget is an artificial snapshot of a local atmosphere and not much help.

For some reason images will not post, but trust me, some radiation is emitted from the surface, especially in dry areas like the Sahara, but most is emitted above cloud height.

Most of the LW radiation emitted by the surface has been emitted and reradiated many times. Because of this, one can claim that it is the atmosphere that is emitting radiation into space. But the original source of this radiation is still the surface, despite these multiple absorption/emission events. A simple fact is that most of the energy in the atmosphere comes from the surface, and only a small portion, 75 W/m2, originates directly from the Sun.

Very true and very important.

I have yet to get an answer as to what there is on the earth that absorbs radiation at 14.97 microns and radiates at 14.97 microns. It isn’t quartz or silica, two of the most common materials on earth.

If we can’t identify exactly what substance on earth is radiating at 14.97 microns then how do we establish an energy budget for the earth?

AO –> “Incoming solar insolation is 240 W/m2 and OLR is the same as shown by NASA’s CERES satellites.”

The sun is the only source of energy in the system. As you say, if it is 240 W/m2, then that is all that is available for the atmosphere and open sky to absorb from the earth. Yet the references you are using arrive at 395 W/m2 being radiated by the earth. If energy balance is to be maintained, that simply isn’t possible.

GHGs can only re-emit radiation that has already been emitted by the earth, and the earth cooled when it did so. Any “back radiation” is only going to, at best, raise the temp to what it was, and simply can’t raise it higher. It is not “additional” energy in the system. Where does the extra energy come from? The heat capacity of the earth, water and soil. Think of a torch at 1500 deg heating a cold block of iron. You apply the torch for a minute and take it off for a minute. The equilibrium temperature of the block of iron will rise, but it also cools during the “off” time. While cooling, the block radiates at the equilibrium temperature because of its heat capacity, i.e., the ability to hold heat. The earth is no different. It will continue to radiate as it cools.
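The torch analogy can be sketched as a lumped heat-capacity model integrated with explicit Euler steps. For simplicity, this swaps in linear (Newtonian) heat loss for radiative T^4 loss. All parameter values are hypothetical and chosen only to show the shape of the response. The block ratchets upward cycle by cycle toward a periodic state, but it never reaches the temperature a continuously applied source would produce.

```python
def pulsed_heating(power_on, h, heat_capacity, t_ambient, period_on, period_off,
                   cycles, dt=0.1):
    """Lumped block heated by an on/off source with Newtonian loss:
    C dT/dt = P(t) - h (T - T_ambient), integrated with explicit Euler steps.
    Returns the block temperature at the end of each full on/off cycle."""
    temp = t_ambient
    end_of_cycle = []
    steps_on = round(period_on / dt)
    steps_off = round(period_off / dt)
    for _ in range(cycles):
        for _ in range(steps_on):    # torch applied
            temp += dt * (power_on - h * (temp - t_ambient)) / heat_capacity
        for _ in range(steps_off):   # torch removed: the block only loses heat
            temp += dt * (-h * (temp - t_ambient)) / heat_capacity
        end_of_cycle.append(temp)
    return end_of_cycle

# Hypothetical parameters: 60 s on, 60 s off; steady-state limit is 20 + 500/2 = 270.
temps = pulsed_heating(power_on=500.0, h=2.0, heat_capacity=450.0, t_ambient=20.0,
                       period_on=60.0, period_off=60.0, cycles=10)
print([round(t, 1) for t in temps])
```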

Lastly, you seem to make the same mistake as many people do. Radiation is not bullets (photons). The earth doesn’t fire a bullet and have it ricochet back. Atoms and molecules radiate spherical EM waves, like an expanding balloon. The result is that if GHGs radiate 155 W/m2 downward, they must also radiate 155 W/m2 upward, for a total of 310 W/m2 from GHGs alone.

Thanks Jim,

The basic physics is where I get lost. Energy is not a particle, yet most of these discussions treat it like one. Drives me crazy.

Antero, with regard to your energy budget, I have no comment. The whole “energy budget” concept makes absolutely no sense to me. With regard to the so-called “greenhouse effect” I have written this previously.

https://andymaypetrophysicist.com/the-greenhouse-effect/

The various “energy budgets” in the literature do not capture the complexity of the actual greenhouse effect. They are over-simplifications and misleading, in my opinion. I generally ignore them.

Andy and Tim,

I see that I am right: neither of you accepts the existence of reradiation. There is no reason to continue the discussion. If you do not accept real physical observations, then you have your own physics. The web is full of skeptical people who do not accept the GH effect and reradiation.

For me, it is pretty strange that the most popular contrarian website is on the dark side of science. Even Dr. Spencer could not understand the GH effect, and therefore he accused me of claiming that the energy balance violates physical laws. I have never written anything like that. I wrote unequivocally that the IPCC’s GH effect definition violates the physical laws.

Antero,

Neither of us has said re-radiation doesn’t exist. Don’t put words in our mouths.

What *I* have said is that re-radiation can’t raise the earth’s temperature back to where it was before it first emitted the radiation. It’s a lossy system that winds up damping out. If the earth emits 1 unit of radiation and thus cools by 1 unit then re-radiation by the atmosphere can’t raise the earth’s energy by 1 unit. Some of the 1 unit from earth gets through to space and some gets thermalized via collisions with other molecules in the atmosphere.

The energy balance you claim requires the atmosphere to be a heat source. It isn’t. It’s just that simple.

Adding the atmosphere’s re-radiation to the sun’s radiation and saying that is the total impinging on the earth is just wrong, just plain wrong. That re-radiation *came* from the earth, the earth already lost it. It’s a net negative energy transfer as far as the earth is concerned.

What the atmosphere does do is slow the loss of IR and latent heat to space. Thus it raises MINIMUM temperatures, not maximum temperatures. Something the GCMs just can’t seem to get right.

Andy,

Thanks for clearing up the matter of ECS as an input to GCMs. In fact, this is still often asserted, but it grates on people who know about them. Apart from going contrary to the purpose of modelling to find ECS, it just shows a wrong idea of how GCMs work. There is nowhere you could input an ECS.

“We never averaged runs from different models, it makes no sense. They are incompatible. I still think choosing one model is the “best practice.” I’ve not seen an explanation for why the CMIP5 produces an “ensemble mean.” It seems to be an admission that they have no idea what is going on, if they did they would choose the best model.”

I think you should look more carefully at what the IPCC really says. You have quoted them, not averaging different runs, but averaging some summary statistics like ECS. In your plot it is John Christy who drew the red line for the model mean. I think the IPCC is more careful there. They may occasionally mark a model median, but I think you need to quote where they do “average runs”.

I assume you are referring to the “ensemble mean” when you say “average runs.”

To a modeller, as I say clearly in the post, averaging multiple runs, from one model, is fine, we do it all the time.

The error I think CMIP5 is making is averaging output from multiple models, as they did in their Table 9.5 on page 818. This is malpractice. So is creating the “ensemble mean.”

Averaging runs from one model is fine, although it is better to use multiple runs to compute the max, min, and most likely values; usually the max and min are the 90% or 95% bounds.
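Summarizing many runs of a single model as a low/most-likely/high envelope is straightforward with the standard library. This sketch makes two assumptions: it uses 5th and 95th percentiles (a 90% range) for min and max, and it takes the median as "most likely". The twenty run values are made-up numbers, not output from any real model.

```python
from statistics import median, quantiles

def run_envelope(values):
    """Return (5th percentile, median, 95th percentile) of one quantity across runs."""
    cuts = quantiles(values, n=20)   # 19 cut points at 5 %, 10 %, ..., 95 %
    return cuts[0], median(values), cuts[-1]

# Hypothetical end-of-period warming (deg C) from 20 runs of ONE model; made-up numbers.
runs = [0.62, 0.55, 0.71, 0.48, 0.66, 0.59, 0.74, 0.52, 0.63, 0.68,
        0.57, 0.61, 0.70, 0.50, 0.65, 0.58, 0.73, 0.54, 0.64, 0.60]
low, most_likely, high = run_envelope(runs)
print(round(low, 3), round(most_likely, 3), round(high, 3))
```

For a 95% range, the cut points would come from `quantiles(values, n=40)` instead.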

They use model means to compute the anthropogenic effect on climate. See Figure 10.1 in AR5 on page 879. In the caption it reads:

Arguably, this is the most significant use of the so-called “ensemble mean” in AR5, but they use this mean in other parts of the report also.

Nick, one additional point. Table 9.5, page 818, is composed of values, including ECS, that are computed from model output. My point is that averaging these values makes no sense; the models are too different from one another, as Figure 2 makes clear. The curves are out of phase, and that alone makes averaging them wrong. Thus, averaging the ECS from the models is also wrong.

They need to choose one model and then experiment with different runs, then they have a chance at estimating natural variability. Getting a handle on natural variability is key to convincing people they know what the impact of CO2 and other greenhouse gases is.

They will never know what natural variability is if they average output from multiple models [or an “ensemble” of models] that are incompatible with one another. The various models will cancel each other out and “natural” variability will always be zero.
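The cancellation effect can be shown with a toy example. Three hypothetical "model runs" share the same oscillation but have phases spread evenly over a full cycle. Each run on its own has clear variability, while their average is essentially flat. These are pure sine waves chosen for illustration, not CMIP5 output.

```python
import math
from statistics import pstdev

def oscillation(amplitude, period, phase, n):
    """A toy 'model run': a pure sine wave sampled at t = 0..n-1."""
    return [amplitude * math.sin(2 * math.pi * t / period + phase) for t in range(n)]

n, period, amplitude = 120, 60, 0.2
# Three hypothetical runs with the same oscillation but phases spread evenly over 2*pi.
runs = [oscillation(amplitude, period, 2 * math.pi * k / 3, n) for k in range(3)]
ensemble_mean = [sum(values) / len(values) for values in zip(*runs)]

print([round(pstdev(r), 4) for r in runs])   # each run keeps its variability
print(round(pstdev(ensemble_mean), 12))      # the average is essentially flat
```

Real model runs are not evenly phase-shifted copies of one another, so the cancellation would usually be partial rather than complete.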

One example: if a model has a downward trajectory anytime there is an El Niño peak, then it is wrong and can be disregarded because it can’t calculate reality.

<blockquote>When I was a computer modeler, we would choose one model that appeared to be the best and average multiple runs from just that model. We never averaged runs from different models, it makes no sense. They are incompatible. I still think choosing one model is the “best practice.” I’ve not seen an explanation for why the CMIP5 produces an “ensemble mean.” It seems to be an admission that they have no idea what is going on, if they did they would choose the best model. I suspect it is a political solution for a scientific problem.</blockquote>

Yes, I too have never understood this or obtained any logical explanation from anyone of why they do this. I agree, it makes no sense.

If they are going to do it there should at least be some explanation of the logical justification. And yes, pick the best one as verified against observations, and use it. Why would you not? If this were medicine or engineering you would never think of using their procedure.
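Picking "the best one as verified against observations" can be sketched as ranking models by root-mean-square error against an observed series. The series below are hypothetical anomalies, not real model output or observations. Here the ensemble mean scores worse than the best single model, but that happens by construction in this toy data. With real data, the outcome depends on the data.

```python
import math

def rmse(predicted, observed):
    """Root-mean-square error between two equal-length series."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))

def best_model(models, observed):
    """Return (name, rmse) for the model closest to the observations."""
    scores = {name: rmse(series, observed) for name, series in models.items()}
    name = min(scores, key=scores.get)
    return name, scores[name]

# Hypothetical temperature anomalies (deg C); illustrative only.
observed = [0.10, 0.12, 0.15, 0.13, 0.18]
models = {
    "model_a": [0.12, 0.16, 0.20, 0.22, 0.27],
    "model_b": [0.09, 0.13, 0.14, 0.15, 0.17],
    "model_c": [0.20, 0.28, 0.33, 0.40, 0.45],
}
ensemble_mean = [sum(vals) / len(vals) for vals in zip(*models.values())]

name, score = best_model(models, observed)
print(name, round(score, 3), round(rmse(ensemble_mean, observed), 3))
```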

Michel, you are right. Combining different models does not make things better.

Averaging garbage just produces average garbage.

The Russian model INM-CM4 runs at a much lower level than other GCMs. For me, its trend is not convincing. What is the cause of the very deep temperature decrease in 2005? That was during the pause. And what caused the strong temperature increase around 2015? If the reasons are the super El Niño and the strong SW radiation anomaly, then it is okay. The question is how the modelers could have known about these phenomena beforehand, or whether the model was run using the actual deviations of the climate.

Antero, the things you mention should be investigated, for sure. Throw away all the other models, they are completely different from the observations to date. Then give all the modelers copies of INM-CM4, they can each have a problem to investigate with the model. They can make multiple runs, tuning the parameters that affect their problem and discover why. That is how we did it.

You know, facts don’t really count in the modern world. That has been proven since the 1970s regarding “climate”.

And every nation will have a “carbon tax” — as a fact.

Andy, great work. You and WUWT may want to start a page that identifies stations with a long-term record that show no defined uptrend in temperatures.

Here is my first effort. The question needs to be asked: why are so many stations not showing warming even though CO2 has increased from 300 to 415 ppm? Do the laws of physics cease to exist at these locations? BTW, the screen was for weather stations with records back to 1900. There are only 1121 such stations. I’m up to 175 and counting, so over 15% of stations show no warming. It would be nice to get WUWT to recruit others to search for stations that show no warming trend.
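The commenter's screening method isn't stated. One possible approach is to fit an ordinary least-squares trend to each station's record and flag those at or below a chosen threshold. This is a hypothetical sketch of that idea. The station names, the data, and the 0.0 deg per decade threshold are all made up. A single linear fit also ignores station moves, instrument changes, and autocorrelation.

```python
def ols_slope(years, temps):
    """Ordinary least-squares slope of temperature against year (deg per year)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return sxy / sxx

def stations_without_warming(stations, threshold_per_decade=0.0):
    """Names of stations whose fitted trend is at or below the threshold (deg per decade)."""
    return sorted(name for name, (years, temps) in stations.items()
                  if ols_slope(years, temps) * 10 <= threshold_per_decade)

# Hypothetical stations sampled every 20 years from 1900 to 2020 (made-up values).
years = list(range(1900, 2021, 20))
stations = {
    "station_a": (years, [12.3, 12.1, 12.4, 12.0, 12.2, 11.9, 12.1]),
    "station_b": (years, [11.8, 11.9, 12.0, 12.2, 12.3, 12.5, 12.7]),
}
print(stations_without_warming(stations))
```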