Mar 09 2021

Reading Attraction in the Brain

I have been tracking the research in brain-machine interface (BMI), specifically with an eye towards studies that claim to interpret brain data. Typically I find that such studies are overhyped, at least in the press release and subsequent reporting. The question I always ask myself is: what exactly are they measuring and interpreting? A new study, using BMI and a form of AI called generative adversarial networks (GANs), claims to read brain data to determine what faces subjects find attractive. What are the researchers doing, and what are they not doing?

The ultimate goal of BMI research (or at least one goal) is to interpret brain activity so well that it is essentially mind-reading. For example, you might think of the word “cromulent”, and a machine reading the resulting brain activity would interpret it well enough to generate the word “cromulent” itself. This would make possible a fully functional digital-neural interface, like in The Matrix. To be clear, we are nowhere near this goal.

We have picked some of the low-hanging fruit, which are those areas of the brain that function through some form of topographic mapping. Vision is the most obvious example: if you are looking at the letter “F”, neurons in the visual cortex in the literal shape of an “F” will become active. Visual processing is much more complex than this, but at some level there is a bitmap-like level of representation. The motor and sensory parts of the brain follow somatotopic mapping (the so-called homunculus for each). There is also a map for auditory processing, organized by sound frequency, but it is more complex and we don’t fully understand it.

The big question is: what are the conceptual maps? Physical maps representing space, images, or even sound frequencies are easy to understand. What are the neural maps for words, feelings, or abstract concepts? Related to this is the concept of embodied cognition: that our reasoning derives ultimately from our understanding of the physical world. We use physical metaphors to represent abstract concepts. For example, an argument can be “strong” or “weak”, your boss is hierarchically “above” you, you may have “gone too far” with a wild idea that is a “stretch”. This may just be how languages evolved, but the idea of embodied cognition is that the language represents something deeper about how our brains work. Perhaps even abstract concepts are physically mapped in the brain, anchoring even our abstract thoughts to a physical reality. Perhaps embodied cognition is not absolute, but more of a bridge between the physical and the abstract, or a scaffold on which fully abstract ideas can be cortically mapped. We are a long way from sorting all this out.

So now we have a study that, on some level, is claiming to read physical attractiveness in brain activity. My immediate reaction to this claim was that it probably was not doing that, but rather was relying on a more accessible marker for attractiveness. After reading the study, I think that is exactly what is happening.

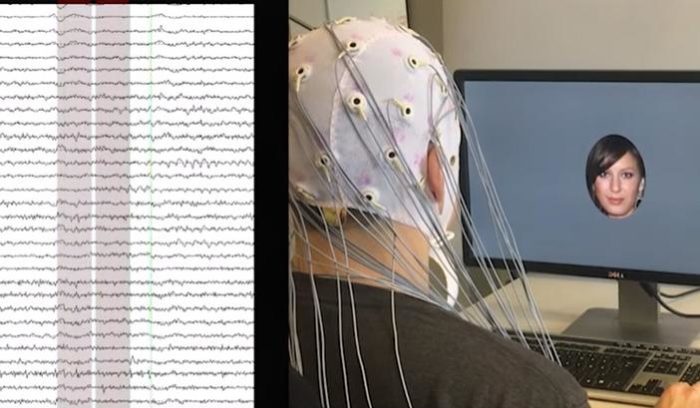

The authors recorded detailed EEG data from subjects while they looked at faces generated by a computer algorithm, with instructions to focus on the faces. The GAN was then used to determine whether each subject was mentally swiping right or left: the researchers looked at the subject’s “reaction” to each face to determine whether the subject found it attractive. Over multiple trials, the GAN then built up the parameters of what each subject found attractive. In a later validation test, the GAN predicted with 80% accuracy which faces the subjects would find attractive, and it generated new faces from the data that were designed to be attractive.
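To make the closed loop concrete, here is a toy simulation in Python. It is not the authors’ method. It assumes, purely for illustration, that each face is a point in a GAN’s latent space, that a simulated subject’s taste is a hidden direction in that space, and that an EEG classifier delivers a noisy binary like/dislike label for each face. The hypothetical `preference_vector` function averages the latent vectors of liked faces, and prediction checks which side of that direction a new face falls on.

```python
import random

LATENT_DIM = 8  # hypothetical latent size; real GANs use far larger latent vectors


def random_latent(rng):
    """Draw a latent vector of the kind a GAN would map to a face image."""
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def preference_vector(latents, labels):
    """Average the latents of faces whose decoded reaction was positive (label 1)."""
    positives = [z for z, y in zip(latents, labels) if y == 1]
    if not positives:
        raise ValueError("no positive reactions to learn from")
    return [sum(col) / len(positives) for col in zip(*positives)]


def predict(pref, z):
    """Predict a positive reaction if the face lies on the preferred side of the origin."""
    return 1 if dot(pref, z) > 0 else 0


def simulate(n_train=200, n_test=200, label_noise=0.1, seed=0):
    rng = random.Random(seed)
    # Hidden "true taste" of a simulated subject; the model never sees it directly.
    taste = random_latent(rng)

    def true_reaction(z):
        return 1 if dot(taste, z) > 0 else 0

    def decoded_reaction(z):
        # Stand-in for an EEG classifier: the true binary reaction,
        # flipped with probability label_noise to mimic decoding errors.
        y = true_reaction(z)
        return 1 - y if rng.random() < label_noise else y

    train = [random_latent(rng) for _ in range(n_train)]
    labels = [decoded_reaction(z) for z in train]
    pref = preference_vector(train, labels)

    # Validation: how often does the learned direction match the hidden taste?
    test = [random_latent(rng) for _ in range(n_test)]
    correct = sum(predict(pref, z) == true_reaction(z) for z in test)
    return pref, correct / n_test


pref, accuracy = simulate()
print(f"held-out accuracy: {accuracy:.2f}")
```

In a real system one could feed a vector like `pref` back through the generator to render a face designed to be attractive; this sketch has no generator. Note that the model only ever receives a binary reaction to each face, never a report of which features the subject likes.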

There is a subtle (perhaps) but very important distinction I feel needs to be made here. The BMI in this study is not reading from a person’s brain what facial features they find attractive. It is reading their reaction to seeing specific faces, and determining if it is positive or negative. This procedure would have worked without the BMI if the subjects literally just swiped right or left on the faces they were shown. So what does the BMI add?

The authors claim, although the study did not specifically look at this, that by using a BMI the GAN is fed more authentic data. If you simply ask subjects to swipe right or left, they are making a conscious decision, and that decision may be contaminated by social expectation and other factors. By using the EEG response, the hope is to capture the subject’s implicit, even subconscious, reaction and filter out any deception.

But again, that claim was not explicitly controlled for in this study, though it would be a good target for a follow-up study. The authors could, for example, compare the outcome of using the BMI with that of making a conscious selection. If the results are identical, then using the BMI offers no advantage and is just a complicated way of accomplishing something simple. If there is a difference, then we need to figure out what those differences are.
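One way to quantify such a comparison is to collect both label sets for the same faces and measure how often they agree. The sketch below uses raw agreement and Cohen’s kappa (agreement corrected for chance). The choice of statistic is an assumption, and the example labels are invented for illustration, not data from the study.

```python
def agreement(a, b):
    """Fraction of faces on which the two binary label sets agree."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Chance-corrected agreement for binary labels (1 = attractive, 0 = not)."""
    p_o = agreement(a, b)
    pa, pb = sum(a) / len(a), sum(b) / len(b)
    # Agreement expected if both methods labeled faces independently at these rates.
    p_e = pa * pb + (1 - pa) * (1 - pb)
    if p_e == 1:
        return 1.0  # both methods gave every face the same single label
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels for the same eight faces.
eeg_labels = [1, 1, 0, 0, 1, 0, 1, 0]    # decoded from the BMI
swipe_labels = [1, 1, 0, 0, 1, 0, 0, 1]  # conscious right/left swipes

print(agreement(eeg_labels, swipe_labels))     # 0.75
print(cohens_kappa(eeg_labels, swipe_labels))  # 0.5
```

A kappa near 1 would suggest the BMI adds little over simply asking; a substantially lower value would mean the two methods diverge, and the follow-up question would be which set of labels better reflects the subject’s actual preferences.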

To some degree, however, the study is a proof of concept. This may not be the best way to get at the objective of determining which faces subjects found most attractive. But it does demonstrate that a BMI can be used to accurately determine someone’s mental reaction to seeing an attractive face, and I think that is the real target of the study: the reaction. This is a legitimate incremental result, pushing our understanding of brain function and BMI one more baby step. I fear, though, that it may be misinterpreted in the media and by the public as a BMI reading from a subject’s brain what face they find attractive, a much more abstract and complex feat (by orders of magnitude) than just reading a binary reaction in the brain.

Make no mistake – BMI research is exciting and the current results are potentially very powerful. But hype tends to get a few decades ahead of reality, which then leads to disappointment in the public and the sense that it’s all a scam. Where are all those stem cell treatments we were promised 20 years ago? So it’s important, especially for journalists and science communicators, to understand where we are and how far we have to go.