Met police admits it lacks records of King's Cross face matches

London's Metropolitan Police Service says it does not have any records of the outcomes of a facial recognition tie-up with a private firm in the city.

Last month, it acknowledged it had shared people's pictures with the managers of the city's King's Cross Estate development.

It had previously denied the alliance.

In a new report, the Met added that it had only shared seven images and did not believe there had been similar arrangements with other private bodies.

It said the pictures were of "persons who had been arrested and charged/cautioned/reprimanded or given a formal warning" and had been provided by Camden Borough Police. The aim, it added, had been to "prevent crime, to protect vulnerable members of the community or to support the safety strategy".

But it admitted that it had no record of whether the estate manager's surveillance camera system had ever made facial matches of those involved, nor whether any police action had been taken as a result.

"The findings of this report need to be caveated by noting the limitations of technology which was not designed to be audited in this way, and the limitations of corporate memory," it explained.

It also confirmed that the facial recognition system in use at the estate was that of the Japanese firm NEC - something the management firm, Argent, had repeatedly declined to divulge itself.

NEC's systems have also been deployed by the Met as well as South Wales Police in their own trials of live facial recognition.

The Met also confirmed that the image-sharing arrangement had lasted between May 2016 and March 2018, and added that a new agreement had been put in place at the start of this year. However, it said no images had been shared under the new tie-up.

Argent had previously said it intended to launch a more advanced facial recognition system at the property but had yet to do so. It has since ditched the proposal.

The Met has again apologised for misinforming the mayor and members of London's Assembly about its involvement and blamed the mistake on the agreement having been struck at a "borough level".

London's deputy mayor for policing and crime, Sophie Linden, added that she had been informed that the police service had written to all the city's basic command units to make it "clear that there should be no local level agreements on the use of live facial recognition".

In a statement given to the BBC she added: "The Mayor and I are committed to holding the Met to account on its use of facial recognition technology and that's why the [Met's] commissioner agrees with us that there will be no further deployment anywhere in London until all of the conditions set out in the London Policing Ethics Panel report have been addressed."

Use of the tech was frozen earlier in the year before details of the King's Cross partnership emerged.

British Transport Police had previously confirmed it too had shared images with Argent for use in its facial recognition system.

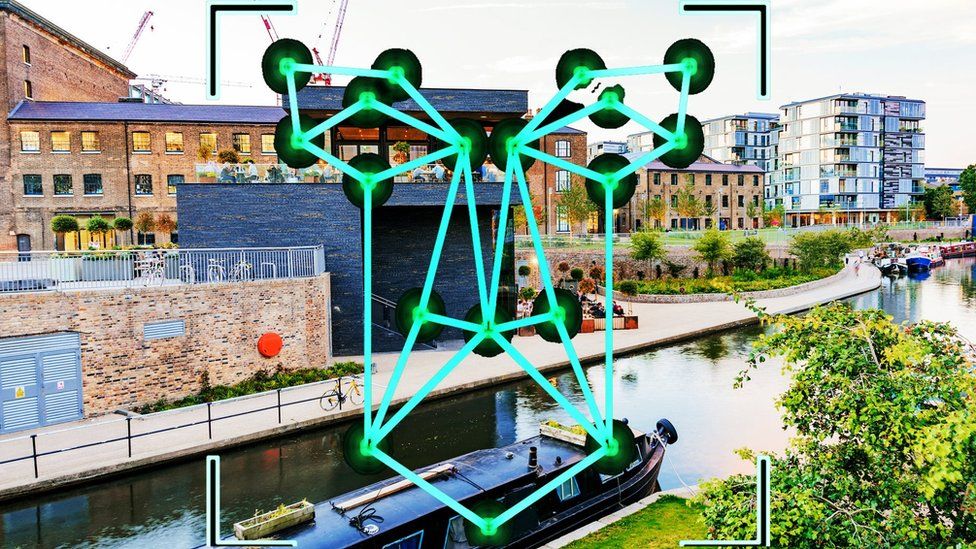

Privacy campaigners have raised concerns about the affair because it had not been apparent to the public that facial recognition scans were in use in what is a popular open-air site, home to shops, offices, education and leisure facilities.

Moreover, any formal tie-up between the police and an independent organisation concerning the use of facial recognition is supposed to be flagged to a surveillance camera commissioner. The watchdog previously blocked another similar arrangement involving police in Manchester and a local shopping centre.

In a related development, Argent has revealed further details of the scheme to Big Brother Watch after the privacy campaign group submitted a data subject access request.

Argent said it had:

- retained data from its facial recognition checks for a maximum of 30 days if there was a match, and instantly discarded information in relation to other scans

- used at least two security officers, one of whom was often a police employee, to confirm the matches

- used the Ministry of Justice's Criminal Justice Secure email service to transfer data to the two police forces involved

"The fact that police initially denied involvement and have few records about it shows how out of control facial recognition use is in this country," said Big Brother Watch's director Silkie Carlo.

The surveillance camera commissioner for England and Wales, who has also been looking into the matter, said he believed the case highlighted the need for the government to refresh a code of practice intended to give the public confidence in the use of the technology.

"The concern I've got about private organisations working with the police, is the lack of oversight," commented Tony Porter.

"Who is providing oversight to the watch list? Who's on the watch list? What's the standard of equipment as being used? And how do we know it's any good?

"Now, as a regulator, I cannot tell you the answer to those questions, because there are no standards to support it. And that in itself is a wrong position."

London Assembly member Sian Berry, who is co-leader of the Green Party of England and Wales, added that the Met's report "raises more questions than it answers".

"They have now admitted they also signed a new data-sharing agreement in January, claiming that facial recognition was included by mistake, but I want to know what else was being shared and why this was allowed to happen so recently, with so much concern about facial recognition amongst the public at this time," she said.
