For the last two years, Dr. Timnit Gebru ’08 M.S. ’10 Ph.D. ’15 served as the technical co-lead of Google’s Ethical Artificial Intelligence team. This team is an important part of Google, a company that has struggled with racial biases in its AI-powered search engines used by over a billion people. Dr. Gebru, a Black woman, also prominently advocated for underrepresented employees at Google, where only 3.7% of the workforce is Black. Most recently, she co-authored a paper highlighting risks from using large amounts of text data to train AI systems, such as the one that underpins Google’s search engine. But when a Google executive asked her to either retract the paper or remove her name from it, Dr. Gebru refused and was subsequently fired.

We stand in solidarity with Dr. Gebru alongside over 2,000 Google employees and 3,000 members of academia, industry, and civil society who have signed an open letter defending Dr. Gebru and calling on Google to increase transparency and integrity in its research. These signatories include distinguished Stanford scholars who study issues related to AI, such as Dr. Dan Jurafsky, a computer science professor and MacArthur Fellow, and Marietje Schaake, the international policy director at the Cyber Policy Center.

In the coming days, we hope to see additional support for Dr. Gebru from Stanford’s most prominent voices on the ethical, societal and technical aspects of AI.

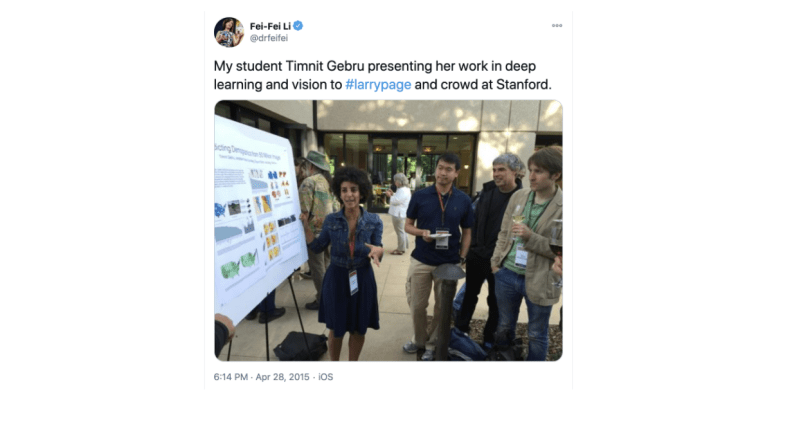

Dr. Gebru was not only one of Google’s preeminent AI researchers, but is also one of Stanford’s most prominent graduates trying to improve the ethical and societal effects of AI systems. She earned three degrees in electrical engineering from Stanford University, including a doctorate supervised by Dr. Fei-Fei Li. While at Stanford, she worked on a seminal paper that illustrated large racial and gender biases in AI-powered facial recognition systems, eventually leading to a significant reduction in facial recognition offerings by Amazon, Microsoft, and IBM. Dr. Gebru is a respected leader in her field, and her work has played a significant role in raising awareness about the flaws in AI systems at large. Stanford has devoted significant resources to tackling this issue through new initiatives like the Institute for Human-Centered Artificial Intelligence and the Ethics, Society, and Technology Hub, as well as courses such as CS 182: Ethics, Public Policy, and Technological Change.

As the co-presidents of the Stanford Public Interest Technology Lab — which is funded by the Ethics, Society, and Technology Hub — we advocate for the thoughtful development of technology. This includes initiatives that grapple with the ethical and societal implications of AI and confront the racial inequities perpetuated by new technologies. While we write this piece in our personal capacities, our opinions are shaped by experiences that exist, in part, because Dr. Gebru demonstrated the need for this work and Stanford prioritized funding for it.

Working on AI ethics requires engaging with ethics itself, which involves discerning right from wrong. Stanford has a stated commitment to intellectual honesty and integrity; however, one of its collaborators, Google, violated those principles in firing Dr. Gebru. Stanford’s computer science department, the Institute for Human-Centered Artificial Intelligence and Dr. Fei-Fei Li’s AI4ALL have called for increased diversity in tech, yet one of their financial supporters has created a culture that makes Black scholars feel “constantly dehumanized.” This discrepancy between the values of Stanford and the actions of its close partner, Google, is what concerns us — especially as Stanford strives to embed ethics into AI systems.

We write this piece out of our respect for Stanford, not in spite of it. We believe Stanford is a force for good when it lives up to its values. Stanford has pushed us to become more thoughtful and engaged citizens, and we hope to hold the institution to the same fundamental standard.

Standing in solidarity with Dr. Gebru sends a clear, resounding signal of our values. Publicizing this position is how we tell students, particularly students underrepresented in tech, that we have their backs when they expose flaws in AI systems. It’s how we ask alumni to fight for “ethical technology” even when what’s “ethical” conflicts with Google’s bottom line. And it’s how we tell Big Tech that academia can be an independent force that holds leaders accountable for trampling scientific integrity and dismissing Black scholars.

Stanford has created many successful programs to engage with the ethics of AI, and we applaud this progress. These efforts have given us the opportunity to help steer AI development toward the public interest. The students who strive to be Stanford’s next generation of “ethical technologists” are now watching how Stanford’s leaders and institutions in AI react to Dr. Gebru’s firing.

We look forward to seeing statements of solidarity from Stanford’s AI leaders and institutions, followed by actions that defend the integrity of research and the dignity of underrepresented researchers.

Nik Marda ’21 M.S. ’21 and Constanza Hasselmann ’21 M.S. ’22 are co-presidents of the Stanford Public Interest Technology Lab.

Contact Nik Marda at nmarda ‘at’ stanford.edu and Constanza Hasselmann at cbh21 ‘at’ stanford.edu