TensorFlow 2 review: Easier machine learning

Now more platform than toolkit, TensorFlow has made strides in everything from ease of use to distributed training and deployment


TensorFlow 2.0

The importance of machine learning and deep learning is no longer in doubt. After decades of promise, hype, and disappointment, both have led to practical applications. We haven’t gotten to the point where machine learning or deep learning applications are perfect, but many are very good indeed.


Of all the excellent machine learning and deep learning frameworks available, TensorFlow is the most mature, has the most citations in research papers (even excluding citations from Google employees), and has the best story about use in production. It may not be the easiest framework to learn, but it’s much less intimidating than it was in 2016. TensorFlow underlies many Google services.

The TensorFlow 2.0 website describes the project as an “end-to-end open source machine learning platform.” The upshot is that TensorFlow has become a more comprehensive “ecosystem of tools, libraries, and community resources” that help researchers build and deploy AI-powered applications.

There are four major parts to TensorFlow 2.0:

- TensorFlow core, an open source library for developing and training machine learning models;

- TensorFlow.js, a JavaScript library for training and deploying models in the browser and on Node.js;

- TensorFlow Lite, a lightweight library for deploying models on mobile and embedded devices; and

- TensorFlow Extended, a platform for preparing data, training, validating, and deploying models in large production environments.


The TensorFlow 2.0 ecosystem includes support for Python, JavaScript, and Swift, along with deployment to the cloud, browsers, and edge devices. TensorBoard (visualization) and TensorFlow Hub (model library) are useful tools. TensorFlow Extended (TFX) supports an end-to-end production pipeline.

In the past, I reviewed TensorFlow r0.10 (2016) and TensorFlow 1.5 (2018). Over the years, TensorFlow has evolved considerably from its beginnings as a machine learning and neural network library based on data flow graphs, with a steep learning curve and a low-level API. TensorFlow 2.0 is no longer too difficult for mere mortals: it now features a high-level Keras API as well as options for running in JavaScript, deploying on mobile and embedded devices, and operating in large production environments.

TensorFlow’s competition includes Keras (possibly with backends other than TensorFlow), MXNet (with Gluon), PyTorch, Scikit-learn, and Spark MLlib. The last two are primarily machine learning frameworks and lack facilities for deep learning.

You don’t have to choose just one. It’s perfectly reasonable to use multiple frameworks in a single pipeline, for example to prepare data with Scikit-learn and train a model with TensorFlow.
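To make that division of labor concrete, here is a minimal sketch, assuming scikit-learn and TensorFlow 2.x are both installed. The toy data set (three random numeric features and a made-up binary label) is purely an illustrative assumption; scikit-learn splits and standardizes the data, and tf.keras trains and evaluates the model.

```python
import numpy as np
import tensorflow as tf
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Toy data (an assumption for illustration): 3 numeric features, binary label.
rng = np.random.default_rng(42)
X = rng.normal(loc=50.0, scale=10.0, size=(200, 3)).astype(np.float32)
y = (X[:, 0] > 50.0).astype(np.int32)

# Scikit-learn prepares the data; the scaler is fit on the training split only.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)

# TensorFlow (via tf.keras) trains and evaluates the model.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(X_train_s, y_train, epochs=5, verbose=0)
loss, acc = model.evaluate(X_test_s, y_test, verbose=0)
print(f"test accuracy: {acc:.2f}")
```

Fitting the scaler only on the training split keeps test-set statistics from leaking into training, which is the usual reason to keep preprocessing in a separate, explicit step.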

TensorFlow core

TensorFlow 2.0 focuses on simplicity and ease of use, with updates like eager execution, intuitive higher-level APIs, and flexible model building on any platform. It’s worth examining the first two of these in more depth.

Eager execution

Eager execution means that TensorFlow operations run as soon as they are called, as opposed to adding nodes and edges to a graph to be run in a session later, which was TensorFlow’s original mode. For example, an early “Hello, World!” script for TensorFlow r0.10 looked like this:

$ python
...
>>> import tensorflow as tf
>>> hello = tf.constant('Hello, TensorFlow!')
>>> sess = tf.Session()
>>> print(sess.run(hello))
Hello, TensorFlow!
>>> a = tf.constant(10)
>>> b = tf.constant(32)
>>> print(sess.run(a + b))
42
>>> exit()

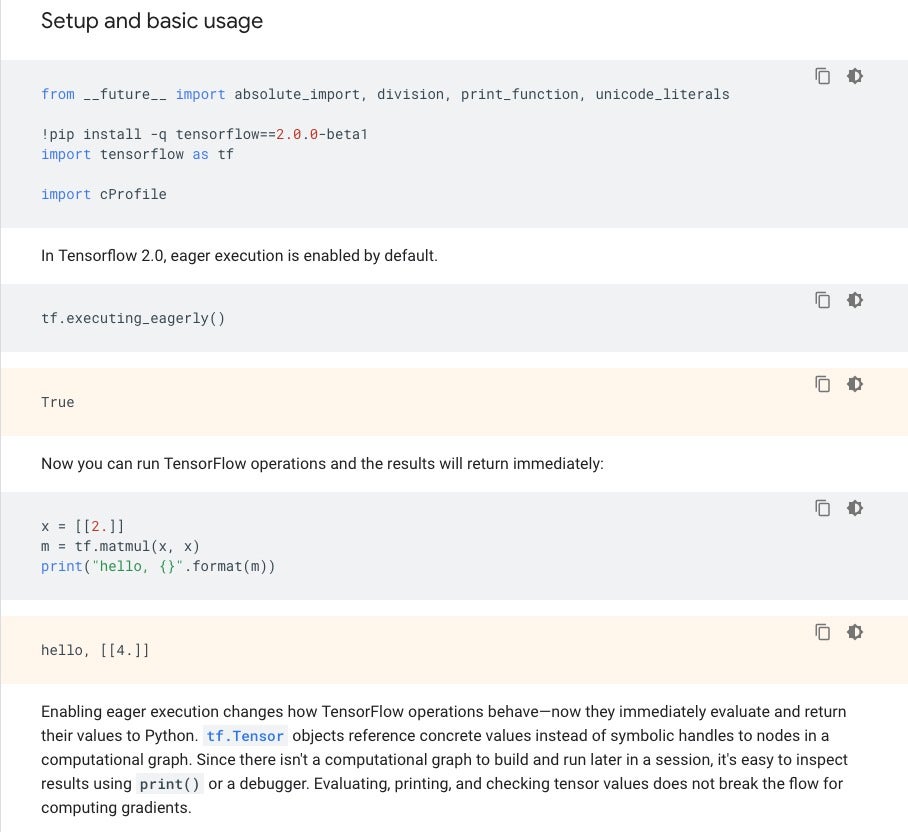

Note the use of tf.Session() and sess.run() here: nothing is computed until a session runs the graph. In TensorFlow 2.0, eager execution mode is the default, as shown in the example below.


Eager execution in TensorFlow 2.0. This notebook can run in Google Colab or in a Jupyter Notebook running elsewhere with the correct prerequisites installed.
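For comparison with the r0.10 transcript, the same computation in TensorFlow 2.x might look like the sketch below (assuming a TensorFlow 2.x install). There is no Session: each operation produces a concrete value immediately, which .numpy() exposes.

```python
import tensorflow as tf

# TensorFlow 2.x runs eagerly by default: each op executes as soon as it is called.
assert tf.executing_eagerly()

hello = tf.constant("Hello, TensorFlow!")
print(hello.numpy())   # string tensors hold bytes: b'Hello, TensorFlow!'

a = tf.constant(10)
b = tf.constant(32)
total = a + b          # already a concrete value, no Session needed
print(total.numpy())   # 42
```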

tf.keras

Both of the previous examples use the low-level TensorFlow API. The guidance for effective TensorFlow 2.0 is to use the high-level tf.keras APIs rather than the old low-level APIs; that will greatly reduce the amount of code you need to write. You can build Keras neural networks using one line of code per layer, or fewer if you take advantage of looping constructs. The example below demonstrates the Keras data set and Sequential model APIs, running in Google Colab, which is a convenient (and free) place to run TensorFlow samples and experiments. Note that Colab offers GPU and TPU instances as well as CPUs.


A TensorFlow notebook to train a basic deep neural network to classify MNIST handwritten digit images. This is a TensorFlow sample notebook running on Google Colab. Note the use of tf.keras.datasets to supply the MNIST images.

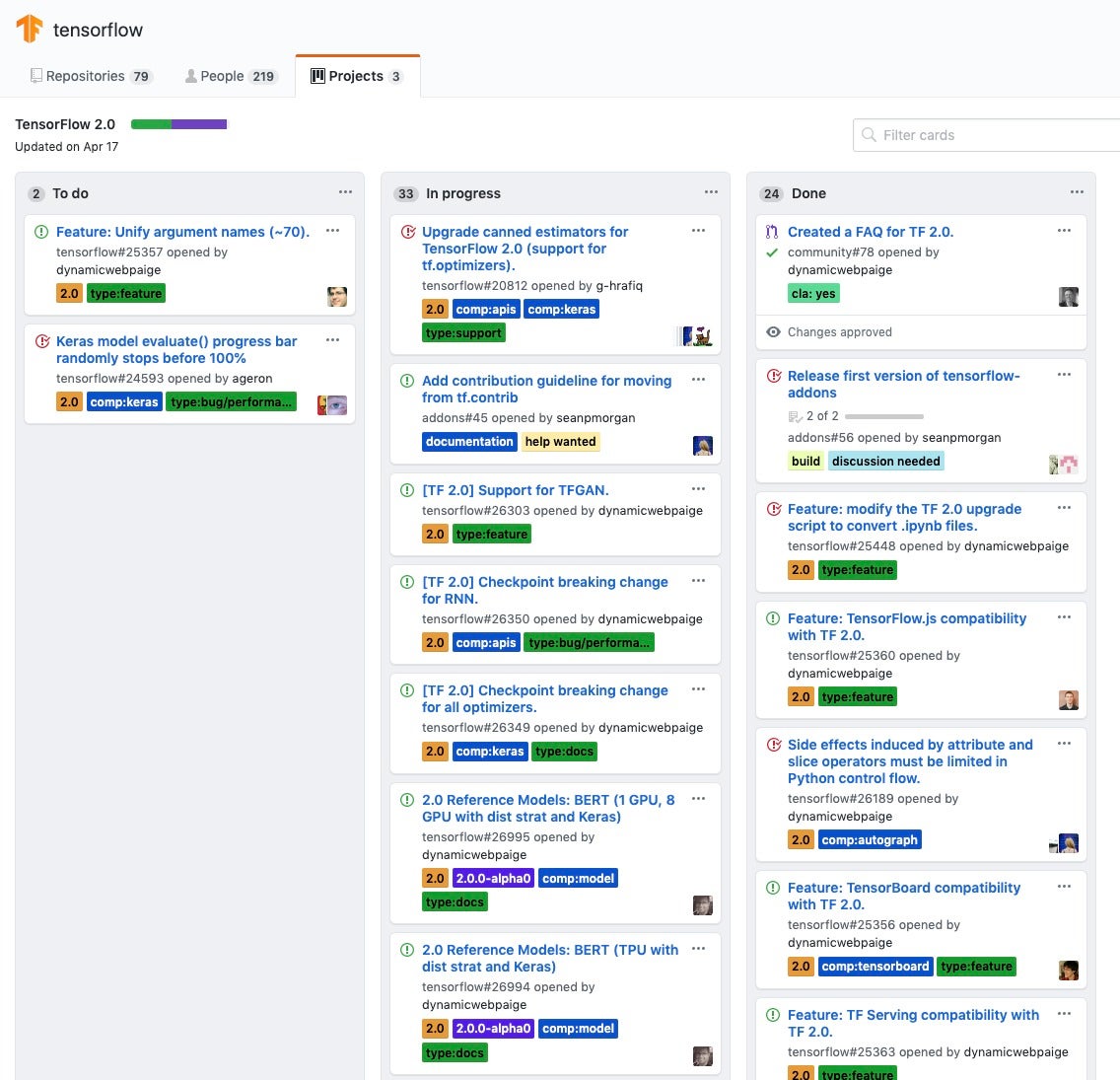
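A rough code equivalent of that notebook is sketched below, assuming TensorFlow 2.x. The layer sizes follow the common beginner MNIST layout, and random arrays stand in for the MNIST images so the sketch runs offline; both are assumptions rather than a copy of the notebook in the screenshot.

```python
import numpy as np
import tensorflow as tf

# Random arrays stand in for MNIST so the sketch runs offline; for real digits use
# (x_train, y_train), _ = tf.keras.datasets.mnist.load_data(); x_train = x_train / 255.0
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28), dtype=np.float32)
y_train = rng.integers(0, 10, size=256)

# One line of code per layer with the Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
history = model.fit(x_train, y_train, epochs=1, batch_size=32, verbose=0)

# Each prediction is a probability distribution over the 10 digit classes.
probs = model.predict(x_train[:5], verbose=0)
print(probs.shape)  # (5, 10)
```

On random labels the accuracy is meaningless; the point is how little code the Sequential API needs to define, compile, and train a network.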

Transition to TensorFlow 2.0

As of this writing, the transition from TensorFlow 1.14 to TensorFlow 2.0 is only partly complete, as shown in the screenshot below. There are currently two to-do items, 23 tasks in progress, and 34 completed tasks. The tasks in progress range from barely started (for example, when the assignee dropped out) to almost complete (the code works in the repository’s master branch but hasn’t been reviewed and deployed).


The state of the transition from TensorFlow 1.14 to TensorFlow 2.0 is tracked at https://github.com/orgs/tensorflow/projects/4. This screenshot was taken on June 21, but note that the page hadn’t been updated since April 17.

Upgrading models to TensorFlow 2.0

As is often the case with major version releases of open source projects, TensorFlow 2.0 introduces a number of breaking API changes that require you to upgrade your code. Fortunately, there is a Python code upgrade script, installed automatically with TensorFlow 2.0, and there is also a compatibility module (compat.v1) for API symbols that cannot be upgraded simply by string replacement. After running the upgrade script, your program might run on TensorFlow 2.0, but there will be references to the tf.compat.v1 namespace that you will want to fix at your leisure to keep the code clean. In addition, you can upgrade Jupyter notebooks in GitHub repos to TensorFlow 2.0.
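As an illustration of what compat.v1 code looks like, here is a hypothetical hand port of the earlier r0.10 example to TensorFlow 2.x (a sketch, not actual output of the upgrade script, which is invoked as tf_upgrade_v2 --infile old.py --outfile new.py). Building the ops inside an explicit tf.Graph keeps the TF1-style graph mode local, so eager execution stays on for the rest of the program.

```python
import tensorflow as tf

# TF1-style graph building, confined to an explicit Graph object.
graph = tf.Graph()
with graph.as_default():
    a = tf.constant(10)
    b = tf.constant(32)
    total = a + b  # a symbolic graph node, not a value yet

# tf.Session lives on as tf.compat.v1.Session in TensorFlow 2.x.
with tf.compat.v1.Session(graph=graph) as sess:
    result = sess.run(total)

print(result)  # 42
```

Rewriting this in the eager style, with no Graph or Session at all, is the kind of cleanup the paragraph above suggests doing at your leisure.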

Using tf.function

The downside of eager execution mode is that you may lose some of the speed of compiling and executing the flow graph. There’s a way to get that back without turning eager execution mode off entirely: tf.function.

When you annotate a function with @tf.function, it is compiled into a graph, and it and any functions it calls gain the benefits of (probably) faster execution, the ability to run on a GPU or TPU, and export to SavedModel. A convenient new feature of tf.function is AutoGraph, which automatically compiles Python control flow statements into TensorFlow control operations.
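A small sketch of AutoGraph at work, assuming TensorFlow 2.x: the Collatz step counter below is just an illustrative choice of loop. Because n is a tensor, AutoGraph converts the Python while statement into a tf.while_loop and the if statement into a tf.cond when the function is traced into a graph.

```python
import tensorflow as tf

@tf.function
def collatz_steps(n):
    """Count Collatz steps needed to reach 1; traced into a graph on first call."""
    steps = tf.constant(0)
    while n > 1:               # AutoGraph rewrites this as tf.while_loop
        if n % 2 == 0:         # ...and this as tf.cond
            n = n // 2
        else:
            n = 3 * n + 1
        steps += 1
    return steps

print(collatz_steps(tf.constant(6)).numpy())  # 8
```

Later calls with another scalar int32 tensor reuse the traced graph instead of re-running the Python code.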

Distributed training

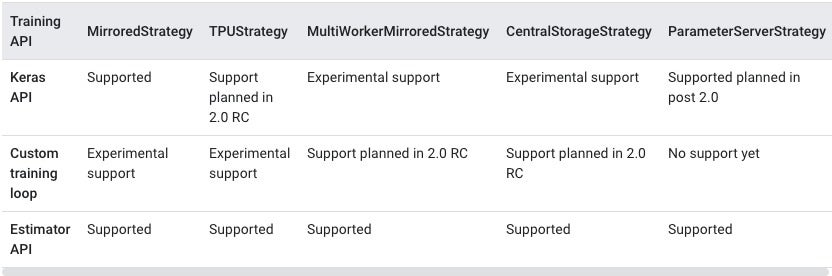

The last time I dove into TensorFlow, there were two ways to run distributed training: using an asynchronous parameter server, and using the third-party Horovod project, which is synchronous and uses an all-reduce algorithm. Now there are five native TensorFlow distribution strategies, and an API to select the one you want, tf.distribute.Strategy, which allows you to distribute training across multiple GPUs, multiple machines, or multiple TPUs. This API can also be used for distributing evaluation and prediction on different platforms.


TensorFlow now supports five native distribution strategies, with varying levels of support for the three training APIs in the TensorFlow 2.0 beta.
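Here is a minimal sketch of the tf.distribute.Strategy pattern with Keras, assuming TensorFlow 2.x. It uses MirroredStrategy (synchronous, all-reduce based, across the GPUs of one machine), and the toy regression data is an assumption for illustration. Other strategies follow the same create-a-strategy, build-inside-its-scope pattern, though multi-machine and TPU strategies also need cluster configuration.

```python
import numpy as np
import tensorflow as tf

# Synchronous data-parallel training on the visible GPUs of one machine;
# with no GPU it falls back to the CPU as a single replica.
strategy = tf.distribute.MirroredStrategy()
print("replicas:", strategy.num_replicas_in_sync)

# Variables created inside the scope are mirrored across the replicas.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")

# Toy regression data (an assumption): the target is the sum of the features.
x = np.random.default_rng(1).random((64, 4), dtype=np.float32)
y = x.sum(axis=1, keepdims=True)

# fit() splits each global batch across the replicas automatically.
history = model.fit(x, y, epochs=2, batch_size=16, verbose=0)
```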

TensorFlow.js

TensorFlow.js is a library for developing and training machine learning models in JavaScript and deploying them in a browser or on Node.js. A higher-level library built on top of TensorFlow.js, ml5.js, hides the complexities of tensors and optimizers.

In the browser, TensorFlow.js supports mobile devices as well as desktop devices. If your browser supports WebGL shader APIs, TensorFlow.js can use them and take advantage of the GPU. That can give you up to 100x speed-up compared to the CPU back-end. The TensorFlow.js demos run surprisingly quickly in the browser on a machine with a GPU.

On Node.js, TensorFlow.js can use an installed version of TensorFlow as a back-end, or run the basic CPU back-end. The CPU back-end is pure JavaScript and not terribly parallelizable.

You can run official TensorFlow.js models, convert Python models, use transfer learning to customize models to your own data, and build and train models directly in JavaScript.

TensorFlow Lite

TensorFlow Lite is an open source deep learning framework for on-device inference. In addition to Android, it can currently be built for iOS, ARM64, and Raspberry Pi.

The two main components of TensorFlow Lite are an interpreter and a converter. The interpreter runs specially optimized models on many different hardware types. The converter converts TensorFlow models into an efficient form for use by the interpreter, and can introduce optimizations to improve binary size and performance. There are pre-trained models for image classification, object detection, smart reply generation, pose estimation, and segmentation. There are also example apps for gesture recognition, image classification, object detection, and speech recognition.
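The converter-then-interpreter flow can be sketched in Python as follows, assuming a recent TensorFlow 2.x (the trackable_obj argument of from_concrete_functions is not present in the earliest 2.x releases). TinyClassifier is a hypothetical stand-in for a trained model; a Keras model could instead go through TFLiteConverter.from_keras_model. Optimize.DEFAULT asks the converter to apply its default size and latency optimizations.

```python
import numpy as np
import tensorflow as tf

class TinyClassifier(tf.Module):
    """Hypothetical 3-input, 2-class linear classifier standing in for a real model."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable([[0.5, -0.5], [1.0, 0.0], [0.0, 1.0]])
        self.b = tf.Variable([0.1, -0.1])

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 3], dtype=tf.float32)])
    def __call__(self, x):
        return tf.nn.softmax(tf.matmul(x, self.w) + self.b)

model = TinyClassifier()

# Converter: TensorFlow graph -> compact FlatBuffer for the TF Lite interpreter.
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [model.__call__.get_concrete_function()], trackable_obj=model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_bytes = converter.convert()

# Interpreter: loads the FlatBuffer and runs inference.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]

x = np.array([[0.2, 0.4, 0.6]], dtype=np.float32)
interpreter.set_tensor(input_index, x)
interpreter.invoke()
probs = interpreter.get_tensor(output_index)
print(probs)
```

On a phone or embedded board, the tflite_bytes would typically be saved to a .tflite file and loaded by the platform's interpreter API rather than by Python.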

TensorFlow Extended

TensorFlow Extended (TFX) is an end-to-end platform for deploying production machine learning pipelines. TFX is something to consider once you have trained a model. Pipelines include data validation, feature engineering, modeling, model evaluation, serving inference, and managing deployments to online, native mobile, and JavaScript targets. The diagram below shows how the components of the TFX pipeline fit together.


TensorFlow Extended block diagram.

Swift for TensorFlow

Swift for TensorFlow is a next-generation (and still unstable) platform for deep learning and differentiable programming. It has a high-level API for training that looks similar to Python TensorFlow, but it also has support for automatic differentiation built into a fork of the Swift compiler with the @differentiable attribute. Swift for TensorFlow can import and call Python code, which eases the transition from Python TensorFlow.

TensorFlow Tools

There are currently seven tools that support TensorFlow:

- TensorBoard, a set of visualization tools for TensorFlow graphs;

- TensorFlow Playground, an adjustable online neural network;

- Colab (Colaboratory), a free online Jupyter notebook environment;

- the What-If Tool, which helps you explore and debug models from TensorBoard, Colab, or Jupyter notebooks;

- MLPerf, a broad machine learning benchmark suite;

- XLA (Accelerated Linear Algebra), a domain-specific compiler for linear algebra that optimizes TensorFlow computations; and

- TFRC (TensorFlow Research Cloud), a cluster of more than 1,000 Cloud TPUs to which researchers can apply for free access.
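To give TensorBoard something to show, a program writes summary event files to a log directory. The sketch below, assuming TensorFlow 2.x, logs a made-up decreasing "loss" scalar with tf.summary; the temporary directory is an arbitrary choice for illustration.

```python
import os
import tempfile
import tensorflow as tf

# Write a few scalar summaries that TensorBoard can plot.
log_dir = tempfile.mkdtemp(prefix="tb_demo_")
writer = tf.summary.create_file_writer(log_dir)
with writer.as_default():
    for step in range(5):
        tf.summary.scalar("loss", 1.0 / (step + 1), step=step)
writer.flush()

# The writer produces an events.out.tfevents.* file in log_dir.
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```

Pointing TensorBoard at that directory (tensorboard --logdir followed by the path) then plots the loss curve. Keras users can get the same effect with the tf.keras.callbacks.TensorBoard callback.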

Overall, the TensorFlow 2.0 beta has come a long way in a number of directions. The core framework is easier to learn, use, and debug thanks to the tf.keras API and eager execution mode. You can selectively mark functions to be compiled into graphs. There are five ways to orchestrate distributed training and inference.

There is a complete set of components, TFX, for constructing machine learning pipelines, from data validation to inference model management. You can run TensorFlow.js in a browser or on Node.js. You can run TensorFlow Lite on mobile and embedded devices. And, finally, Swift for TensorFlow will open up new possibilities for model building.

—

Cost: Free open source.

Platform: Ubuntu 16.04 or later; Windows 7 or later; macOS 10.12.6 (Sierra) or later (no GPU support); Raspbian 9.0 or later.

Copyright © 2019 IDG Communications, Inc.