‘It’s able to create knowledge itself’: Google unveils AI that learns on its own, needs no human teacher

The 2016 version of the AlphaGo programme famously beat a human Go grandmaster – but the new, self-taught AlphaGo Zero is so good it beat the old programme 100-0

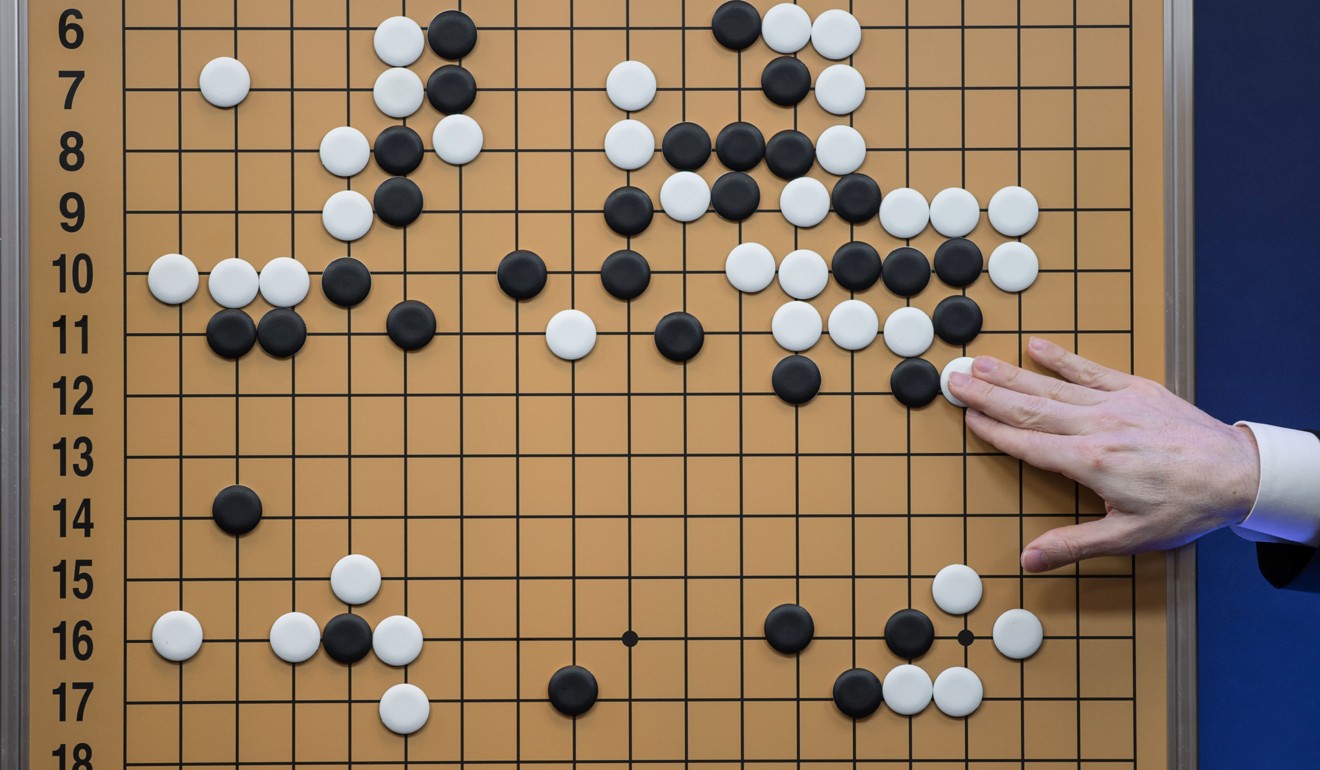

Google’s artificial intelligence group, DeepMind, has unveiled the latest incarnation of its Go-playing programme, AlphaGo – an AI so powerful that it derived thousands of years of human knowledge of the game before inventing better moves of its own, all in the space of three days.

Named AlphaGo Zero, the AI programme has been hailed as a major advance because it mastered the ancient Chinese board game from scratch, with no human help beyond being told the rules. In games against the 2016 version – which famously beat Lee Sedol, the South Korean grandmaster – AlphaGo Zero won 100 to 0.

At DeepMind, which is based in London, AlphaGo Zero is working out how proteins fold, a massive scientific challenge that could give drug discovery a sorely needed shot in the arm.

“For us, AlphaGo wasn’t just about winning the game of Go,” said Demis Hassabis, CEO of DeepMind and a researcher on the team. “It was also a big step for us towards building these general-purpose algorithms.”

Most AIs are described as “narrow” because they perform only a single task, such as translating languages or recognising faces, but general-purpose AIs could potentially outperform humans at many different tasks. In the next decade, Hassabis believes that AlphaGo’s descendants will work alongside humans as scientific and medical experts.

Previous versions of AlphaGo learned their moves by training on thousands of games played by strong human amateurs and professionals. AlphaGo Zero had no such help. Instead, it learned purely by playing against itself millions of times over. It began by placing stones on the Go board at random but swiftly improved as it discovered winning strategies.

“It’s more powerful than previous approaches because by not using human data, or human expertise in any fashion, we’ve removed the constraints of human knowledge and it is able to create knowledge itself,” said David Silver, AlphaGo’s lead researcher.

“It discovers some best plays, josekis, and then it goes beyond those plays and finds something even better,” said Hassabis. “You can see it rediscovering thousands of years of human knowledge.”

Eleni Vasilaki, professor of computational neuroscience at Sheffield University, said it was an impressive feat. “This may very well imply that by not involving a human expert in its training, AlphaGo discovers better moves that surpass human intelligence on this specific game,” she said. But she pointed out that, while computers are beating humans at games that involve complex calculations and precision, they are far from even matching humans at other tasks. “AI fails in tasks that are surprisingly easy for humans,” she said. “Just look at the performance of a humanoid robot in everyday tasks such as walking, running and kicking a ball.”

Tom Mitchell, a computer scientist at Carnegie Mellon University in Pittsburgh, called AlphaGo Zero an “outstanding engineering accomplishment”. He added: “It closes the book on whether humans are ever going to catch up with computers at Go. I guess the answer is no. But it opens a new book, which is where computers teach humans how to play Go better than they used to.”

The idea was welcomed by Andy Okun, president of the American Go Association: “I don’t know if morale will suffer from computers being strong, but it actually may be kind of fun to explore the game with neural-network software, since it’s not winning by out-reading us, but by seeing patterns and shapes more deeply.”

While AlphaGo Zero is a step towards a general-purpose AI, it can only work on problems that can be perfectly simulated in a computer, making tasks such as driving a car out of the question. AIs that match humans at a huge range of tasks are still a long way off, Hassabis said. More realistic in the next decade is the use of AI to help humans discover new drugs and materials, and crack mysteries in particle physics. “I hope that these kinds of algorithms and future versions of AlphaGo-inspired things will be routinely working with us as scientific experts and medical experts on advancing the frontier of science and medicine,” Hassabis said.