These companies owe much of their success so far to a long-dormant form of computation that models certain aspects of the brain. However, this form of computation pushes current hardware to its limits, since modern computers operate very differently from the gray matter in our heads. So, as programmers create “neural network” software to run on regular computer chips, engineers are also designing “neuromorphic” hardware that can imitate the brain more efficiently. Unfortunately, one type of neural net that has become the standard in image recognition and other tasks, the convolutional neural net, or CNN, has resisted replication in neuromorphic hardware.

That is, until recently.

IBM scientists reported in the Proceedings of the National Academy of Sciences that they’ve adapted CNNs to run on their TrueNorth chip. Other research groups have also reported progress on the problem. The TrueNorth system matches the accuracy of the best current systems in image and voice recognition, but it uses a small fraction of the energy and operates at many times the speed. Ultimately, combining convolutional nets with neuromorphic chips could create more than just a jargony mouthful; it could lead to smarter cars and to cellphones that efficiently understand our verbal commands, even when we have our mouths full.

Getting tech to learn more like humans

Traditionally, programming a computer has required writing step-by-step instructions. Teaching the computer to recognize a dog, for instance, might involve listing a set of rules to guide its judgment. Check if it’s an animal. Check if it has four legs. Check if it’s bigger than a cat and smaller than a horse. Check if it barks. Etc. But good judgment requires flexibility. What if a computer encounters a tiny dog that doesn’t bark and has only three legs? Maybe you need more rules, then, but listing endless rules and repeating the process for each type of decision a computer has to make is inefficient and impractical.
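To make the brittleness concrete, here is a minimal sketch of the rule-listing approach in Python. The field names and the weight cutoffs standing in for "bigger than a cat and smaller than a horse" are hypothetical, chosen only for illustration.

```python
# Hypothetical weight cutoffs (kg) standing in for "bigger than a cat,
# smaller than a horse". These numbers are illustrative assumptions.
CAT_KG = 4
HORSE_KG = 400


def looks_like_dog(animal):
    """Apply the hand-written checklist from the text, one rule per line."""
    return (
        animal["is_animal"]
        and animal["legs"] == 4
        and CAT_KG < animal["weight_kg"] < HORSE_KG
        and animal["barks"]
    )


labrador = {"is_animal": True, "legs": 4, "weight_kg": 30, "barks": True}
# A tiny, quiet, three-legged dog: still a dog, but it breaks three rules.
quiet_tripod = {"is_animal": True, "legs": 3, "weight_kg": 2, "barks": False}

print(looks_like_dog(labrador))
print(looks_like_dog(quiet_tripod))
```

The checklist accepts the Labrador and rejects the three-legged dog, and patching it means adding yet another special case by hand.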

Humans learn differently. A child can tell dogs and cats apart, walk upright, and speak fluently without being told a single rule about these tasks; we learn from experience. So computer scientists have long aimed to capture some of that magic by modeling software on the brain.

The brain contains about 86 billion neurons, cells that can connect to thousands of other neurons through elaborate branches. A neuron receives signals from many other neurons, and when the stimulation reaches a certain threshold, it “fires,” sending its own signal to surrounding neurons. A brain learns, in part, by adjusting the strengths of the connections between neurons, called synapses. When a pattern of activity is repeated, through practice, for example, the contributing connections become stronger, and the lesson or skill is inscribed in the network.

In the 1940s, scientists began modeling neurons mathematically, and in the 1950s they began modeling networks of them with computers. Artificial neurons and synapses are much simpler than those in a brain, but they operate by the same principles. Many simple units (“neurons”) connect to many others (via “synapses”), and each unit’s numerical value depends on the values of the units signaling to it, weighted by the numerical strength of the connections.
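A single artificial neuron of this kind can be sketched in a few lines of Python. This is a simplified threshold unit in the spirit of the early mathematical models, not the exact unit used in any particular modern network; the weights and threshold below are made-up values.

```python
def neuron_output(inputs, weights, threshold):
    """Return 1 ("fire") if the weighted sum of inputs reaches the threshold."""
    # Each incoming signal is scaled by the strength of its connection.
    stimulation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if stimulation >= threshold else 0


# Three incoming connections with illustrative strengths.
weights = [0.6, 0.9, 0.5]

# First and third inputs active: 0.6 + 0.5 = 1.1, which reaches the threshold.
print(neuron_output([1, 0, 1], weights, threshold=1.0))

# Only the second input active: 0.9 falls short, so the neuron stays silent.
print(neuron_output([0, 1, 0], weights, threshold=1.0))
```

Changing the weights changes which input patterns make the unit fire, which is how adjusting connection strengths can store what a network has learned.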

Artificial neural networks (sometimes just called neural nets) usually consist of layers. Visually depicted, information or activation travels from one column of circles to the next via lines between them. The operation of networks with many such layers is called deep learning—both because they can learn more deeply and because the actual network is deeper. Neural nets are a form of machine learning, the process of computers adjusting their behavior based on experience. Today’s nets can drive cars, recognize faces, and translate languages. Such advances owe their success to improvements in computer speed, to the massive amount of training data now available online, and to tweaks in the basic neural net algorithms.

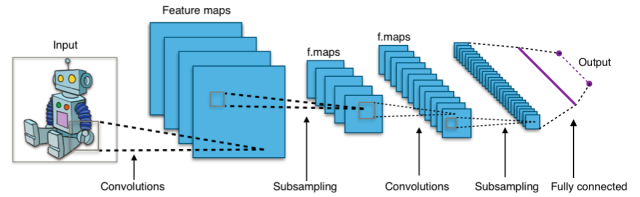

Convolutional neural nets are a particular type of network that has gained prominence in the last few years. CNNs extract important features from stimuli, typically pictures. An input might be a photo of a dog. This could be represented as a sheet-like layer of neurons, with the activation of each neuron representing a pixel in the image. In the next layer, each neuron will take input from a patch of the first layer and become active if it detects a particular pattern in that patch, acting as a kind of filter. In succeeding layers, neurons will look for patterns in the patterns, and so on. Along the hierarchy, the filters might be sensitive to things like edges of shapes, and then particular shapes, then paws, then dogs, until the network tells you whether it sees a dog or a toaster.
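The patch-by-patch filtering in that first step can be sketched in plain Python. The tiny 4×4 "image" and the hand-picked edge filter are illustrative assumptions; in a real CNN the filter values are learned, and many filters run side by side.

```python
def apply_filter(image, kernel):
    """Slide one filter over every patch of the image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum over the patch whose top-left corner is (r, c).
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            # The neuron is only "active" for positive matches.
            row.append(max(0, total))
        feature_map.append(row)
    return feature_map


# Dark left half (0), bright right half (1): one vertical edge in the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds when a patch is darker on the left than on the right.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = apply_filter(image, edge_kernel)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: active only where the edge sits
```

Note that the same four filter values are reused at every position, so the layer can find the edge wherever it appears in the picture.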

Critically, the internal filters don’t need to be programmed by hand to look for shapes or paws. You only need to present to the network inputs (pictures) and correct outputs (picture labels). When it gets it wrong, it slightly adjusts its connections until, after many, many pictures, the connections automatically become sensitive to useful features. This process resembles how the brain processes vision, from low-level details up through object recognition. Any information that can be represented spatially—two dimensions for photos, three for video, one for strings of words in a sentence, two for audio (time and frequency)—can be parsed and understood by CNNs, making them widely useful.
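The idea of nudging connections after each mistake can be illustrated with the classic perceptron update rule. This is a much simpler stand-in for the training procedures used in real CNNs, and the four two-value "pictures" with their labels are a toy dataset invented for the sketch.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted input plus bias is positive, else stay silent (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20):
    """Show each labeled example repeatedly, adjusting weights only after errors."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)  # -1, 0, or +1
            # Strengthen or weaken only the connections that carried a signal.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Toy "pictures" of two pixels each; the label is 1 only when both are on.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(examples)
print([predict(weights, bias, inputs) for inputs, _ in examples])
```

Nobody tells the unit what rule to apply. It starts with all connections at zero and settles on working values purely from labeled examples and corrections.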

Although Yann LeCun—now Facebook’s director of AI research—first proposed CNNs in 1986, they didn’t reveal their strong potential until a few adjustments were made in how they operated. In 2012, Geoffrey Hinton—now a top AI expert at Google—and two of his graduate students used a CNN to win something called the ImageNet Challenge, a competition requiring computers to recognize scenes and objects. In fact, they won by such a large margin that CNNs took over, and since then every winner has been a CNN.

Now, mimicking the brain is computationally expensive. Given that the human brain has billions of neurons and trillions of synapses, simulating every neuron and synapse is currently impossible. Even simulating a small piece of brain could require millions of computations for every piece of input.

Unfortunately, as noted above, convolutional neural nets require huge computing power. With many layers, and each layer applying the same feature filter repeatedly to many patches of the previous layer, today’s largest CNNs can have millions of neurons and billions of synapses. Running all of these little calculations does not suit classic computing architecture, which must process one instruction at a time. Instead, scientists have turned to parallel computing, which can process many instructions simultaneously.
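A rough back-of-the-envelope count shows how quickly the numbers grow. The sketch below assumes a single convolutional layer with stride 1 and no padding, and the layer sizes in the example are hypothetical rather than taken from any specific network.

```python
def conv_layer_size(in_h, in_w, in_channels, kernel, filters):
    """Count neurons, connections, and distinct weights in one conv layer."""
    out_h = in_h - kernel + 1
    out_w = in_w - kernel + 1
    # One neuron per filter per patch position.
    neurons = out_h * out_w * filters
    # Every neuron reads a kernel x kernel patch across all input channels.
    connections = neurons * kernel * kernel * in_channels
    # The same filter values are reused at every position.
    shared_weights = kernel * kernel * in_channels * filters
    return neurons, connections, shared_weights


# Hypothetical first layer: 224x224 color image, 64 filters of size 3x3.
neurons, connections, shared_weights = conv_layer_size(224, 224, 3, 3, 64)
print(f"{neurons:,} neurons")
print(f"{connections:,} connections")
print(f"{shared_weights:,} distinct weights")
```

Under these assumptions, one layer already has about 3 million neurons and 85 million connections, even though it stores fewer than 2,000 distinct weights. Each connection is a small multiplication that is independent of the others, which is why hardware that does many of them at once helps so much.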

Today’s advanced neural nets use graphics processing units (GPUs), the kind used in video game consoles, because they specialize in the kinds of mathematical operations that happen to be useful for deep learning. (Updating all the geometric facets of a moving object at once is a problem similar to calculating all the outputs from a given neural net layer at once.) But still, the hardware was not designed to perform deep learning as efficiently as a brain, which can drive a car and simultaneously carry on a conversation about the future of autonomous vehicles, all while using fewer watts than a light bulb.
