An example of the SDR-to-HDR tech; the right side of the image has been HDRified. (Image: Bcom)

The source SDR image. (Image: Bcom)

The fully HDRified image. (Well, this is just an example; showing an actual 10-bit HDR image via an 8-bit JPEG on your 8-bit screen is hard.) (Image: Bcom)

Bcom's SDR-to-HDR tech, running on an FPGA. (Image: Bcom)

Researchers at the French research institute Bcom, with the aid of a wunderkind plucked from a nearby university, have developed software that converts existing SDR (standard dynamic range) video into HDR (high dynamic range) video. That is, the software can take almost all of the colour video content produced by humanity over the last 80 years and widen its dynamic range, increasing the brightness, contrast ratio, and number of colours displayed on-screen. I've seen the software in action and interrogated the algorithm, and I'm somewhat surprised to report how good the content looks with an expanded dynamic range.

But garbage in, garbage out, right? You can't magically create more detail (or more colour data) in an image. Well, you can—Google produced detailed face images from pixellated source images—but philosophically it is no longer the same image. When a film is cropped for TV broadcast, or you receive a blocky low-bitrate stream from Netflix, or Flickr changes the JPEG profile on an uploaded photo... are those the same image as the artist/director/videographer intended? Or are they different?

Does it even matter? If you're a broadcaster with a ton of archived SDR footage and millions of colour-thirsty potential customers who might pay for a special HDR channel, surely the only question is whether it's technically possible to convert SDR content to HDR, and whether that converted footage is subjectively enjoyable to viewers. Remaining objectively faithful to the original is just an added bonus.

-

Some examples of the five different lighting styles.Bcom

-

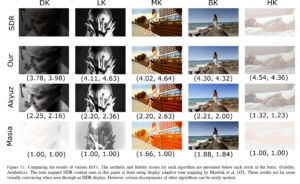

Some examples of different expansion operators (i.e. tone expansion algorithms). Top row is the source SDR image. Second row is Bcom's tech. Third and fourth rows are other methods that have been discussed/reviewed in the last couple of years.Bcom

-

Subjective evaluation of three different tone expansion operators by a bunch of human viewers.Bcom

Tone Expansion

Accurate colour reproduction via tone mapping and gamut mapping (altering the colours and brightness of an image so that they appear correctly on a certain type of display) is a mature and well-understood topic. Tone and gamut expansion, however, which means taking one shade of red, for example, and expanding it into multiple different shades, is a new topic with not much research behind it. What we do know, though, is that human perception of both contrast and colourfulness appears to be closely connected with luminance: these are the Stevens and Hunt effects respectively.

Conveniently, this ties in with one of the main improvements of HDR displays: while conventional SDR/HDTV content (and thus SDR displays) maxes out at around 100 nits of luminance (which is very dim), HDR-certified displays tend to be in the 1,000+ nits range. So, that's where Bcom's SDR-to-HDR work begins: by amping up the luminance of SDR images.
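To make "amping up the luminance" a bit more concrete, here is a minimal Python sketch of a generic power-law expansion operator. It is not Bcom's algorithm, which isn't described in enough detail here to reproduce; the function name `expand_luminance` and the `gamma` parameter are assumptions made purely for illustration. The only figures taken from the text are the roughly 100-nit SDR peak and the roughly 1,000-nit HDR peak.

```python
SDR_PEAK_NITS = 100.0    # approximate SDR peak quoted in the text
HDR_PEAK_NITS = 1000.0   # approximate HDR display peak quoted in the text


def expand_luminance(y_sdr, gamma=1.5, hdr_peak=HDR_PEAK_NITS):
    """Map normalised linear SDR luminance (0..1) to HDR luminance in nits.

    gamma is a hypothetical tuning knob, not a published Bcom parameter:
    1.0 is a plain linear stretch, while larger values keep shadows and
    mid-tones darker even though peak white still reaches hdr_peak.
    """
    if not 0.0 <= y_sdr <= 1.0:
        raise ValueError("y_sdr must be in the range [0, 1]")
    return hdr_peak * (y_sdr ** gamma)


if __name__ == "__main__":
    for y in (0.0, 0.18, 0.5, 1.0):
        sdr_nits = y * SDR_PEAK_NITS
        hdr_nits = expand_luminance(y)
        print(f"{y:.2f}: SDR {sdr_nits:6.1f} nits -> HDR {hdr_nits:6.1f} nits")
```

With `gamma` above 1, highlights get most of the extra brightness while darker regions are stretched far less, which is one simple way to avoid brightening the whole picture uniformly.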

The various tone expansion maps went through a few different rounds of testing with expert and non-expert human viewers to find the right luminance level. Too much luminance caused the HDR image to appear washed out compared to the SDR source, especially for dark-key and high-key material where there isn't much colour to begin with. But for most content, where there's a decent amount of colour, adding some luminance was the way to go.

The researchers also decided to apply a colour-correction filter after the tone expansion, which counteracts some of the desaturation that can be caused by increasing the luminance of the image. The filter causes slight changes in hue—a colour perceived as "light blue" might shift to "pastel blue"—but luminance, which "cannot be compromised," according to the researchers, is preserved.
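As a rough illustration of how saturation can be restored without touching luminance, here is a small Python sketch. It is an assumption-laden stand-in, not the researchers' filter: it works on linear RGB with Rec. 709 luminance weights, the names `luminance` and `boost_saturation` and the factor `s` are made up for this example, and it does not model the slight hue shifts mentioned above.

```python
# Rec. 709 luminance weights for linear RGB (an assumed colour space)
WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    """Weighted sum of linear R, G and B."""
    return sum(w * c for w, c in zip(WEIGHTS, rgb))


def boost_saturation(rgb, s=1.2):
    """Push each channel away from the pixel's luminance by a factor s.

    Because the weights sum to 1, the luminance of the result equals the
    luminance of the input, so only colourfulness changes. Values are not
    clipped here; a real pipeline would need to handle out-of-range output.
    """
    y = luminance(rgb)
    return tuple(y + s * (c - y) for c in rgb)


if __name__ == "__main__":
    pixel = (0.4, 0.5, 0.6)
    print(boost_saturation(pixel), luminance(pixel))
```

Setting `s` to 1 leaves the pixel unchanged, and a neutral grey stays grey for any `s`, since every channel already sits at the luminance value.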

The final mappings do a fine job maintaining the mood and artistic intent of the editor, director, and cinematographer—dark areas remain dark, light areas remain light (without blowing out). In my eyes-on experience, the results were good: the HDR images "popped," just as you'd expect. But I mostly looked at demo-reel type stuff of yachts crashing through sapphire waves and animals prowling through long grass; I would have liked to have seen something more representative of what might actually be broadcast on TV, like an episode of House or perhaps an old low-key movie.

But you need an FPGA...

Currently, Bcom's SDR-to-HDR tech runs in real time on a special FPGA-based device that broadcasters can purchase for a decent chunk of change, or in real time via PC software that leverages a beefy GPU.

The pseudo-HDR content is packaged for broadcast with HLG (hybrid log-gamma) and PQ (perceptual quantiser). HLG, which was developed by the BBC and its Japanese public broadcasting counterpart NHK, is currently the best way of receiving HDR content via over-the-air and cable TV signals. HLG is a single signal rather than two: the lower part of its range follows a conventional gamma-style curve that an SDR TV can display as-is, while the upper part uses a logarithmic curve to carry the extra highlight range. If the TV understands HLG, you get HDR; if not, you still get a watchable SDR picture. Hooray for backwards compatibility.
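For the curious, both HLG and PQ are transfer functions specified in ITU-R BT.2100 (PQ also appears as SMPTE ST 2084). The Python sketch below encodes linear light into each signal using the published constants; the function names `hlg_oetf` and `pq_encode` are my own, and this is a per-value illustration rather than anything resembling Bcom's broadcast pipeline.

```python
import math

# HLG OETF constants from ITU-R BT.2100
HLG_A = 0.17883277
HLG_B = 1.0 - 4.0 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4.0 * HLG_A)


def hlg_oetf(e):
    """Normalised scene-linear light (0..1) -> HLG signal (0..1)."""
    if e <= 1.0 / 12.0:
        # Square-root segment: behaves much like a conventional gamma curve
        return math.sqrt(3.0 * e)
    # Logarithmic segment: carries the highlights
    return HLG_A * math.log(12.0 * e - HLG_B) + HLG_C


# PQ constants from SMPTE ST 2084 / ITU-R BT.2100
PQ_M1 = 2610.0 / 16384.0
PQ_M2 = 2523.0 / 4096.0 * 128.0
PQ_C1 = 3424.0 / 4096.0
PQ_C2 = 2413.0 / 4096.0 * 32.0
PQ_C3 = 2392.0 / 4096.0 * 32.0


def pq_encode(nits):
    """Absolute display luminance in nits (0..10000) -> PQ signal (0..1)."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    yp = y ** PQ_M1
    return ((PQ_C1 + PQ_C2 * yp) / (1.0 + PQ_C3 * yp)) ** PQ_M2


if __name__ == "__main__":
    print("HLG signal at the segment boundary:", hlg_oetf(1.0 / 12.0))
    print("PQ signal at 100 nits:", pq_encode(100.0))
    print("PQ signal at 1,000 nits:", pq_encode(1000.0))
```

The two curves differ in kind: HLG is relative, so the signal describes scene light and the display decides how bright to make it, whereas PQ is absolute, so each code value corresponds to a specific number of nits up to 10,000.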

A competing standard, HDR10, uses a big old blob of static metadata at the start of the file to describe how the content as a whole should be rendered on an HDR display. HDR10 is perfect for video-on-demand services like Netflix or Amazon, but no good for TV broadcasts, where someone can tune in at any time and miss the metadata, or syndicated content, which might get chopped up into pieces or reordered. HDR10 is supported by more displays and has been adopted by more content providers, but HLG should gain some ground over the next couple of years as broadcasters hop on the HDR gravy train.
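Two of the values carried in HDR10's metadata, MaxCLL (maximum content light level) and MaxFALL (maximum frame-average light level), help show why the scheme suits finished files better than live or re-cut broadcasts: both are computed over the whole programme. The Python sketch below follows my understanding of the usual definitions; the function name and the list-of-tuples frame layout are assumptions made for illustration, and real tools work on decoded video rather than hand-typed pixels.

```python
def max_cll_and_fall(frames):
    """Compute (MaxCLL, MaxFALL) for a sequence of frames.

    frames: iterable of frames, where each frame is a list of (r, g, b)
    tuples holding linear light values in nits (a hypothetical layout).

    MaxCLL is the brightest single colour component anywhere in the
    programme; MaxFALL is the highest per-frame average of each pixel's
    brightest component.
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        peaks = [max(pixel) for pixel in frame]
        if not peaks:
            continue  # skip empty frames rather than divide by zero
        max_cll = max(max_cll, max(peaks))
        max_fall = max(max_fall, sum(peaks) / len(peaks))
    return max_cll, max_fall


if __name__ == "__main__":
    demo = [
        [(100, 50, 20), (300, 10, 10)],   # frame 1
        [(1000, 0, 0), (0, 0, 0)],        # frame 2
    ]
    print(max_cll_and_fall(demo))
```

Since neither number is known until every frame has been examined, chopping up or reordering the content, or joining it partway through, leaves the receiver with metadata that is missing or no longer matches what is on screen.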

Cost-wise, the price of Bcom's FPGA gizmo (a few thousand pounds) or a PC with discrete graphics (~£1,000) is nothing compared to the value of being able to convert old SDR content into pseudo-HDR content that can capitalise on the consumer adoption of HDR-capable displays. Broadcasters stand to make a lot of money from HDR-only channels that have true HDR content padded out with updynamicked SDR-to-HDR content.

Bcom isn't stopping there, though. The next step is to optimise the algorithm so that it runs at a decent speed on CPUs (it currently takes between three and 10 minutes to convert one minute of SDR footage), and a cloud-based service is being worked on. Other possibilities, such as a real-time converter attached to an existing SDR camera or simply licensing out the IP, are also being considered.

So, there you have it: you can't create more detail—the laws of reality have been preserved—but you can make an image brighter, and thus perceptually more colourful and enjoyable to watch. Whether you will want to watch the HDR version of your favourite SDR film or TV show, that's another question entirely—one that'll probably be answered sooner rather than later.
