Building a data science project and training a model is only the first step. Getting that model to run in the production environment is where companies often fail.

Indeed, integrating a model into the existing data science and IT stack is very complex for many companies. The reason? A disconnect between the tools and techniques used in the design environment and those used in the live production environment.

For over a year, we surveyed thousands of companies across all industries and levels of data science maturity about how they overcame these difficulties, and we analyzed the results. Here are the key things to keep in mind when you're working on your design-to-production pipeline.

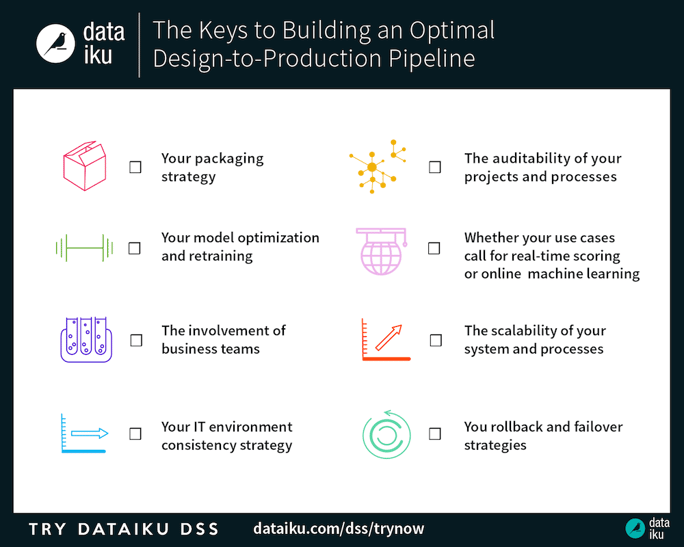

1. Your Packaging Strategy

A data project is a messy thing. It contains lots of data in many different formats stored in different places, plus lines and lines (and lines!) of code and scripts in different languages that turn that raw data into predictions. Packaging all of that together is tricky without a proper process for packaging code and data for production, especially once the project is producing predictions.

A typical release process should:

1. Use a version control tool to track code versions.

2. Use packaging scripts to bundle the code and data into a zip file.

3. Deploy into production.
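Step 2 can be sketched in Python as follows. This is a minimal illustration, not a prescribed tool: the function name `package_project`, the `code/` and `data/` archive layout, and the `MANIFEST.json` file are all hypothetical choices, and the `version` string is assumed to come from your version control tool (for example a Git tag or commit hash).

```python
import json
import zipfile
from pathlib import Path


def package_project(code_dir: Path, data_dir: Path, version: str, out_dir: Path) -> Path:
    """Bundle code and data into one versioned zip archive (hypothetical layout)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    archive = out_dir / f"project-{version}.zip"
    manifest = {"version": version, "files": []}
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # Store code under code/ and data under data/ inside the archive.
        for prefix, root in (("code", code_dir), ("data", data_dir)):
            for path in sorted(root.rglob("*")):
                if path.is_file():
                    arcname = f"{prefix}/{path.relative_to(root).as_posix()}"
                    zf.write(path, arcname)
                    manifest["files"].append(arcname)
        # The manifest ties every packaged file to the version it was built from.
        zf.writestr("MANIFEST.json", json.dumps(manifest, indent=2))
    return archive
```

A call such as `package_project(Path("src"), Path("data"), "v1.4.2", Path("dist"))` (illustrative paths) produces `dist/project-v1.4.2.zip`, which step 3 would then copy to the production environment.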

2. Your Model Optimization and Retraining

Models need to evolve constantly to adjust to new behaviors and changes in the underlying data, so small iterations are key to keeping predictions accurate in the long term. That makes it critical to have a process in place for retraining, validating, and deploying models.

The key to efficient retraining is to set it up as a distinct step of the data science production workflow. In other words, set up an automated command that retrains a candidate model weekly, scores and validates it, and swaps it into production after a simple verification by a human operator.
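One retraining cycle of that kind might look like the sketch below. It is deliberately framework-agnostic: `train_fn`, `score_fn`, and `approve_fn` are hypothetical callables you would supply (your training routine, a metric on a held-out validation set, and the human operator's sign-off), and `min_gain` is an assumed threshold for how much better the candidate must score before anyone is asked to approve it.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class RetrainResult:
    swapped: bool
    candidate_score: float
    production_score: float
    model: Any  # the model that serves predictions after this cycle


def retrain_cycle(
    train_fn: Callable[[], Any],
    score_fn: Callable[[Any], float],
    production_model: Any,
    approve_fn: Callable[[float, float], bool],
    min_gain: float = 0.0,
) -> RetrainResult:
    candidate = train_fn()  # retrain on the latest data
    cand_score = score_fn(candidate)  # score on held-out validation data
    prod_score = score_fn(production_model)
    passes_validation = cand_score >= prod_score + min_gain
    # Ask the human operator only when the candidate passes automatic validation.
    if passes_validation and approve_fn(cand_score, prod_score):
        return RetrainResult(True, cand_score, prod_score, candidate)
    return RetrainResult(False, cand_score, prod_score, production_model)
```

The weekly trigger itself would come from whatever scheduler you already use (cron, an orchestrator, and so on); keeping the cycle a single function makes it easy to run as that distinct workflow step.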

3. The Involvement of Your Business Teams

In our survey, we found a strong correlation between reporting many difficulties deploying into production and limited involvement of business teams.

Involving business teams is critical during development to ensure that the end product is understandable and usable by business users. Once the data product is in production, their involvement remains an important success factor: business users base their work on the model, so they need to be able to assess its performance. There are several ways to do this; the most popular is setting up live dashboards to monitor and drill down into model performance. Automatic emails with key metrics can be a safer way to make sure business teams have the information at hand.
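An automatic metrics email can be assembled with Python's standard `email` package, as in this sketch. The function name, subject format, and metric names are illustrative assumptions; sending is left to your mail setup, for example `smtplib.SMTP(host).send_message(msg)` against a hypothetical SMTP host.

```python
from email.message import EmailMessage


def build_metrics_email(
    metrics: dict[str, float], model_name: str, sender: str, recipients: list[str]
) -> EmailMessage:
    """Build (but do not send) a plain-text report of key model metrics."""
    msg = EmailMessage()
    msg["Subject"] = f"[{model_name}] Model performance report"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    lines = [f"Key metrics for {model_name}:", ""]
    # One bullet per metric, rounded so business readers are not buried in digits.
    for name, value in sorted(metrics.items()):
        lines.append(f"- {name}: {value:.3f}")
    msg.set_content("\n".join(lines))
    return msg
```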

4. Your IT Environment Consistency Strategy

Modern data science relies on several technologies such as Python, R, Scala, Spark, and Hadoop, along with open-source frameworks and libraries. This becomes a problem when production environments rely on technologies such as Java, .NET, and SQL databases, since the project may then have to be completely recoded.

The proliferation of tools also makes it harder to keep both the production and the design environments up to date with current versions and packages (a data science project can rely on up to 100 R packages, 40 Python packages, and several hundred Java/Scala packages). To manage this, two popular solutions are to maintain a common package list or to set up a virtual machine environment for each data project.
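If you choose the common package list, a small check run in both the design and the production environment can flag drift. This sketch uses the standard `importlib.metadata` module; the function name and the shape of the pinned list (a mapping from distribution name to exact version, such as an illustrative `{"pandas": "2.2.2"}`) are assumptions, and it covers only the Python part of the stack.

```python
from importlib.metadata import PackageNotFoundError, version


def check_against_common_list(pinned: dict[str, str]) -> dict[str, str]:
    """Return the packages whose installed version differs from the shared list."""
    mismatches = {}
    for name, expected in pinned.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = "not installed"
        if installed != expected:
            mismatches[name] = f"expected {expected}, found {installed}"
    return mismatches
```

An empty result means the current environment matches the shared list; any entry points to a package that needs upgrading, downgrading, or installing before the project is promoted.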

5. Your Rollback Strategy

Once efficient monitoring is in place, the next milestone is a rollback strategy to act on declining performance metrics. A rollback strategy is essentially an insurance plan in case your production environment fails.

A good rollback strategy has to cover all aspects of the data project, including the data, the data schemas, the transformation code, and the software dependencies. It also has to be accessible to users who aren't trained data engineers, so that the team can react quickly in case of failure.
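One way to make a rollback cover all of those aspects at once is to treat each release as a single record that pins every one of them, as in this sketch. `Release` and `ReleaseRegistry` are hypothetical names, and the fields are references (a commit hash, a data snapshot ID, a schema version, pinned dependencies) rather than the artifacts themselves.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Release:
    version: str
    code_commit: str  # transformation code
    data_snapshot: str  # data
    schema_version: str  # data schemas
    dependencies: Tuple[str, ...]  # software dependencies, e.g. "pandas==2.1"


@dataclass
class ReleaseRegistry:
    history: List[Release] = field(default_factory=list)

    def deploy(self, release: Release) -> None:
        self.history.append(release)

    @property
    def current(self) -> Optional[Release]:
        return self.history[-1] if self.history else None

    def rollback(self) -> Release:
        """Drop the latest release and return the previous one to restore."""
        if len(self.history) < 2:
            raise RuntimeError("no previous release to roll back to")
        self.history.pop()
        return self.history[-1]
```

Because `rollback()` takes no arguments, it could sit behind a single button or command, which is one way to keep the process usable by people who aren't data engineers.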

6. The Auditability of Your Projects and Processes

Being able to audit which version of the code produced each output is critical. Tracing a data science workflow matters if you ever need to investigate wrongdoing, prove that there was no illegal data use or privacy infringement, avoid sensitive data leaks, or demonstrate the quality and maintenance of your data flow.

The most common way to control versioning is (unsurprisingly) Git or SVN. Beyond code, keeping logs of changes to your database systems (including table creation, modifications, and schema changes) is also a best practice.
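A lightweight way to keep such a log is to route schema changes through a helper that records each statement, as in this SQLite sketch using Python's standard `sqlite3` module. The `schema_audit_log` table and `execute_logged_ddl` function are hypothetical; most production databases also offer their own auditing features, which may be preferable.

```python
import sqlite3
from datetime import datetime, timezone

AUDIT_DDL = """
CREATE TABLE IF NOT EXISTS schema_audit_log (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    executed_at TEXT NOT NULL,
    author TEXT NOT NULL,
    statement TEXT NOT NULL
)
"""


def execute_logged_ddl(conn: sqlite3.Connection, statement: str, author: str) -> None:
    """Apply a schema change, then record who ran it, when, and what it was."""
    conn.execute(AUDIT_DDL)
    conn.execute(statement)
    conn.execute(
        "INSERT INTO schema_audit_log (executed_at, author, statement) VALUES (?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), author, statement),
    )
    conn.commit()
```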

7. Whether Your Use Cases Call for Real-Time Scoring or Online Learning

Real-time scoring and online learning are increasingly popular for many use cases, including fraud prediction and pricing. Many companies that do scoring use a combination of batch and real-time scoring, or even real-time scoring alone. And more and more companies report using online machine learning.

These technologies complicate the production environment, rollback and failover strategies, deployment, and so on. However, they don't require a distinct process and stack, only adjustments to monitoring.
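As an example of such a monitoring adjustment, real-time scoring is usually watched per request rather than per batch. The sketch below keeps a rolling window of recent requests and raises alerts on the 95th-percentile latency and the error rate; the class name, window size, and thresholds are illustrative assumptions, not recommended values.

```python
import math
from collections import deque
from typing import List


class RollingMonitor:
    """Track recent real-time scoring requests in a fixed-size window."""

    def __init__(self, window: int = 1000, p95_limit_ms: float = 50.0, max_error_rate: float = 0.01):
        self.latencies: deque = deque(maxlen=window)  # oldest entries drop out automatically
        self.failures: deque = deque(maxlen=window)
        self.p95_limit_ms = p95_limit_ms
        self.max_error_rate = max_error_rate

    def record(self, latency_ms: float, failed: bool = False) -> None:
        self.latencies.append(latency_ms)
        self.failures.append(failed)

    def p95(self) -> float:
        ordered = sorted(self.latencies)
        rank = math.ceil(len(ordered) * 95 / 100)  # nearest-rank percentile
        return ordered[rank - 1]

    def error_rate(self) -> float:
        return sum(self.failures) / len(self.failures)

    def alerts(self) -> List[str]:
        if not self.latencies:
            return []
        out = []
        if self.p95() > self.p95_limit_ms:
            out.append(f"p95 latency {self.p95():.1f} ms exceeds {self.p95_limit_ms} ms")
        if self.error_rate() > self.max_error_rate:
            out.append(f"error rate {self.error_rate():.2%} exceeds {self.max_error_rate:.2%}")
        return out
```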

8. The Scalability of Your System and Processes

As your data science systems scale with increasing volumes of data and numbers of data projects, maintaining performance is critical. This means setting up a system that's elastic enough to handle significant transitions, not only in raw data volume or number of requests, but also in complexity and team size.

But scalability issues can also come unexpectedly from bins that aren't emptied, massive log files, or unused datasets. You can prevent them by having a strategy in place to inspect workflows for inefficiencies and to monitor job execution times.
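For the storage side of that inspection, a periodic scan can list oversized and long-untouched files for review. This sketch is an assumption-laden starting point: the function name and default thresholds are hypothetical, and it uses modification time as a proxy for "unused" because access times are not reliably updated on many systems. It does not cover job execution times.

```python
import time
from pathlib import Path
from typing import Dict, List


def find_cleanup_candidates(
    root: Path, max_bytes: int = 1_000_000_000, max_age_days: float = 90.0
) -> Dict[str, List[Path]]:
    """List files under root that are too large or not modified for too long."""
    cutoff = time.time() - max_age_days * 86400
    large, stale = [], []
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        st = path.stat()
        if st.st_size > max_bytes:  # e.g. massive log files
            large.append(path)
        if st.st_mtime < cutoff:  # e.g. datasets nobody has rewritten in months
            stale.append(path)
    return {"large": sorted(large), "stale": sorted(stale)}
```

The function only reports candidates; deciding what to delete or archive stays with the team that owns the data.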