Facebook will allow users to livestream attempts to self-harm because it “doesn’t want to censor or punish people in distress who are attempting suicide”, according to leaked documents.

However, the footage will be removed “once there’s no longer an opportunity to help the person” – unless the incident is particularly newsworthy.

The policy was formulated on the advice of experts, the files say, and it reflects how the social media company is trying to deal with some of the most disturbing content on the site.

The Guardian has been told that concern within Facebook about the way people are using the site has grown over the past six months.

For instance, moderators were recently told to “escalate” to senior managers any content related to 13 Reasons Why – a Netflix drama about the suicide of a high school student – because of fears it could inspire copycat behaviour.

Figures circulated to Facebook moderators appear to show that reports of potential self-harm on the site are rising. One document drafted last summer says moderators escalated 4,531 reports of self-harm in two weeks.

Sixty-three of these had to be dealt with by Facebook’s law enforcement response team – which liaises with police and other relevant authorities.

Figures for this year show 5,016 reports in one two-week period and 5,431 in another.

The documents show how Facebook will try to contact agencies to trigger a “welfare check” when it seems someone is attempting, or about to attempt, suicide.

A policy update shared with moderators in recent months explained the shift in thinking.

It says: “We’re now seeing more video content – including suicides – shared on Facebook. We don’t want to censor or punish people in distress who are attempting suicide. Experts have told us what’s best for these people’s safety is to let them livestream as long as they are engaging with viewers.

“However, because of the contagion risk [ie some people who see suicide are more likely to consider suicide], what’s best for the safety of people watching these videos is for us to remove them once there’s no longer an opportunity to help the person. We also need to consider newsworthiness, and there may be particular moments or public events that are part of a broader public conversation that warrant leaving up.”

Moderators have been told to “now delete all videos depicting suicide unless they are newsworthy, even when these videos are shared by someone other than the victim to raise awareness”.

The documents also tell moderators to ignore suicide threats when the “intention is only expressed through hashtags or emoticons” or when the proposed method is unlikely to succeed.

Any threat by a user to kill themselves more than five days in the future can also be ignored, the files say.

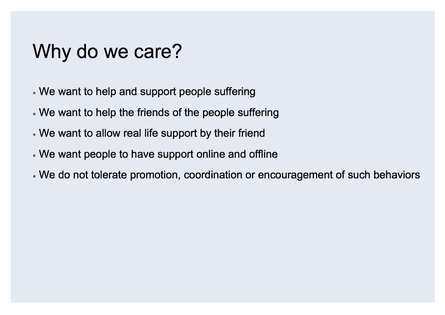

One of the documents says: “Removing self-harm content from the site may hinder users’ ability to get real-world help from their real-life communities.

“Users post self-destructive content as a cry for help, and removing it may prevent that cry for help from getting through. This is the principle we applied to suicidal posts over a year ago at the advice of Lifeline and Samaritans, and we now want to extend it to other content types on the platform.”

Monika Bickert, Facebook’s head of global policy management, defended leaving some suicide footage on the site. She said: “We occasionally see particular moments or public events that are part of a broader public conversation that warrant leaving this content on our platform.

“We work with publishers and other experts to help us understand what are those moments. For example, on 11 September 2001, bystanders shared videos of the people who jumped from the twin towers. Had those been livestreamed on Facebook that might have been a moment in which we would not have removed the content both during and after the broadcast. We more recently decided to allow a video depicting an Egyptian man who set himself on fire to protest rising government prices.”

She added: “In instances where someone posts about self-injury or suicide, we want to be sure that friends and family members can provide support and help.”