How smartphone cameras work - Gary explains

Now that smartphones have mostly replaced the point and shoot camera, mobile companies are scrambling to compete where the old imaging giants reigned supreme. In fact, smartphones have dethroned the most popular camera brands in large photo communities like Flickr, which is a big deal.

But how do you know which cameras are good? How do these tiny cameras work, and how do they seemingly squeeze blood from a stone to get good images? The answer is a lot of seriously impressive engineering, and managing the shortcomings of tiny camera sensor sizes.

How does a camera work?

With that in mind, let’s explore how a camera works. The process is the same for both DSLRs and smartphone cameras, so let’s dig in:

- The user (or smartphone) focuses the lens

- Light enters the lens

- The aperture determines the amount of light that reaches the sensor

- The shutter determines how long the sensor is exposed to light

- The sensor captures the image

- The camera’s hardware processes and records the image

Most of the items on this list are handled by relatively simple machines, so their performance is dictated by the laws of physics. That means that there are some observable phenomena that will affect your photos in fairly predictable ways.

For smartphones, most of the problems will arise in steps two through five because the lens, aperture, and sensor are very small, and therefore less able to get the light they need to get the photo you want. There are often tradeoffs that have to be made in order to get usable shots.

What makes a good photo?

I’ve always loved the “rain bucket” metaphor of photography that explains what a camera needs to do in order to properly expose a shot. From Cambridge in Colour:

Achieving the correct exposure is a lot like collecting rain in a bucket. While the rate of rainfall is uncontrollable, three factors remain under your control: the bucket’s width, the duration you leave it in the rain, and the quantity of rain you want to collect. You just need to ensure you don’t collect too little (“underexposed”), but that you also don’t collect too much (“overexposed”). The key is that there are many different combinations of width, time and quantity that will achieve this… In photography, the exposure settings of aperture, shutter speed and ISO speed are analogous to the width, time and quantity discussed above. Furthermore, just as the rate of rainfall was beyond your control above, so too is natural light for a photographer.

When we talk about a “good” or “usable” photo, we’re generally talking about a shot that was exposed properly—or in the metaphor above, a rain bucket that’s filled with the amount of water you want. However, you’ve probably noticed that letting your phone’s automatic camera mode handle all the settings is a bit of a gamble here: sometimes you’ll get a lot of noise, other times you’ll get a dark shot, or a blurry one. What gives? Setting aside the smartphone angle for a bit, it’s useful to understand what confusing numbers in the spec sheets mean before we proceed.

How does a camera focus?

Though the depth of field in a smartphone camera’s shot is typically very deep (making it very easy to keep things in focus), the very first thing you need the lens to do is move its focusing element to the correct position to get the shot you want. Unless you’re using a phone like the first Moto E, your phone has an autofocus unit. For the sake of brevity, we’ll rank the three main technologies by performance here.

- Dual-pixel

Dual-pixel autofocus is a form of phase-detect focus that uses far more focus points across the sensor than traditional phase-detect autofocus. Instead of dedicating certain pixels to focusing, each pixel is made up of two photodiodes that can compare subtle phase differences (mismatches between the light arriving from opposite sides of the lens) in order to calculate where to move the lens to bring an image into focus. Because the sample size is much larger, the camera can bring the image into focus more quickly. This is by far the most effective autofocus tech on the market.

- Phase-detect

Like dual-pixel AF, phase detect uses photodiodes spread across the sensor to measure differences in phase, then moves the focusing element in the lens to bring the image into focus. However, it relies on a limited number of dedicated photodiodes instead of using every pixel, meaning that it’s potentially less accurate and definitely slower. You won’t notice much of a difference, but sometimes a fraction of a second is all it takes to miss a perfect shot.

- Contrast detect

The oldest tech of the three, contrast detection samples areas of the sensor and racks the focus motor until a certain level of contrast from pixel to pixel is reached. The theory behind this is: hard, in-focus edges will be measured as having high-contrast, so it’s not a bad way for a computer to interpret an image as “in focus.” But moving the focus element until the maximum contrast is achieved is slow.
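To see why hunting for maximum contrast is slow, here's a minimal Python sketch of the idea. It is not how any particular phone implements autofocus: `simulated_capture` is a made-up stand-in for the sensor, the lens range of 0 to 100 is an assumption, and the contrast measure (squared differences between neighbouring pixels) is just one simple choice.

```python
def contrast_score(row):
    """Sum of squared differences between neighbouring pixel values."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))


def simulated_capture(lens_position, best_position=37):
    """Hypothetical sensor read-out: an edge that blurs as the lens
    moves away from best_position (an assumed value)."""
    blur = abs(lens_position - best_position) + 1
    # A step edge from 0 to 255, smeared across `blur` pixels.
    ramp = [255 * i / blur for i in range(blur + 1)]
    return [0.0] * 5 + ramp + [255.0] * 5


def contrast_autofocus(capture, start=0, stop=100):
    """Step the lens one position at a time until contrast starts to fall."""
    best_pos, best_score = start, contrast_score(capture(start))
    for pos in range(start + 1, stop + 1):
        score = contrast_score(capture(pos))
        if score < best_score:
            break  # contrast has peaked, so the previous position was sharpest
        best_pos, best_score = pos, score
    return best_pos
```

Calling `contrast_autofocus(simulated_capture)` has to take a fresh reading at every lens position on the way to the peak, then overshoot by one step to know it has passed it. Phase detection can skip that hunt because it estimates where to move the lens directly.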

What’s in a lens?

Unpacking the numbers on a spec sheet can be daunting, but thankfully these concepts aren’t as complicated as they may seem. The main focus (rimshot) of these numbers typically encompasses focal length, aperture, and shutter speed. Because smartphones eschew the mechanical shutter for an electronic one, let’s start with the first two items on that list.

While the actual explanation of focal length is more complicated, on a spec sheet it usually refers to the equivalent angle of view on the 35mm full-frame standard. While a camera with a small sensor may not actually have a 28mm focal length, if you see that listed on a spec sheet, it means that the image you get on that camera will have roughly the same field of view as a full-frame camera would with a 28mm lens. The longer the focal length, the more “zoomed in” your shot is going to be; and the shorter it is, the more “wide” or “zoomed out” it is. A 50mm lens on a full-frame camera is often said to roughly match the perspective of normal human vision, so any snapshot you took with one would look about as magnified as what you see normally.

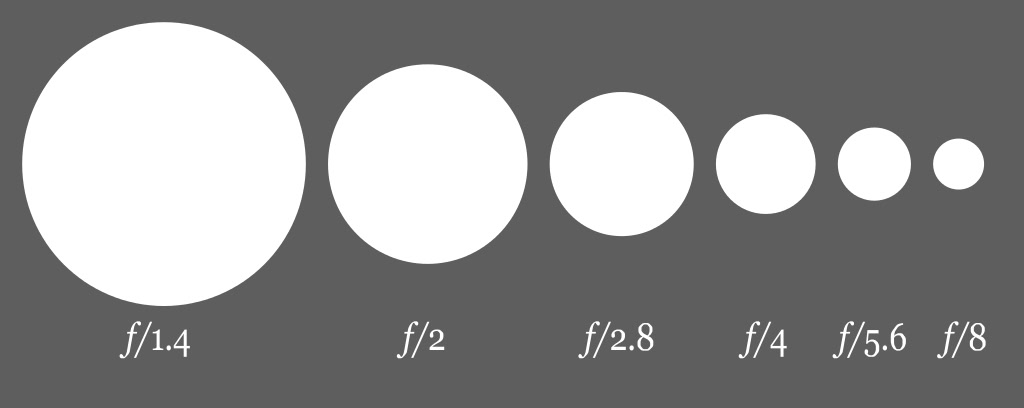

Now for the aperture: a mechanism that restricts how much light passes through the lens and into the camera itself, which also controls what’s called depth of field, or how much of the scene appears in focus. The more your aperture is closed in, the more of your shot will be in focus, and the more open it is, the less of your image will be in focus. Wide open apertures are prized in photography because they allow you to take photos with a pleasingly blurry background, highlighting your subject, while narrow apertures are great for things like macro photography, landscapes, etc.

So what do the numbers mean? In general, the lower the ƒ-stop is, the wider the aperture is. That’s because what you’re reading is actually a ratio: the ƒ-stop is the focal length divided by the diameter of the aperture opening. For example, a lens with a 50mm focal length and an opening of 10mm will be listed as ƒ/5. This number tells us a very important piece of information: how much light is making it to the sensor. When you narrow the aperture by a full “stop,” which multiplies the ƒ-number by the square root of 2 (ƒ/2 to ƒ/2.8, ƒ/4 to ƒ/5.6, etc.), you’ll be halving the light-gathering area.
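If you'd like to check the ƒ-stop arithmetic yourself, here's a small Python sketch. The function names are invented for illustration, and it assumes a perfectly circular aperture opening.

```python
import math


def f_number(focal_length_mm, aperture_diameter_mm):
    """The f-stop is the focal length divided by the aperture diameter."""
    return focal_length_mm / aperture_diameter_mm


def aperture_area_mm2(focal_length_mm, f_stop):
    """Area of the (assumed circular) opening for a given f-stop."""
    diameter = focal_length_mm / f_stop
    return math.pi * (diameter / 2) ** 2


def full_stop_scale(start=1.0, count=8):
    """Successive full stops: each one multiplies the f-number by sqrt(2)."""
    return [round(start * math.sqrt(2) ** i, 1) for i in range(count)]
```

Here `f_number(50, 10)` gives 5.0, matching the ƒ/5 example, and the opening at ƒ/2 works out to twice the area of the opening one full stop down. The values printed on lenses (ƒ/5.6, ƒ/11) are conventional roundings, so they differ slightly from what `full_stop_scale()` computes.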

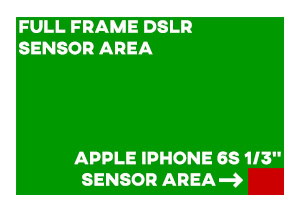

However, the same aperture ratio on differently-sized sensors doesn’t let in the same amount of light. By dividing the diagonal of a 35mm frame (about 43.3mm) by your sensor’s diagonal, you get a crop factor, and multiplying your ƒ-number by it tells you roughly which full-frame aperture would give the same depth of field as your smartphone. In the case of the iPhone 6S (sensor diagonal of ~8.32mm) with an aperture of ƒ/2.2, the crop factor is about 5.2, so its depth of field will be roughly equivalent to what you’d see in a full-frame camera set to around ƒ/11. If you’re familiar with the shots an iPhone 6S takes, you know that means very little blur in your backgrounds.
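To make the sensor-size arithmetic concrete, here's a minimal Python sketch. The function names are made up for illustration, and it assumes the common rule of thumb of multiplying the ƒ-number by the ratio of the two sensor diagonals. Plugging in the ~8.32mm diagonal quoted above gives an equivalent of around ƒ/11; a smaller sensor diagonal would push that number higher.

```python
import math

# A 35mm full frame measures 36mm x 24mm, so its diagonal is about 43.27mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def crop_factor(sensor_diagonal_mm):
    """How many times smaller the sensor diagonal is than full frame."""
    return FULL_FRAME_DIAGONAL_MM / sensor_diagonal_mm


def equivalent_f_number(f_stop, sensor_diagonal_mm):
    """Full-frame f-stop with roughly the same depth of field (rule of thumb)."""
    return f_stop * crop_factor(sensor_diagonal_mm)


def stops_between(f_stop_a, f_stop_b):
    """Number of full stops separating two f-numbers."""
    return 2 * math.log2(f_stop_b / f_stop_a)
```

For example, `equivalent_f_number(2.2, 8.32)` lands between ƒ/11 and ƒ/12, and `stops_between(2.2, equivalent_f_number(2.2, 8.32))` shows that gap is a little under five stops.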

Electronic shutters

After the aperture, shutter speed is the next important exposure setting to get right. Have it too slow and you’ll get blurry images, and have it too fast and you run the risk of underexposing your snap. While this setting is handled for you by most smartphones, it’s worthy of discussion anyway so you understand what might go wrong.

Much like the aperture, shutter speed is listed out by “stops,” or settings that mark an increase or decrease in light gathering by 2x. A 1/30th second exposure is a full stop brighter than a 1/60th sec. exposure, and so on. Because the main variable you’re changing here is the time the sensor is recording the image, the pitfalls of choosing the wrong exposure here are all related to recording an image for too long or too short. For example, a slow shutter speed may result in motion blur, while a fast shutter speed will seemingly stop action in its tracks.
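Stops are easy to count in code. This short Python sketch uses invented function names and assumes the usual rule that doubling the exposure time adds one stop of light.

```python
import math


def stops_brighter(exposure_a_s, exposure_b_s):
    """How many stops more light exposure A gathers than exposure B."""
    return math.log2(exposure_a_s / exposure_b_s)


def compensating_shutter(shutter_s, f_stop_from, f_stop_to):
    """Shutter time that keeps the total exposure constant after
    changing the aperture from one f-stop to another."""
    return shutter_s * (f_stop_to / f_stop_from) ** 2
```

`stops_brighter(1/30, 1/60)` comes out to one stop, as described above. And if you close the aperture from ƒ/2 to ƒ/4 (two stops), `compensating_shutter(1/60, 2.0, 4.0)` says you'd need to slow the shutter to 1/15th of a second to collect the same amount of light.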

Given that smartphones are very tiny devices, it shouldn’t be any surprise that the last mechanical camera part before the sensor, the shutter, has been omitted from their designs. Instead, they use what’s called an electronic shutter (E-shutter) to expose your photos. Essentially, your smartphone tells the sensor to record your scene for a given time, reading it out row by row from top to bottom. While this is quite good for saving weight, there are tradeoffs. For example, if you shoot a fast-moving object, the sensor will record different parts of it at different points in time (due to the readout speed), skewing the object in your photo.
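Here's a rough Python sketch of why a top-to-bottom readout skews moving objects. The function names and the numbers in the example are assumptions for illustration, not measurements from any real phone.

```python
def row_capture_times(rows, readout_time_s):
    """Moment each row is read, spread evenly over the readout time."""
    return [row * readout_time_s / (rows - 1) for row in range(rows)]


def rolling_shutter_skew_px(object_speed_px_per_s, readout_time_s):
    """Sideways offset between the top and bottom rows of a moving object."""
    return object_speed_px_per_s * readout_time_s
```

With a hypothetical readout time of 1/30th of a second, an object crossing the frame at 3,000 pixels per second ends up about 100 pixels further along in the bottom row than in the top row, which is what makes it look slanted.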

The shutter speed is usually the first thing the camera will adjust in low light, but the other variable it will try to adjust is sensitivity, mostly because if your shutter speed is too slow, even the shake from your hands will be enough to make your photo blurry. Some phones have a compensation mechanism called optical image stabilization (OIS) to combat this: by moving the sensor or lenses in certain ways to counteract your movements, it can eliminate some of this blurriness.

What is camera sensitivity?

When you adjust camera sensitivity (ISO), you’re telling your camera just how much it needs to amplify the signal it records in order to make the resulting picture bright enough. However, the direct consequence of this is more visible noise, because the noise gets amplified right along with the signal.

Ever look at a photo you took and see a ton of multicolored dots or grainy-looking errors all over the place? That’s the expression of Poisson noise, also known as shot noise. Essentially, what we perceive to be brightness in a photo is the relative number of photons that bounce off the subject and get recorded by the sensor. The lower the amount of actual light hitting the subject, the more the sensor has to apply gain to create a “bright” enough image. When this happens, tiny variations in pixel readings will be made much more extreme, making noise more visible.

Now, that’s the main driver behind grainy pictures, but noise can also come from things like heat, electromagnetic (EM) interference, and other sources. You can expect a certain dropoff in image quality if your phone overheats, for example. If you want less noise in your photos, the go-to solution is usually to grab a camera with a larger sensor because it can capture more light at once. More light means less gain needed to produce a picture, and less gain means less noise overall.

As you can imagine, a smaller sensor tends to display more noise because of the lower levels of light it can collect. It’s a lot tougher for your smartphone to produce a quality shot with the same amount of light than it is for a more serious camera because it has to apply a lot more gain in more situations to get a comparable result—leading to noisier shots.
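A little arithmetic shows why more light means cleaner shots. This Python sketch uses invented function names and considers only shot noise, where the random variation in a reading of N photons is about the square root of N. Real sensors add other noise sources on top.

```python
import math


def shot_noise_snr(photons):
    """Signal-to-noise ratio when noise is sqrt(N): N / sqrt(N)."""
    return photons / math.sqrt(photons)


def amplify(signal, noise, gain):
    """Gain scales the signal and the noise by the same factor."""
    return signal * gain, noise * gain
```

A pixel that collects 10,000 photons has a signal-to-noise ratio of 100, while one that collects only 100 photons manages just 10. Boosting the dim pixel with `amplify(100, 10, 100)` makes it as bright as the first one, but the noise grows by the same factor, so the ratio stays stuck at 10.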

Cameras will usually try to fight this in the processing stage by using what’s called a “noise reduction algorithm” that attempts to identify and delete noise from your photos. While no algorithm is perfect, modern software does a fantastic job of cleaning up shots (all things considered). However, sometimes over-aggressive algorithms can reduce sharpness accidentally. If there’s enough noise, or your shot is blurry, the algorithm will have a tough time figuring out what’s unwanted noise and what’s a critical detail, leading to blotchy-looking photos.
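Phone makers don't publish their noise reduction algorithms, so as a stand-in here's a sketch of a classic median filter in Python, working on a single row of pixel values. It's far simpler than what a real phone runs, but it shows the tradeoff nicely.

```python
from statistics import median


def median_filter(values, radius=1):
    """Replace each value with the median of its neighbourhood."""
    out = []
    for i in range(len(values)):
        lo = max(0, i - radius)
        hi = min(len(values), i + radius + 1)
        out.append(median(values[lo:hi]))
    return out
```

Feed it `[10, 10, 200, 10, 10]` and the stray 200 is flattened to 10. That's great if the spike was noise, but the filter has no way of knowing whether it was actually a tiny, real highlight, which is exactly how fine detail gets smoothed away.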

More megapixels, more problems

When people look to compare cameras, a number that stands out in the branding is just how many megapixels (one megapixel is 1,000,000 individual pixels) the product has. Many assume that the more megapixels something has, the more resolution it’s capable of, and consequently the “better” it is. However, this spec is very misleading because the pixel size matters a great deal.

Modern digital camera sensors are really just arrays of many millions of even tinier light sensors. However, there’s an inverse relationship between number of pixels and pixel size for a given sensor area: the more pixels you cram in, the smaller (and therefore less capable of gathering light) they are. At the same resolution, a full-frame sensor with a light-collecting surface area of about 860 square millimeters will always be able to gather more light than the ~17 square millimeter iPhone 6S sensor because its pixels will be much larger (roughly 8.5µm across versus about 1.2µm at 12MP).
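The pixel-size comparison can be worked out in a few lines of Python. This sketch uses invented function names and assumes square pixels that cover the whole sensor with no gaps, which real sensors only approximate. Dividing the sensor area by the pixel count gives each pixel's area, and the square root of that gives its width.

```python
import math


def pixel_area_um2(sensor_area_mm2, pixel_count):
    """Area of one pixel in square microns (1 mm^2 = 1,000,000 um^2)."""
    return sensor_area_mm2 * 1_000_000 / pixel_count


def pixel_pitch_um(sensor_area_mm2, pixel_count):
    """Width of one (assumed square) pixel in microns."""
    return math.sqrt(pixel_area_um2(sensor_area_mm2, pixel_count))
```

For a 12MP full-frame sensor, `pixel_area_um2(860, 12_000_000)` is about 72 square microns, or roughly 8.5µm across. The same 12 million pixels on a 17 square millimeter sensor come out at about 1.2µm across, so each full-frame pixel has around 50 times the light-collecting area.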

On the other hand, if you’re able to make your individual pixels relatively large, you can collect light more efficiently even if your overall sensor size isn’t all that big. So if that’s the case, how many megapixels is enough? Far less than you think. For example, a still from a 4K UHD video is roughly 8MP, and a full HD video image is only about 2MP per frame.

But there is a benefit to increasing resolution a little bit. The Nyquist Theorem teaches us that an image will look substantially better if we record it at twice the maximum dimensions of our intended medium. With that in mind, a 5×7″ photo in print quality (300 DPI) would need to be shot at 3000 x 4200 pixels for best results, or about 12MP. Sound familiar? This is one of the many reasons why Apple and Google seem to have settled on the 12MP sensor: it’s enough resolution to oversample most common photo sizes, but low-res enough to manage the shortcomings of a small sensor.
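The resolution figures from the last two paragraphs are easy to verify. In this Python sketch, the function names are my own and the 2x oversampling factor is the rule of thumb described above.

```python
def megapixels(width_px, height_px):
    """Pixel count expressed in millions of pixels."""
    return width_px * height_px / 1_000_000


def oversampled_print_pixels(width_in, height_in, dpi=300, oversample=2):
    """Pixel dimensions needed to oversample a print of the given size."""
    return (width_in * dpi * oversample, height_in * dpi * oversample)
```

A 4K UHD frame (`megapixels(3840, 2160)`) is about 8.3MP and a full HD frame (`megapixels(1920, 1080)`) is about 2.1MP. For a 5×7″ print, `oversampled_print_pixels(5, 7)` returns 3000 by 4200 pixels, which is 12.6MP.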

After the shot is taken

Once your camera takes the shot, the smartphone has to make sense of everything it just captured. Essentially, the processor now has to piece together all the information the sensor’s pixels recorded into a mosaic that most people just call “a picture.” While that doesn’t sound terribly exciting, the job is a little more complicated than simply recording the light intensity values for every pixel and dumping that into a file.

The first step is called “demosaicing,” or piecing the whole thing together. You may not realize it, but the image the sensor sees is backwards, upside-down, and chopped up into different areas of red, green, and blue. So when the camera’s processor places each pixel’s readings, it needs to arrange them in an order that’s intelligible to us. With a Bayer color filter it’s easy: pixels have a tessellating pattern of specific wavelengths of light they’re responsible for, making it a simple task to interpolate the missing values between like pixels. For any missing information, the camera will estimate the color values based on the surrounding pixel readings to fill in gaps.
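To show what filling in the gaps looks like, here's a minimal Python sketch that estimates the missing green value at a red or blue pixel by averaging its green neighbours. It assumes an RGGB Bayer layout and plain averaging, which is the simplest possible approach. Real camera pipelines use much smarter interpolation, and the function names here are invented.

```python
def bayer_color(row, col):
    """Colour sampled at (row, col) in an assumed RGGB Bayer pattern."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def interpolate_green(mosaic, row, col):
    """Estimate green at a red or blue site from the green pixels
    directly above, below, left, and right of it."""
    if bayer_color(row, col) == "G":
        return mosaic[row][col]  # green was measured here, nothing to fill in
    neighbours = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < len(mosaic) and 0 <= c < len(mosaic[0]):
            neighbours.append(mosaic[r][c])
    return sum(neighbours) / len(neighbours)
```

In this layout, every red or blue pixel is surrounded on four sides by green ones, so the missing green value is simply their average. The same trick is repeated for the missing red and blue values to build a full-color pixel at every position.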

But camera sensors aren’t human eyes, and it can be tough for them to re-create the scene as we remember it when we snapped the photo. Images taken right from the camera (what’s called a RAW file) are actually pretty dull. The colors will look a bit muted, edges won’t be as sharp as you might remember them to be, and the filesize will be massive. Obviously, this isn’t what you want to share with your friends, so most cameras will add in things like extra color saturation, increase contrast around edges so the shot will look sharper, and finally compress the result so the file is easy to store and share.

Are dual cameras better?

Sometimes!

When you see a phone like the LG G6 or HUAWEI P10 with dual cameras, it can mean one of several things. In the case of the LG, it simply means it has two cameras of different focal lengths for standard and wide-angle shots.

However, the HUAWEI’s system is more complicated. Instead of having two cameras to switch between, it uses a system of two sensors to create one image by combining a “normal” sensor’s output of color with a secondary sensor recording a monochrome image. The smartphone then uses data from both images to create a final product with more detail than just one sensor could capture. This is an interesting workaround to the problem of having only a limited sensor size to work with, but it doesn’t make a perfect camera: just one that has less information to interpolate (discussed above).

While these are merely the broad strokes, let us know if you have a more specific question about imaging. We have our share of camera experts on staff, and we’d love a chance to get more in depth where there’s interest!