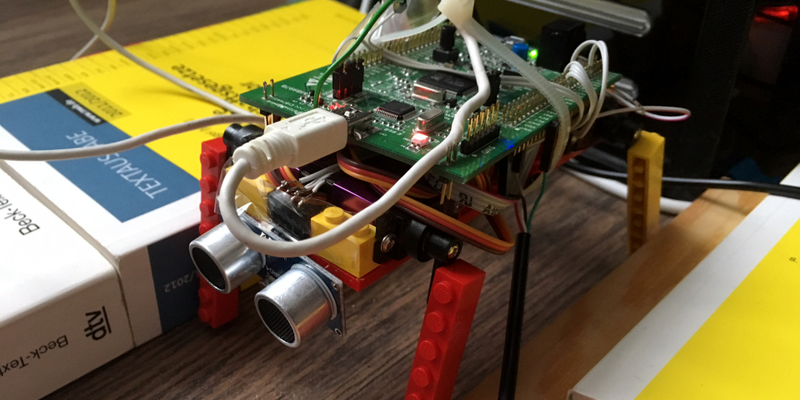

[Basti] was playing around with Artificial Neural Networks (ANNs), and decided that a lot of the “hello world” type programs just weren’t zingy enough to instill his love for the networks in others. So he juiced it up a little bit by applying a reasonably simple ANN to teach a four-legged robot to walk (in German, translated here).

While we think it’s awesome that postal systems the world over have been machine sorting mail based on similar algorithms for years now, watching a squirming quartet of servos come to forward-moving consensus is more viscerally inspiring. Job well done! Check out the video embedded below.

The details of the network are straightforward. In order to reduce the number of output states, each servo can only assume three positions. The inputs include the current and previous leg positions. It’s got a distance sensor on the front, so it can tell when it’s made progress, and that’s it. The rest is just letting it flail around for fifteen minutes, occasionally resetting its position.
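To get a feel for how small the problem becomes with these restrictions, here is a minimal sketch of one possible encoding. It is not [Basti]'s code: the position labels `-1, 0, 1` and the helper `make_input` are assumptions for illustration, and the distance reading is left out.

```python
from itertools import product

NUM_SERVOS = 4           # the "quartet of servos" from the article
POSITIONS = (-1, 0, 1)   # assumed labels for the three allowed positions

# Every joint pose the robot could be commanded into.
ALL_POSES = list(product(POSITIONS, repeat=NUM_SERVOS))

def make_input(current_pose, previous_pose):
    """Hypothetical helper: current and previous leg positions as one input vector."""
    return [float(p) for p in current_pose] + [float(p) for p in previous_pose]

print(len(ALL_POSES))                                   # 81
print(len(make_input((1, 0, -1, 0), (0, 0, 0, 0))))     # 8
```

Three positions on four servos leaves only 81 possible poses, which is why a short flailing session can cover so much of the space.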

This is all made possible by a port of the Fast ANN library to the Cortex M4 microcontrollers, which in turn required [Basti] to port a small read-only filesystem to the chips. Read through his blog for details, or jump straight to the code if that’s more your style.

We’d say that if you’re interested in learning about ANNs, a hands-on application like this is a fantastic motivator. If you’d like to brush up on the basics before diving in, check out [Al]’s two-part series (one and two) on the subject. Meanwhile, we leave you with videos of flailing zip-tied Lego bricks.

This could be a neat thing to have in autonomous bots when they get stuck and confused.

Maybe one can now build a walking model of ED-209?

“It would have been much easier if I had any f$%@$%#ing knees!”

“Why did they give me knees?!? Now I have twice as many degrees of freedom to optimize over! I’ll never learn how to operate this body.”

“You two: Stop whining and keep working, or else you’ll be shut down and put in boxes!”

Looks like it is in some kind of toxic shock to start off, then…. Yay walking!!!!

The human neuro ecosystem has various initiators (DMT, limb-limiting pain thresholds, etc.) and variable, semi-random saturation chemicals (emotion-varying and pain-ignoring chemical deposits, collectively called hormones). This helps us avoid a neurotoxic-like fit (as seen in the video above) during formation and during and after birth, gives us an initial personality, and prepares the nervous system for the survival need of learning lifelong skills.

If those thresholds and chemical actions could be simulated as code inside a neural network… let’s hope it doesn’t become Skynet.

Can you contact me on discord? I would love to discuss this more.

Nobody5050#8971

This is impressive. But it gets back to maybe the largest problem in neural networks: how the heck do you train the things? I’ve never seen any normal, human-readable documentation explaining this that didn’t involve oodles of matrix math or summations that went from minus infinity to plus infinity. Can someone please post a very simple explanation (using something “easy”, perhaps a thermostat controller) that clearly and explicitly explains the training process for coming up with the weights, as if I were a twelve-year-old programmer writing it for the first time? That means no matrix math, and no ridiculous summations that can’t actually be solved in the real world.

Well, simply put, you can’t. Machine learning is a very complex topic that needs calculus and complex algebra. It’s one of the most difficult things in computer science at the moment, and it takes a lot of study, not only of the coding aspect but also of algebra and discrete mathematics.

That is a negative attitude and a little bit elitist. Scrolling up I see a non-mathematical explanation: “The details of the network are straightforward. In order to reduce the number of output states, each servo can only assume three positions. The inputs include the current and previous leg positions. It’s got a distance sensor on the front, so it can tell when it’s made progress, and that’s it.”

I remember a time when technology was new and outsiders would marvel – can you do this/that… [with a computer], and an elitist attitude always prevailed in the social realm, scoffing at the clueless impossibility of the effort/reward ratio involved in accomplishing something for some ‘user’ (perhaps a non-mathematician) whose function could likely be replaced with a script (which ultimately is the goal, although we value the security of our own relevance). Fortunately technology prevailed and the impossible became possible, though it has always been a popular and quickly forgiven position to remain conservatively dismissive. Everyone loves that guy who can explain why something is impossible, even while people are doing it.

Machine learning can, and inevitably will, bring marvelous results from a basically brain-dead search query, along with a dash of auto-suggest, peppered with the learned preferences from the ‘programmer’s’ search history, combined with similar users’ positive experiences. It will be delivered under a Christmas tree to some unappreciative brat and ultimately witness said brat’s graduation, accomplishments, and demise.

Most current training processes are just numerical optimization of a bunch of parameters, so all the math you usually see in explanations is because of that. However, here is a hands-on way to think of it (and I seem to recall this was actually done with analog implementations of neural nets decades ago): you have a black box (the neural network) with a bunch of knobs on it for adjusting parameters, some mechanism to set the inputs to the network (one or more temperature measurements in your house, in your example), and indicators showing the network output.

One way you could train such a thing is to have a list of temperature input values, and for each set of input values you choose what you want the controller output to be. Then you set the inputs of the controller to the first set of temperatures in your list, make small changes to the knobs one at a time, and note which of these tweaks causes the output to move closest to the output value you want for those inputs. Then you apply that best tweak, and move on to the second set of inputs.

Lather, rinse, repeat, until the output is correct (or close enough to correct) for all your given inputs.
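The knob-twiddling recipe can be sketched in a few lines of Python with no matrices at all. Everything here is made up for illustration: a one-neuron "black box" with two knobs, a six-row list of temperatures, and a fixed nudge size. One deliberate deviation from the description above: each tweak is scored against the whole list at once rather than one row at a time, so that a tweak that helps one row does not simply get undone by the next.

```python
import math

# Made-up training list: (room temperature in °C, desired heater output).
EXAMPLES = [(15, 1.0), (17, 1.0), (19, 1.0), (21, 0.0), (23, 0.0), (25, 0.0)]

def controller(knobs, temp_c):
    """The 'black box': one neuron with two knobs, output between 0 and 1."""
    w, b = knobs
    return 1.0 / (1.0 + math.exp(-(w * temp_c / 10.0 + b)))

def total_error(knobs):
    """How far the outputs are from the wanted values, summed over the list."""
    return sum((controller(knobs, t) - want) ** 2 for t, want in EXAMPLES)

def train(knobs, step=0.1, max_rounds=500):
    knobs = list(knobs)
    for _ in range(max_rounds):
        # Try nudging each knob up and down, one at a time.
        tweaks = []
        for i in range(len(knobs)):
            for delta in (step, -step):
                trial = list(knobs)
                trial[i] += delta
                tweaks.append((total_error(trial), trial))
        best_error, best_trial = min(tweaks, key=lambda pair: pair[0])
        if best_error >= total_error(knobs):
            break  # no single tweak helps any more
        knobs = best_trial  # apply the best tweak and go around again
    return knobs

start = [0.0, 0.0]
trained = train(start)
print(total_error(start), total_error(trained))
print(controller(trained, 15), controller(trained, 25))
```

The trained controller should drive the heater harder at 15 °C than at 25 °C, even though nobody told it which way the knobs should turn.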

(Was writing while this came in. Not bad!)

“Can someone please post a very simple explanation for picking the weights?” I doubt it. :) But here goes:

In supervised learning, like this case, you have an idea of whether any change to the weights makes the network perform better or worse. So you make a change to the weights and see what happens. And repeat. That’s lumping a whole bunch of optimization techniques together, and is a bit sloppy, but it’s the basic idea. If you’re happy at an abstract mathematical level, it’s just optimizing a function (how well the model fits the data) over a ton of parameters — the weights — and any way you want to do that is fine by me.

You can think of optimization as hill-climbing, going NS,EW and trying to get to the top of a hill. Take a step. Did I go uphill? If so, keep going. If not, turn around and try another direction. With gradient descent / backpropagation, you end up with the matrices — that you don’t like — telling you the direction to take the step beforehand. When you can’t take any more steps that move you uphill, you’re at the top. Woot!
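A minimal sketch of that NS/EW walk, assuming a made-up landscape with a single summit and a fixed step size (neither comes from the robot project):

```python
def height(x, y):
    """Made-up landscape with a single summit at (3, -1)."""
    return -((x - 3.0) ** 2) - (y + 1.0) ** 2

STEP = 0.5
DIRECTIONS = {"N": (0.0, STEP), "S": (0.0, -STEP), "E": (STEP, 0.0), "W": (-STEP, 0.0)}

def climb(x, y):
    while True:
        for dx, dy in DIRECTIONS.values():
            if height(x + dx, y + dy) > height(x, y):
                x, y = x + dx, y + dy  # went uphill, keep this step
                break
        else:
            return x, y  # no direction goes up: we are at the top

print(climb(0.0, 0.0))  # (3.0, -1.0)
```

Gradient-based methods do the same walk, except that the math tells them which direction is uphill before they take the step.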

Another way that works (simulated annealing) is to take random steps and undo if they were bad. You start out taking big random steps and “cool down” to smaller steps in the end. It takes longer, but making the “wrong” steps can help keep you from getting stuck on the first hill you find, which may not be the tallest mountain. You can mix the random stepping with gradient descent, and more.
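Here is a rough sketch of that idea on a made-up one-dimensional landscape with a small hill and a taller one. The cooling schedule, the step sizes, and the Metropolis-style rule for occasionally keeping a bad step are all assumptions chosen for illustration, not anything from the robot's code.

```python
import math
import random

def height(x):
    """Made-up landscape: a small hill near x = -2 and a taller one near x = 3."""
    return math.exp(-(x + 2.0) ** 2) + 2.0 * math.exp(-(x - 3.0) ** 2)

def anneal(start, steps=5000, seed=0):
    rng = random.Random(seed)
    x = best = start
    for i in range(steps):
        temperature = 1.0 - i / steps  # cools from 1 towards 0
        # Big random steps while hot, small ones once cooled down.
        candidate = x + rng.gauss(0.0, 3.0 * temperature + 0.01)
        gain = height(candidate) - height(x)
        # Always keep good steps; keep bad ones only sometimes, less so when cold.
        if gain >= 0 or rng.random() < math.exp(gain / (0.5 * temperature + 1e-9)):
            x = candidate
        if height(x) > height(best):
            best = x  # remember the highest point seen so far
    return best

found = anneal(-2.5)
print(found, height(found))
```

Starting next to the small hill, a plain hill-climber would stop on it; the early big steps give the annealer a chance to stumble onto the taller one.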

There are many optimization methods. The devil here is in the details, and the classic ANN model was successful expressly because it is relatively easy to optimize the weights — using those matrices. With gigantic parameter spaces, this matters even more.

So… yeah. There’s no one way, and there’s certainly no easy way, to find the maximum of a function of many many parameters. But in the end, it’s just finding the best fit for a target function. (Picking the function in the first place is another kettle of fish.)

(In this example, the ‘bot knows whether it’s moved forward or not — the ultrasonic sensor. So when it takes a step with weights that move it forward, it keeps those weights and tries to improve on them with the next iteration.)

The power of neural networks is that you, the human, don’t need to go through and train them manually (like you would with a PID controller, for example). The weights and biases are updated automatically through a pretty clever algorithm (Backpropagation) that takes advantage of the chain rule from calculus and uses a few matrix multiplications to implement.

At the heart of neural networks is an optimization problem that searches through the space of possible weights and biases to find the ones that best match some performance criteria. The problem with many optimization problems however is that while it is easy to test how well the current solution performs, it is often difficult (i.e. computationally expensive) to decide where the next step in the search should be in order to get to the desired output the fastest. Normally, you would need to increment all of your variables (the weights and biases of the NN) one at a time to see how the overall performance of the system is influenced by each one. Then you would take a “step in the direction” of greatest improvement and then start the process all over again from the new “position” in the variable space. The Backpropagation algorithm replaces all of this heavy computational work with a few matrix multiplications–which computers can perform very quickly–in order to find the best next possible step to make in the search space.
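For a single sigmoid neuron the two approaches can be put side by side. This is a minimal sketch with made-up numbers: `numeric_gradient` wiggles each variable in turn, while `chain_rule_gradient` gets the same answer in one pass using the chain rule, which is the idea backpropagation scales up to whole networks.

```python
import math

def neuron(w, b, x):
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

def loss(w, b, x, target):
    return (neuron(w, b, x) - target) ** 2

def numeric_gradient(w, b, x, target, eps=1e-6):
    """Wiggle each variable a little and watch the loss: one pair of evaluations per variable."""
    dw = (loss(w + eps, b, x, target) - loss(w - eps, b, x, target)) / (2 * eps)
    db = (loss(w, b + eps, x, target) - loss(w, b - eps, x, target)) / (2 * eps)
    return dw, db

def chain_rule_gradient(w, b, x, target):
    """Same slopes from the chain rule, reusing one forward pass."""
    y = neuron(w, b, x)
    dloss_dy = 2.0 * (y - target)
    dy_dz = y * (1.0 - y)          # derivative of the sigmoid
    return dloss_dy * dy_dz * x, dloss_dy * dy_dz

print(numeric_gradient(0.5, -0.2, 1.5, 1.0))
print(chain_rule_gradient(0.5, -0.2, 1.5, 1.0))
```

With two variables the wiggling costs four loss evaluations; with millions of weights it costs millions, which is where the chain-rule version earns its keep.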

All this is to say: matrix math IS real world stuff and without it there really is no training neural networks. With that said, most neural network libraries can be used pretty easily without knowing all of the math behind them. Unfortunately, it can be pretty frustrating to build NN architectures that actually solve the problem that you are trying to solve and this frustration is only going to be alleviated by knowing a bit of the math going on underneath the surface.

You don’t. If I recall correctly, in the old MIT work from the ’90s they had a little trailing wheel hanging off the back for ‘training’. Now we have accelerometers and GPS on a chip to detect forward movement.

The overly simplistic version: it moves its legs at random, and you make sure it really is random. Eventually more than one leg just happens to move backward at the same time, resulting in forward movement. You then weight that movement higher (assign it more likes, stars, whatever), and that weight begins to salt the randomness of the other legs until three and then four happen to move at the same time.
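A toy version of that "salting" can be sketched as below. The robot is replaced by a made-up rule (`moved_forward`), and the position names, bonus size, and trial count are all assumptions; only legs that pushed back get the extra weight.

```python
import random

POSITIONS = ("back", "middle", "forward")
NUM_LEGS = 4

def moved_forward(pose):
    """Toy stand-in for the real robot: progress when two or more legs push back."""
    return pose.count("back") >= 2

def train(trials=500, seed=0):
    rng = random.Random(seed)
    # One preference weight per leg per position, all equal to start with.
    prefs = [{p: 1.0 for p in POSITIONS} for _ in range(NUM_LEGS)]
    for _ in range(trials):
        # Each leg picks a position at random, biased by its current weights.
        pose = [rng.choices(POSITIONS, weights=[leg[p] for p in POSITIONS])[0]
                for leg in prefs]
        if moved_forward(pose):
            for leg, position in zip(prefs, pose):
                if position == "back":
                    leg["back"] += 0.5  # the "more likes/stars" part
    return prefs

for leg in train():
    print(leg)
```

Each lucky coincidence makes the next one more likely, so the legs drift from pure randomness toward moving together.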

These old guys http://www.ai.mit.edu/projects/hannibal/hannibal.html

I still have an old magazine somewhere with them on the cover.

My version was with PIC microcontrollers. We had just gotten a PICSTART Plus at school, and I ordered Parallax’s pic-pgm at home. I was going to use a PIC for each ‘neuron’, with one for each joint and one for the sensors on each appendage. Each leg operated on its own network, which then received commands from a central network with one ‘router’ at each leg. This gave me a reflex ability: if a toe sensor detected a hit, it would send a stop across its local network, causing that leg to stop. A command like stop was high enough priority to get routed to the central network, which in turn caused the rest of the legs to stop and interrupted the central processor.

This network was intended to free up the central processor (an MC68HC000 at the time) so it didn’t have to control the legs. It would place the walk command on the central network and then each leg would see the walk command and coordinate the individual hip/knee/foot movement across its local bus and coordinate the timing of walking with the other legs. The reflex action was so the main cpu didn’t have to think about reading sensors or reacting quickly to an obstacle.

Thought about doing something like that! Did it work, or did it constantly end up out of sync?

Embedded.fm podcast did an episode that involved neural nets a little while back. http://embedded.fm/episodes/174

Also has info on machine learning and some links.

Should it not be titled:

Train a neural network to walk a robot.

Hi, this is an amazing job! Well done! The idea of using the current and previous leg positions as inputs is really great, makes complete sense, and already answers the question I had. But I am still puzzled by the distance. Are you saying it is an input? For backpropagation to work well, shouldn’t it be a condition on the backpropagation rather than an input? Like:

    while (training)
        neural.feedForward(randomMove);
        if (distance > lastMaxDistance)
            // Reinforce only the moves that increased the distance
            neural.backpropagate(lastMoveBecauseItWasGood);
            lastMaxDistance = distance;
        else
            // Don’t backpropagate: it was a bad move
        end if
    end while

Could you please explain this?

I wish someone had answered your question because that is exactly what I am trying to do now with my robot. I have the distance as another input but not sure how to set up the output or even understand entirely what the output would be.

Is this approach DQN?

Awesome. I am also trying to make my quadruped learn how to walk. I am using tensorflow.js in a web browser and built an A2C (actor-critic) model. What are your ACTIONS? One of the three positions for each leg? I also have a gyroscope that could maybe be used for INPUTS and REWARD.