This is the second in the series of our predictive analytics posts. In the first article we presented the concept of getting more clicks without increasing rank. Now we’ll dive deeper into data analysis and practical spread-sheeting tips.

Why is it so important to understand average click-through rates for a website?

Every website, every page, every phrase, is different. Don’t use CTR studies performed by others, on other websites. They’re anecdotal at best. It’s best to use your own data to understand your CTR distribution curve.

Understanding average CTR values for a website allows us to set its basic performance expectations. This helps highlight things that have gone horribly wrong and outstandingly well.

Here’s what I mean

- Users who searched for “Flux Capacitor” found my website on position 4 on average.

- Out of 1000 impressions, I had 100 clicks for that search query.

- That’s 10% CTR on an average rank of 4.

On its own this information doesn’t tell me anything useful, but here’s what happens if I have the expected CTR:

- Expected CTR: 15%

- Actual CTR: 10%.

- Traffic Loss: 5%

Now, I know that I could get up to 50 extra clicks if I bring the CTR value for this query to its expected value – the site average.

Calculating Expected CTR

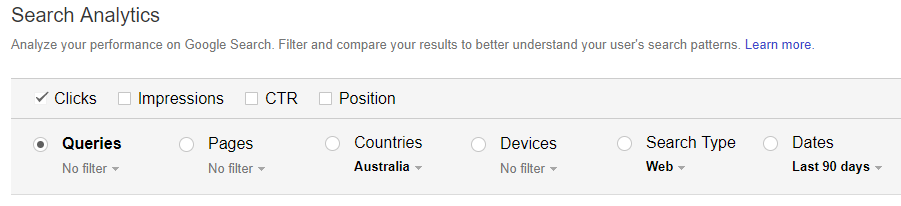

The first step in the process is to download all available traffic data from Google’s Search Console:

Select country (if relevant) and date range before you download the data.

TIP: For smaller websites I usually select the widest available date range (currently limited to 90 days), but remember that we’re now working with 3 months worth of data. Just make sure you don’t count the traffic as “monthly” later down the track.

Bypassing Google’s Export Limit

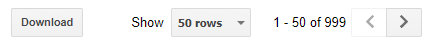

Now, unless you’re using an API, your data export will be limited to 1000 rows.

This is annoying if you’re working with larger websites and have lots of data. We want our study to be statistically valid. So, the more data there is, the better the accuracy.

I have a workaround for this though. Use a filter on the “Queries”, literally put letter “a” as an exclusion. What happens next is that you’ve got the same 999 export, but this time no word in the list contains a letter “a”. Same can be done for other letters and even numbers.

- Bad News: You have to download each one separately.

- Good News: There’s a way to merge them all quickly and easily!

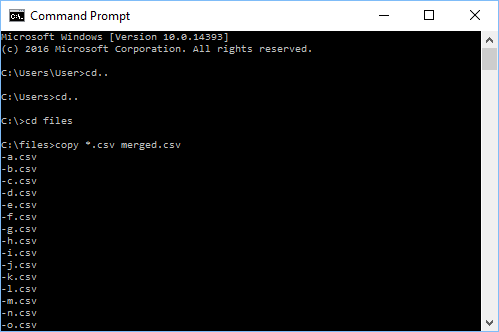

Merging CSVs

Place all your CSV files in the same directory, open CMD at that location and run the following:

copy *.csv merged.csv

If you use a Mac: cat *.csv >merged.csv

All of your CSV exports will be merged in a single file called “merged.csv”.

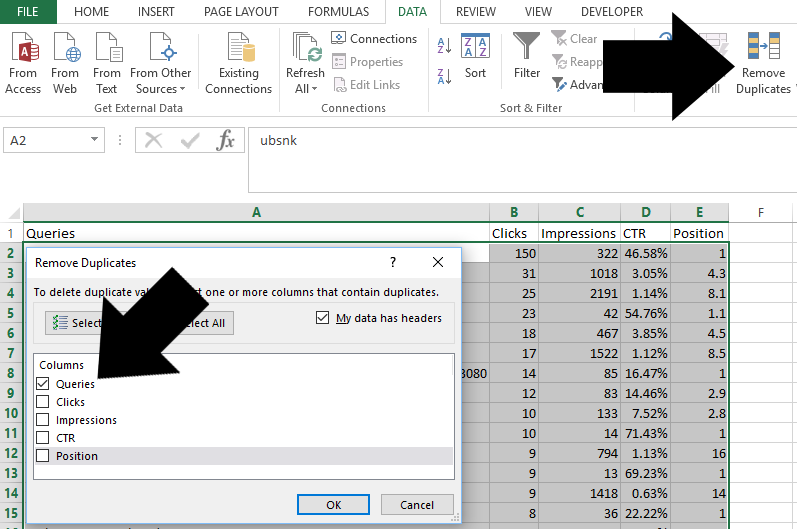

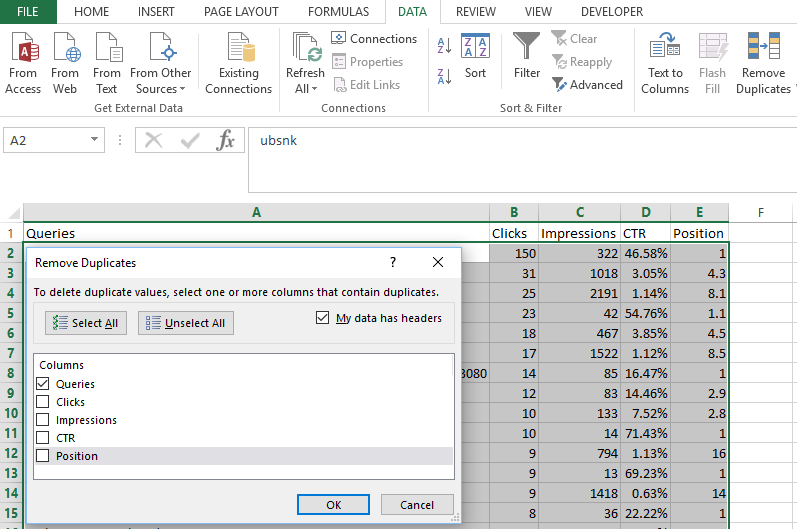

Deduplicating CSV

The next step is to remove all duplicate lines. For example, in Excel, go to Data > Remove Duplicates, and select “Queries” as de-dupe criteria:

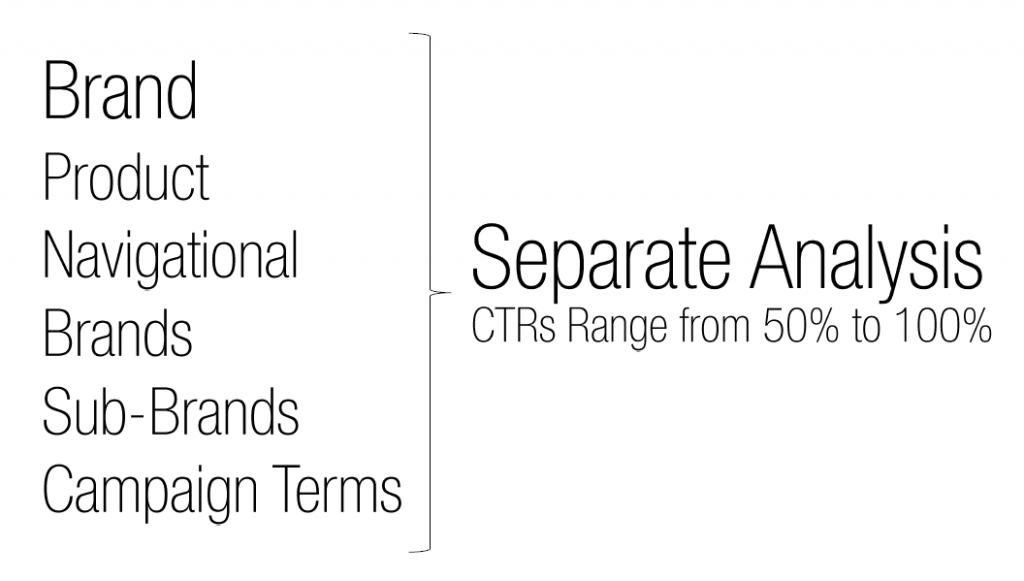

Filtering Out Brand Terms

Brand, product and campaign terms tend to have higher than average CTR and any such groups of terms must be analysed separately.

To remove these terms from your study, use your favourite spreadsheet and method, for example in Excel:

Counting Rank Instances

Rank instances is a number of times any of our queries have ranked on a certain position.

For example:

- 45 instances

- 88 instances

- 129 instances

- 60 instances

- 223 instances

- 211 instances

- 426 instances

- 350 instances

- 444 instances

- 387 instances

So as per above our website has appeared at #1 spot in Google 45 times, at #2 88 times and so forth.

How is this value useful?

Rank instances can be used as a data-reliability metric.

For example:

Our CTR averages on position 1 in Google is 45% and the number of rank instances is 2:

- “Query A” CTR: 40%

- “Query B” CTR: 50%

- Average: 45%

We wouldn’t be able to trust the average CTR of 45% as our “expected CTR” because the average is based on only two queries. My recommendation is to use a threshold of 10 instances or more if possible for high reliability, 5 to 9 will bring an occasional deviation, while anything below 5 returns frequent deviations.

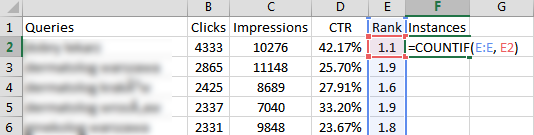

Formula Example

=COUNTIF(E;E, E2)

We’re applying the above formula in the column F of our spreadsheet, next to each rank value:

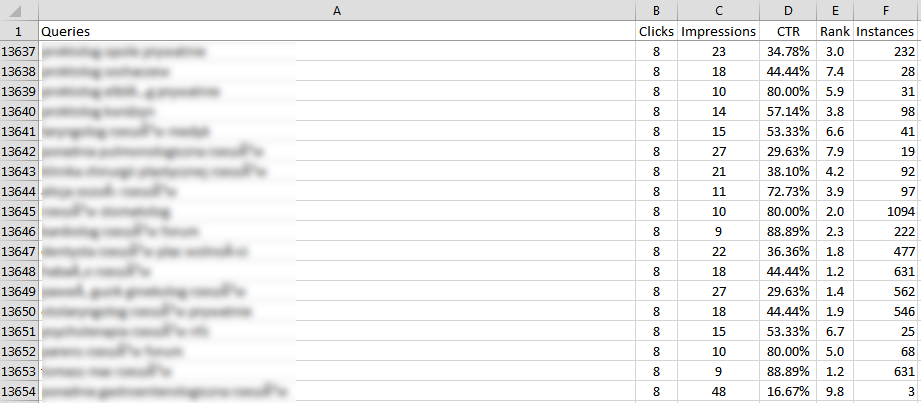

Drag the formula to apply to the rest of your cells. Observe the first line:

Each CTR value in 232 instances of search queries on an average rank of 3.0 contributes towards the CTR average of rank 3.0.

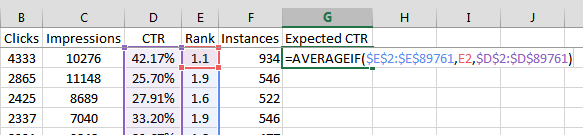

Finding average CTR for each average rank.

We’ll use =AVERAGEIF to do this in the column next to our instances:

In the above example, we’re conditionally averaging all CTR values (D) of matching rank values (E).

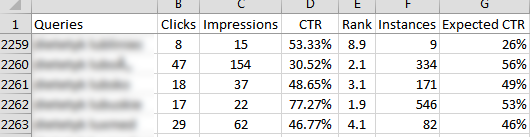

The result is a column next to each of our queries with its expected CTR value (G). If we observe the first row (2259) it’s obvious that the actual CTR beats the expected CTR. This snippet is doing something so good it’s worth investigating. In the second row (2260) we’re seeing an underperformer. The last row (2263) shows a query CTR on par with calculated expectation.

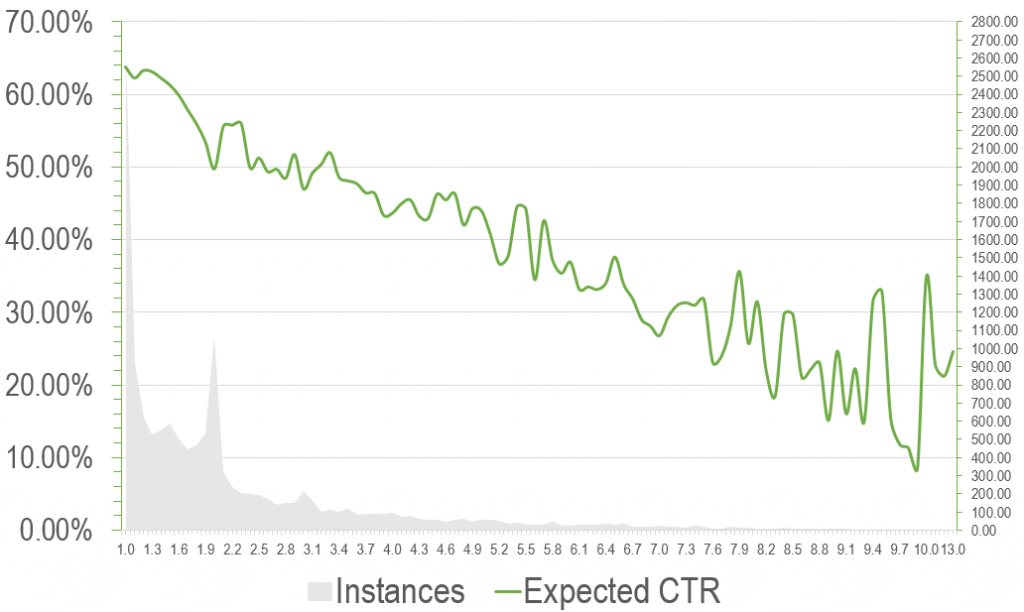

CTR Visualisation

I’ve calculated CTR averages for countless websites, but this one is my favourite! I spoke to the guy in charge of this website and confirmed that nothing went wrong with my calculations. They’re doing that well in SERPs!

Calculating Traffic Potential

In the next article we’ll be calculating lost and gained traffic, the financial impact of SEO and how to make strategic decisions based on your data.

Dan Petrovic, the managing director of DEJAN, is Australia’s best-known name in the field of search engine optimisation. Dan is a web author, innovator and a highly regarded search industry event speaker.

ORCID iD: https://orcid.org/0000-0002-6886-3211