Over the past several years, the term “big data” has frequently been mentioned, often in passing, as a vague and generalized concept that represents the accumulation of inconceivable amounts of information demanding storage, management, and analysis, often ranging from a few dozen terabytes to several petabytes (1 petabyte = 10^15 bytes) in a single data set [1]. In essence, the “big data” movement seeks to capture overarching patterns and trends within these sets, whose volume exceeds what current software tools and strategies can handle. Big data is particularly relevant in the neurosciences, where a vast amount of high-dimensional neural data arising from incredibly complex networks is being continuously acquired.

As with big data as a whole, one of the central challenges of modern neuroscience is to integrate and model data from a variety of sources to determine similarities and recurring themes between them [2]. That is, the vast array of techniques used in neuroscience (from GWAS to viral tracing to electrophysiology to fMRI to artificial network simulations) generates data sets with varying characteristics, dimensions, and formats [3,4]. In order to meaningfully combine data from each of these sources, we need a comprehensive and universal strategy for integrating findings of all types and from all scales into a simple and cohesive story.

Drs. Peiran Gao and Surya Ganguli of Stanford University highlight the difficulty in extracting meaningful trends from big neural data sets in their review titled “On simplicity and complexity in the brave new world of large-scale neuroscience”. They emphasize the particular hurdle of extracting a coherent conceptual understanding of neural behavior and emergent cognitive functions from circuit connectivity. Moreover, they offer insight into what it might mean to truly “understand” the brain at every level, in all of its hierarchical complexity.

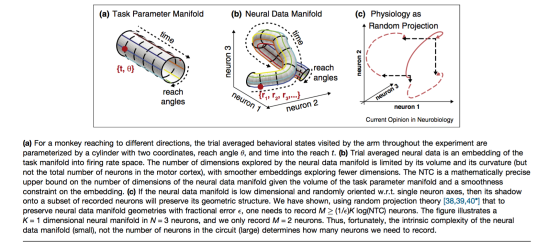

One of the central ideas highlighted in Gao and Ganguli’s review is the notion of Neuronal Task Complexity (NTC), which uses neuronal population autocorrelation across various task parameters to place bounds on the dimensionality of neural data [5]. NTC thus seeks to separate meaningful differences in neuronal firing patterns from random fluctuations in signaling: in a broader sense, it attempts to increase the signal-to-noise ratio (SNR) to extract relevant information about circuit dynamics. In characterizing NTC, the authors demonstrate that it can be used to derive a general understanding of neuronal circuit behavior with relatively little information. That is, recording from more neurons in the brain does not necessarily result in a better encapsulation of the phenomena we seek to explain (more data is not always better), and NTC enables us to better quantify the experimental data needed to draw these broad conclusions.
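To make the "more neurons is not always better" point concrete, here is a minimal sketch on synthetic data. It is not the authors' NTC calculation: it uses the participation ratio of the covariance spectrum as one common measure of dimensionality, and it assumes each simulated neuron's firing rate is a smooth random function of a single task parameter (time) with a fixed correlation length. The function names `participation_ratio` and `simulate_population`, and all parameter values, are hypothetical choices made for illustration.

```python
import numpy as np


def participation_ratio(data):
    """Dimensionality of data with shape (n_conditions, n_neurons).

    Computed as (sum of eigenvalues)^2 / (sum of squared eigenvalues)
    of the covariance matrix, after removing each neuron's mean.
    """
    centered = data - data.mean(axis=0, keepdims=True)
    # Squared singular values are proportional to the covariance
    # eigenvalues; the proportionality constant cancels in the ratio.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    eigenvalues = singular_values ** 2
    return eigenvalues.sum() ** 2 / (eigenvalues ** 2).sum()


def simulate_population(n_neurons, n_timepoints=200, corr_length=20.0, seed=0):
    """Synthetic firing rates with shape (n_timepoints, n_neurons).

    Assumption: every neuron is an independent smooth random function of
    time, with a squared-exponential autocorrelation of width corr_length.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_timepoints)
    kernel = np.exp(-0.5 * ((t[:, None] - t[None, :]) / corr_length) ** 2)
    # Matrix square root of the kernel via its eigendecomposition;
    # clipping removes tiny negative eigenvalues from round-off.
    eigvals, eigvecs = np.linalg.eigh(kernel)
    root = eigvecs * np.sqrt(np.clip(eigvals, 0.0, None))
    return root @ rng.standard_normal((n_timepoints, n_neurons))


if __name__ == "__main__":
    for n_neurons in (10, 50, 200, 1000):
        rates = simulate_population(n_neurons)
        print(n_neurons, "neurons -> dimensionality",
              round(participation_ratio(rates), 2))
```

In this toy setting the measured dimensionality levels off as neurons are added, because it is limited by how much the task parameter lets the activity vary (roughly the task duration divided by the correlation length) rather than by how many neurons are recorded. Shortening `corr_length` or lengthening the simulated task raises that ceiling, which loosely mirrors the idea that richer tasks, not just more neurons, are needed to reveal higher-dimensional activity.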

Using NTC, we can better clarify the distinction between effective and excessive data collection, and home in on the intrinsic principles that govern our cognition without gathering data that needlessly cloud our ability to distinguish these complexities. In the accompanying figure from Gao and Ganguli’s review, the essential components of NTC are explained using neuronal modulation of behavioral states as an experimental example.

In addition to describing the essential components and uses of NTC as a means to measure the complexity of neural data given particular task parameters and assumptions, the authors also explain the broader meaning of what NTC tells us: rather than simply focusing on the number of neurons we record from, we need to develop more intricate and clearly defined behavioral experiments that will cause predictable and observable alterations in neural activity. This will help to ensure that any significant patterns we see can be meaningfully interpreted in context and effectively incorporated into a broader perspective of how neuronal firing patterns give rise to behavior and cognition.

By underscoring the need for interaction between experimental work, data analysis, and the theory behind the operation and dynamics of neural circuitry, Gao and Ganguli argue that gaining a comprehensive understanding of the brain will require a communal effort and input from all areas of neuroscience. By extension, the ability to test the validity of several models at once, and the interactions between them, will be indispensable in determining which ones best align with acquired data and with one another. By comparing multiple models from all sub-fields of neuroscience, a more complete and accurate understanding of the brain can be derived. Thus, in catalyzing the generation of broader and more accurate conceptual frameworks, both artificial simulations of neural activity and the adoption of a wider range of experimental techniques will enable us to gain a more complete understanding of how the brain makes us who we are.

Marley Rossa is a first-year graduate student currently rotating in Jeff Isaacson’s lab. She is, as of now, content with studying the electrophysiology of individual neurons and will leave the hardcore petabyte-level analysis to the more computationally inclined.

Sources

[1] Hashem IAT, Yaqoob I, Anuar NB, Mokhtar S, Gani A, Khan SU: The rise of “big data” on cloud computing: Review and open research issues. Information Systems 2015, 47:98-115.

[2] Stevenson IH, Kording KP: How advances in neural recording affect data analysis. Nat Neurosci 2011, 14:139-142.

[3] Chung K, Deisseroth K: CLARITY for mapping the nervous system. Nat Methods 2013, 10:508-513.

[4] Prevedel R, Yoon Y, Hoffmann M, Pak N, et al.: Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat Methods 2014, 11:727-730.

[5] Gao P, Ganguli S: On simplicity and complexity in the brave new world of large-scale neuroscience. Curr Opin Neurobiol 2015, 32:148-155.

[6] Gao P, Trautmann E, Yu B, Santhanam G, Ryu S, Shenoy KV, Ganguli S: A theory of neural dimensionality and measurement. Computational and Systems Neuroscience Conference (COSYNE) 2014.

[7] Gao P, Ganguli S: Dimensionality, coding and dynamics of single-trial neural data. Computational and Systems Neuroscience Conference (COSYNE) 2015.