Display resolutions are growing, but the graphics processing power needed to serve these displays with content isn’t growing at the same rate. Enter foveated rendering, a technique commonly held as one of the next logical steps in making VR headsets more immersive. The idea itself is well understood: track the user’s eye movements and render only the area at the center of the user’s gaze in high definition, leaving the rest to blur unnoticed in the periphery. Even so, it isn’t a fully solved problem at this stage. To that end, Google Software Engineer Manager Behnam Bastani and Daydream Software Engineer Eric Turner recently posted a quick overview of a few techniques in the company’s nascent foveated rendering pipeline for AR/VR. Here’s a slightly less technical version:

Through their research, Bastani and Turner say the current limitations in tackling foveated rendering are content creation, data transmission, latency, and enabling interaction with real-world objects while in augmented reality. To help address some of these issues, Bastani and Turner outline three major research areas in the new pipeline: foveated rendering to reduce compute per pixel, foveated image processing to reduce visual artifacts, and foveated transmission to tackle latency by lowering the number of bits per pixel transmitted.

Traditional foveation techniques, which divide a frame into several discrete spatial resolution regions, tend to cause noticeable artifacts like aliasing in lower resolution areas of content. This, predictably, ruins the overall desired effect—a peripheral area that should never be noticeably ‘off’ in any way. The gif below shows a full resolution rendering on the left, and a traditional foveated rendering on the right (with high-acuity region in the middle).
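To give a rough sense of the savings at stake, here is a minimal sketch of the pixel budget for a simple two-layer scheme: the whole frame rendered at reduced resolution, plus a full-resolution inset around the gaze point. The function name, the two-layer layout, and the example numbers are illustrative assumptions, not figures from Google’s post.

```python
def foveated_pixel_count(width, height, inset_fraction, periphery_scale):
    """Pixels shaded per frame in a hypothetical two-layer foveated layout.

    inset_fraction: side of the high-acuity inset as a fraction of the frame side.
    periphery_scale: per-axis resolution scale of the low-acuity layer.
    """
    low = round(width * periphery_scale) * round(height * periphery_scale)
    high = round(width * inset_fraction) * round(height * inset_fraction)
    return low + high


full = 2000 * 2000
foveated = foveated_pixel_count(2000, 2000, inset_fraction=0.25, periphery_scale=0.25)
print(f"{foveated} of {full} pixels ({foveated / full:.1%})")
```

With these made-up numbers, the foveated frame shades one eighth of the pixels of the full-resolution frame. The cost is the coarse sampling in the periphery that produces the aliasing described above.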

[gfycat data_id=”NeighboringExemplaryCob”]

Google’s researchers introduce two methods to attack these artifacts: Phase-Aligned Foveated Rendering and Conformal Foveated Rendering. After that, you’ll read about two more techniques to make displaying content more efficient.

Phase-Aligned Foveated Rendering

In Phase-Aligned rendering, the researchers reduce artifacts by aligning the rendering frustums (the volumes of the scene visible to the virtual camera) rotationally to the world (e.g. always facing north, east, south, etc.) rather than to the current frame’s head rotation, as is conventionally done.

The resulting render is then upsampled and reprojected onto the display to compensate for the user’s head rotation, which, according to their research, reduces flicker. You can see that some aliasing still remains in the right panel, but it’s much less prominent and ‘slower’ to materialize than in the choppier traditional scene on the left.

[gfycat data_id=”InbornLimitedKingbird”]

The researchers say this method is more expensive to compute than traditional methods because it requires two rasterization passes (rasterization being the step that draws the image for the display), but it still yields a “net savings” by rendering the low-acuity areas at a lower quality. A downside is that it still produces a distinct boundary between the high and low-acuity regions, which could be noticeable.
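The intuition behind phase alignment, sampling on a grid fixed to the world rather than to the head, can be illustrated in one dimension. The sketch below is a toy model under stated assumptions: the striped `scene`, the 5-degree sample spacing, and all function names are hypothetical, and the reprojection step that maps the world-aligned image back onto the display is left out.

```python
import math


def scene(angle_deg):
    """Hypothetical world content: alternating stripes, each 3 degrees wide."""
    return 1.0 if math.floor(angle_deg / 3.0) % 2 == 0 else 0.0


def head_locked_directions(head_yaw, n=8, step=5.0):
    """Conventional case: the coarse sample grid slides with every head rotation."""
    return [head_yaw + i * step for i in range(n)]


def world_locked_directions(head_yaw, n=8, step=5.0):
    """Phase-aligned idea: snap the grid to fixed world directions."""
    first = math.floor(head_yaw / step) * step
    return [first + i * step for i in range(n)]


def changed_samples(directions_fn, yaw_a, yaw_b):
    """Count coarse samples whose value differs between two head poses."""
    a = [scene(d) for d in directions_fn(yaw_a)]
    b = [scene(d) for d in directions_fn(yaw_b)]
    return sum(1 for x, y in zip(a, b) if x != y)


print("head-locked :", changed_samples(head_locked_directions, 0.0, 1.0))
print("world-locked:", changed_samples(world_locked_directions, 0.0, 1.0))
```

For a one-degree head turn, the head-locked grid lands on different parts of the stripes, so several coarse samples flip value from one frame to the next. The world-locked grid samples the same directions in both frames, so nothing changes until the head crosses a grid step, which is one way to picture why the aliasing that remains is slower to change.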

Conformal Rendering

Visual acuity rises smoothly from low to high the closer you get to the fovea centralis (the highest-resolution part of the eye), and conformal rendering takes advantage of this by trying to match that transition exactly in the final image.

Compared to other foveation techniques, this is said to reduce the total number of pixels computed, and also prevents the user from seeing a clear dividing line between high-acuity and low-acuity areas. Bastani and Turner say these benefits allow for “aggressive foveation … while preserving the same quality levels, yielding more savings.”

Unlike phase-aligned rendering, conformal rendering only requires a single pass of rasterization. A downside to conformal rendering compared to phase-alignment is that aliasing artifacts still flicker in the periphery, which may not be great for applications that require high visual fidelity.

[gfycat data_id=”UnlawfulDamagedIcelandicsheepdog”]

You can see conformal rendering on the right, where content is matched to the eye’s visual perception and HMD lens characteristics. The left shows plain Jane foveated rendering for comparison. Both methods use the same number of total pixels.
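The difference between a hard region boundary and a smooth falloff can be sketched numerically. The example below assumes a simplified acuity model in which relative resolution falls off as 1 / (1 + slope × eccentricity); the slope of 0.3, the 10-degree fovea radius, and the function names are arbitrary illustrative choices. This is not the mapping used by Google’s researchers, which also accounts for HMD lens characteristics.

```python
def smooth_density(ecc_deg, slope=0.3):
    """Relative resolution under an assumed smooth acuity falloff model."""
    return 1.0 / (1.0 + slope * ecc_deg)


def stepped_density(ecc_deg, fovea_radius_deg=10.0, low=0.25):
    """Relative resolution for a two-region layout with a hard boundary."""
    return 1.0 if ecc_deg <= fovea_radius_deg else low


def largest_jump(density_fn, max_ecc_deg=40):
    """Largest drop in density between neighbouring one-degree steps."""
    values = [density_fn(float(e)) for e in range(max_ecc_deg + 1)]
    return max(a - b for a, b in zip(values, values[1:]))


print("stepped:", largest_jump(stepped_density))
print("smooth :", round(largest_jump(smooth_density), 3))
```

The stepped layout loses three quarters of its resolution across a single degree at the region boundary, while the smooth curve never drops by more than about a quarter per degree. That gradual transition is what keeps a visible dividing line from appearing.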

Foveated Image Processing

After rendering, some of the last things to do before putting AR/VR content in front of your eyeballs include image processing steps like local tone mapping, lens distortion correction, or lighting blending.

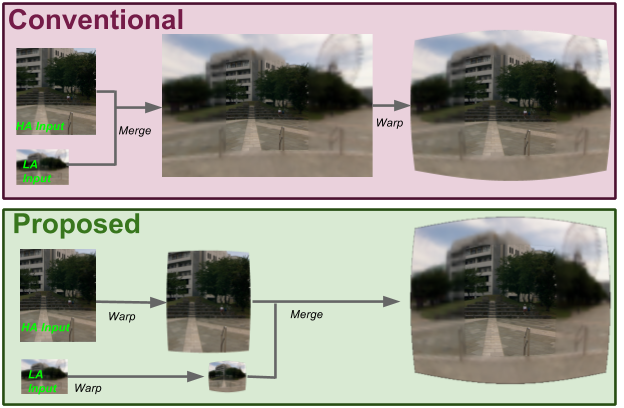

By running lens distortion correction on foveated content before upscaling, significant savings are gained with no additional perceptible artifacts. As the diagram shows, although the proposed version has extra steps before merging the low and high-acuity images, it does less work overall.

Foveated Transmission

Because Google focuses so much on mobile VR/AR applications, saving power is a serious issue to tackle head on. The researchers maintain their brand of ‘foveated transmission’ will save power and bandwidth by transmitting the bare minimum amount of data necessary to the display.

Power savings can be had by moving the simpler jobs of upscaling and merging frames to the display side and transmitting only the foveated rendered content itself. Things reportedly get more complex if the foveal region moves in tandem with eye-tracking. Temporal artifacts can also arise, the researchers say, since the compression used between the mobile system on a chip (SoC) and the display is not designed for foveated content (yet).
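As a back-of-the-envelope illustration of why this matters, the sketch below compares the uncompressed link bandwidth for sending a fully merged frame against sending the low and high-acuity layers as rendered. The resolutions, the 24 bits per pixel, the 60 frames per second, and the function name are all assumed values for illustration, not numbers from the researchers.

```python
def link_bits_per_second(layers, bits_per_pixel=24, fps=60):
    """Uncompressed bandwidth to send the given (width, height) layers each frame."""
    pixels = sum(w * h for w, h in layers)
    return pixels * bits_per_pixel * fps


# Upscale and merge on the SoC, then send the full-resolution frame.
merged = link_bits_per_second([(2000, 2000)])
# Send both layers as rendered; the display side upscales and merges them.
foveated = link_bits_per_second([(500, 500), (500, 500)])

print(f"merged  : {merged / 1e9:.2f} Gbit/s")
print(f"foveated: {foveated / 1e9:.2f} Gbit/s")
```

Under these assumptions, the foveated link carries one eighth of the data. A real system would also compress the stream, which is where the temporal artifacts mentioned above come into play.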

You can read the full research page here. It’s slightly more technical, and includes a few more images for reference.