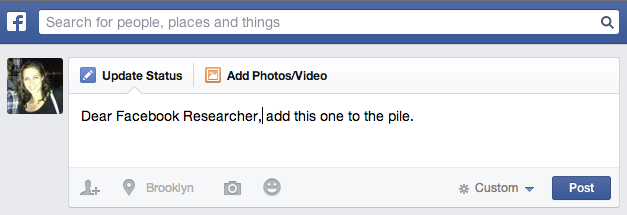

Facebook released a study (PDF) last week indicating that the company is moving into a new type of data collection in earnest: the things we do not say. For an analysis of self-censorship, two researchers at Facebook collected information on all of the statuses that five million users wrote out but did not post during the summer of 2012.

Facebook is not shy about the information it collects on its users. Certain phrasings in its data use policy have indicated before that it may be collecting information about what doesn’t happen, like friend requests that are never accepted. Capturing the failures of Facebook interactions would, in theory, allow the company to figure out how to mitigate them and turn them into “successes.”

Adam Kramer, a data scientist at Facebook, and Sauvik Das, a summer Facebook intern, tracked two things for the study: the HTML form element where users enter original status updates or upload content and the comment box that allows them to add to the discussion of things other people have posted. A “self-censored” update counted as an entry into either of those boxes of more than five characters that was typed out but not submitted for at least the next 10 minutes.

Das and Kramer tracked the activity of the random sample of five million users for 17 days in July 2012. The paper states that they studied the HTML form element interactions but “not the keystrokes or content.”
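As a rough illustration of that definition, the rule could be expressed as the check below. This is a minimal sketch, not the researchers' code: the `Entry` record, its field names, and the treatment of an entry submitted at exactly the 10-minute mark are all assumptions. It uses only a character count and timestamps, in keeping with the paper's statement that keystrokes and content were not recorded.

```python
from dataclasses import dataclass
from typing import Optional

MIN_CHARS = 5              # entries must be longer than five characters
WINDOW_SECONDS = 10 * 60   # the 10-minute window described in the article


@dataclass
class Entry:
    """One composition event. All field names here are hypothetical."""
    char_count: int                # how many characters were typed (no content)
    typed_at: float                # time of typing, in seconds
    submitted_at: Optional[float]  # time of submission, or None if never posted


def is_self_censored(entry: Entry) -> bool:
    """Apply the article's rule: more than five characters, typed out,
    and not submitted for at least the next 10 minutes."""
    if entry.char_count <= MIN_CHARS:
        return False
    if entry.submitted_at is None:
        return True
    # Assumption: a post submitted exactly at the 10-minute mark counts
    # as self-censored, since it went unsubmitted for the full window.
    return entry.submitted_at - entry.typed_at >= WINDOW_SECONDS
```

Under this sketch, a 20-character draft that is abandoned counts as self-censored, while the same draft posted 30 seconds later does not.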

Over the course of those 17 days, 71 percent of the users typed out a status, a comment, or both but did not submit it. On average, they held back on 4.52 statuses and 3.2 comments.

In addition to that information, Das and Kramer took note of the users’ demographic information, “behavioral features,” and information on each user’s “social graph” like the average number of friends of friends or the user’s “political ideology” in relation to their friends’ beliefs. They used this information to study three cross sections with self-censorship: how the user’s political stance differs from the audience, the user's political stance and how homogenous the audience is, and the user’s gender related to the gender diversity of their network.

The demographic data suggested that people are more likely to self-censor when they feel their audience is hard to define. Posting an update to all of one’s friends, let alone one available to the entire public, is daunting because the audience is diverse. Users are less likely to self-censor a comment on someone else’s post, however, because the audience seems better-defined—they’re “succinct replies to a known audience,” the researchers write.

But highly specific audiences, like Facebook groups, also seemed to be tied to self-censorship. Das and Kramer speculate that this is because the group’s interests may be narrowly defined, and a poster may feel like they have to nail the right topic area.

Friend homogeneity was also linked with self-censorship. Men are more likely to self-censor than women, and even more so when more of their friends are male than female. People with “a diverse set of friends in fewer distinct communities actually self-censor less,” which Das and Kramer suggest means that “users more often initiate or engage in ongoing discussions with a diverse audience over a homogenous one.”

The authors suggest that “present audience selection tools are too static and content agnostic”—that is, the way users categorize Facebook friends happens along different lines than the ones determining how they share content.

The research is relevant to the methods of News Feed curating Facebook has been rolling out over the last couple of years. Facebook is no longer a static update hose; rather, it promotes content that it thinks users will be interested in based on how they use Facebook, from both friends and fan pages.

But those methods can’t solve this problem of people shying away from using Facebook, an event that sounds like one of Facebook’s biggest nightmares. It’s Facebook’s version of the shopping cart abandonment problem: when its users aren’t making the final clicks to feed the Facebook machine with the content it needs, it’s Facebook’s lost business.

The paper suggests that Facebook would somehow need to communicate to users that their posts will be well-received based on Facebook’s own News Feed managing dexterity. But neither homogeneity nor well-defined topic interest is related to more sharing; both are in fact related to less sharing. Not only can users not easily determine how to share their content; based on the ineffectiveness of friend lists or groups, the study makes clear that Facebook can’t neatly clean up self-censorship, either.

The study also leaves out what is likely an important factor in self-censorship: what users decide not to share. Based on what was collected here, Facebook can easily track the whens and hows of even the information users don’t give it. The content of that information would be a possible next step. Facebook did not return requests for comment on whether the self-censorship instances are always curated for all users or if it was data collected specifically for the study.

Facebook seems to be placing increasingly heavy weight on the roads not taken: the site also recently indicated that it would start tracking minute non-interactions like mouse movements over its webpages. As Slate points out, even information not explicitly submitted to Facebook, including passive actions like typing in a box, is covered as collectible by the data use policy. Users who are wary of being tracked, be warned: services like Facebook can see what you start, in addition to what you finish.
