The Broadcom SoC in the Raspberry Pi turns out to be surprisingly powerful: it can drive a VGA monitor through the GPIO pins using a handful of resistors.

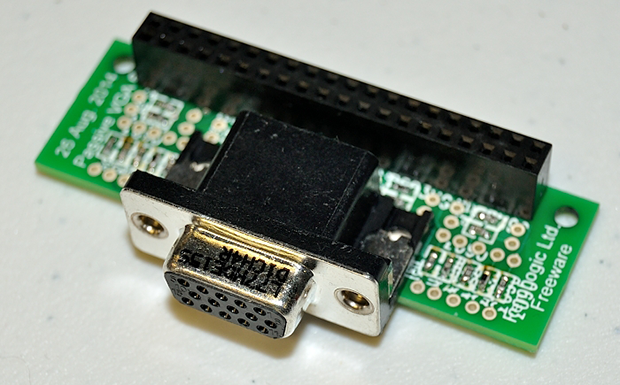

[Gert van Loo], Raspberry Pi chip architect, wizard, and creator of a number of interesting expansion boards, showed off a VGA adapter for the new B+ model at the Raspberry Pi Jam in Cambridge this week. Apparently, there is a parallel interface on the SoC that can drive VGA through a resistor ladder DAC. That’s native VGA at 1080p at 60 fps in addition to HDMI for the Raspberry Pi. Only the new Model B+ has enough pins to do this, but it’s an intriguing little board.

The prospect of having two displays for a Raspberry Pi is very interesting, and the remaining four GPIOs available mean a touch screen could be added to one display, effectively making a gigantic Nintendo DS. Of course there are more practical problems a dual display Raspi solves, like driving a projector for the current crop of DLP/resin 3D printers while still allowing for a usable interface during a print.

The VGA expansion board, “is likely to have issues with EMC,” which means this probably won’t be a product. Getting a PCB made and soldering SMD resistors isn’t that hard, though, and we’ll post an update when the board files are released.

Thanks [Uhrheber] for sending this one in.

Probably he used the Secondary Memory Interface on the BCM chip.

No. Here is the document on github: https://raw.githubusercontent.com/fenlogic/vga666/master/documents/vga_manual.pdf

Great news I thought – just until I realized that’s another thing feasible only on the B+. Sometimes I’d really wish Raspi had VGA output instead of HDMI, even with its huge connector. All those legacy monitors out there, waiting to be driven!

You can get a HDMI to VGA converter for the Pi for around £7 from Amazon that works with any model Pi

It’s about VGA & HDMI Simultaneously

i have absolutely no idea what “the right pins” means in the article above, but from what i understand, the main reason it uses all but four of the pins of the b+ is for a giant r2r dac.

couldn’t this have been done by using some sort of external DAC for output, while still using the capabilities of the chip to render to 2 displays?

wouldn’t that leave more pins up for grabs?
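To put rough numbers on why a resistor DAC eats so many pins, here is a minimal sketch of an ideal binary-weighted DAC. The 6 bits per channel, the two sync pins, and the 0.7 V full-scale level are assumptions for illustration only; the real board's resistor values and the 75 Ω monitor load are not modelled, and any extra clock or enable pins are ignored.

```python
def dac_level(code: int, bits: int = 6, full_scale: float = 0.7) -> float:
    """Ideal output voltage for an input code, scaled so the top code hits full scale."""
    if not 0 <= code < (1 << bits):
        raise ValueError("code out of range for this DAC width")
    return full_scale * code / ((1 << bits) - 1)


def pins_needed(bits_per_channel: int, sync_pins: int = 2) -> int:
    """GPIO count for three colour channels plus sync (a simplification)."""
    return 3 * bits_per_channel + sync_pins


if __name__ == "__main__":
    for bits in (4, 5, 6, 8):
        print(f"{bits} bits/channel: {pins_needed(bits)} pins, "
              f"{1 << (3 * bits)} colours")
```

Dropping bits per channel frees pins quickly, at the cost of colour depth.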

Given that he knew enough to exploit an interface no-one had touched before like this, my guess is he considered it and decided against it.

VGA is clocked at ~25 MHz, so it should be doable. That said, any kind of muxing logic would add significant CPU load.

My guess is it would make more sense to do all the graphics stuff on the RasPi, and use the 4 pins to connect everything else via I2C, etc. Alternatively, you could just use the right device for the right job…

At 640×480, it is clocked at 25 MHz.

At 1920×1080, it is 183 MHz.
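The pixel clock follows from the total frame size (visible pixels plus blanking) times the refresh rate, so the figure depends on which timing you pick. A small sketch, assuming the commonly published totals of 800×525 for 640×480 and 2200×1125 for the CEA-861 1080p mode; timings with more blanking give a higher clock for the same visible resolution.

```python
def pixel_clock_hz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock = total pixels per line * total lines per frame * frames per second."""
    return h_total * v_total * refresh_hz


if __name__ == "__main__":
    # Totals include blanking; these values are assumptions taken from common timing tables.
    vga = pixel_clock_hz(800, 525, 59.94)      # 640x480 visible
    full_hd = pixel_clock_hz(2200, 1125, 60)   # 1920x1080 visible
    print(f"640x480:   {vga / 1e6:.3f} MHz")
    print(f"1920x1080: {full_hd / 1e6:.3f} MHz")
```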

I asked myself nearly the same thing. But it is/was pretty common to use 8 or 10bit parallel DACs in VGA interfaces. We could use one here, (like the ADV7123), and in fact people have used such DACs to bring VGA to microcontrollers or FPGAs.

I think there were VGA DACs in the past, that had multiplexed data pins, but I may be wrong. Anyways, the source has to support this, and it’s not clear if the Raspi does.

But other things would be nice to know:

– Does the interface support the right timing to drive analog RGB video monitors (original MAME cabinets, remember)?

– Can the parallel interface also be an input, sampling several channels simultaneously at high speeds (the Beaglebone can do it, using its PRUs, making it a fast logic analyzer for Sigrok)?

– Can the parallel interface be used to generate fast signals with precise timings (e.g. driving several chains of WS2811 LEDs for ArtNet; again, the Beaglebone can do this)?

The Raspberry Pi is too underpowered to be usable for a MAME setup, and more to the point the Raspberry Pi MAME ports (at least the ones I can find) are based on ancient builds of MAME (up to a decade old in some cases), with who knows how many bugs or emulation issues that have been fixed since those builds were released.

Why people persist in using ancient builds of MAME is beyond me. I bet the current 0.153 Linux build of MAME (with the OpenGL stuff ported to the Pi OpenGL ES interface) would probably work just fine on the thing.

You need to dig into the MAME release notes to understand the why of it.

A couple of points… (these are all my recollection which is probably imperfect :) )

Earlier versions of MAME tended to use simplified, albeit inaccurate, routines for some ICs. This is especially true if the IC is a custom logic chip that has not been reverse engineered yet. Once the IC has been RE’ed and better understood, the resulting new code could be more complex. As you know, more complex routines take better hardware to process in the same amount of time.

Earlier versions of MAME often used fixed bitmaps, such as the starfield in Galaga, rather than rendering them properly. Once those routines are figured out, that’s more work for the emulator to do.

A ton of the early games in earlier versions used sampled audio rather than rendered audio. As with the fixed bitmaps, those were taken care of in later versions of MAME as well.

I could be wrong on this last point since I haven’t looked at the latest versions but OpenGL in MAME isn’t used in the actual emulation but rather for final rendering of special effects, eg scan lines, the monitor bezel, whatever. In other words, OpenGL isn’t going to boost your emulation any and isn’t going to help the Pi overcome the increased processing requirements in later versions of MAME. I believe this last bit is a point of contention between some of the developers and the consuming masses as the developers have no intention of leveraging native hardware even if the PC running the emulator has said hardware.

Dunno, maybe polygons would come in handy emulating resizeable sprite hardware. As in Neo-Geo, and Sega’s Super Scaler games like Outrun and After Burner. Modern processors can do the same job in software easily enough, and maybe there’s some quirky way of doing it required to get pixel-perfect accuracy.

Even ordinary non-shrinking sprites can be done as polygons. Like the Sega Saturn, but backwards!

Actually I’m using the Raspberry Pi to rebuild my Centipede cabinet, and I have MAME running on it just fine. I had to get a HDMI to VGA adapter as well as a VGA to RGB board in order to drive the old 15 kHz monitor. So yes, some of us are interested in seeing if you could bypass two pieces of equipment with just one that’s powered by the Pi alone.

He is probably using a digital LCD interface here (yeah, likely the memory-interface). This basically means, that the SOC can drive it independently of the OS, pushing framebuffer data with a configurable clock towards the digital pin.

You can most likely free up pins by reducing the bits you allocate to the DAC-Resistor ladder and mux the least significant bit pins to a different purpose.

Using an external DAC that gets fed by some sort of bus would need driver support, and it is probably nontrivial as soon as accelerated graphics etc. come into play.

Bye,

Simon

Exactly what I was going to post. “dumb” TFT and DSTN displays take either interleaved RGB values over a single parallel bus or separate R/G/B parallel buses, plus some sort of H and V sync. They’re all different in some way or another, so most SoCs with a controller that can drive them have flexible data formatting and timings.

Probably not a video DAC as you find them today: they still have as many as 24 or 30 color input pins for 8-bit per component or 10-bit per component respectively. However, if you could get an old RAMDAC off of ebay or a 90’s era video card you might be in business. The old VGA standard had a programmable 8-bit color palette and therefore a much narrower interface to the video chip.

You don’t need to use SMD. The board will take through-hole resistors as well. You’ll want to get slightly more resistors than you need and cherry pick though.

At 1080p, the pixel clock is almost 200MHz. At that speed, through hole resistors start acting a lot more like inductors than SMD resistors do.
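A quick back-of-the-envelope check of that point, using the inductive reactance formula X_L = 2πfL. The 10 nH lead inductance below is purely an illustrative guess for a leaded part, not a measured value for any particular resistor.

```python
import math


def inductive_reactance_ohms(freq_hz: float, inductance_h: float) -> float:
    """Reactance of an ideal inductor: X_L = 2 * pi * f * L."""
    return 2 * math.pi * freq_hz * inductance_h


if __name__ == "__main__":
    LEAD_INDUCTANCE_H = 10e-9  # assumed parasitic inductance, illustrative only
    for f in (25e6, 200e6):
        x = inductive_reactance_ohms(f, LEAD_INDUCTANCE_H)
        print(f"{f / 1e6:.0f} MHz: {x:.2f} ohms of series reactance")
```

Under that assumption the parasitic reactance grows eightfold between a 25 MHz and a 200 MHz dot clock, which matters when the DAC is driving a 75 Ω load.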

1080p on analogue VGA looks bloody AWFUL and fizzes. This is from first-hand experience. It only just works, and I’m sure it’s a good bit past the spec, if there actually is one. Certainly way more pixels than IBM imagined back in 1987 when they chose the plugs and cables.

I’m not denying that that was your experience, but back when analog video was the only option, lots of people got very good results at even higher resolutions (1920×1440, 2048×1536).

Personally, I’ve run 2048×1536 on a used 21″ CRT back when 1920×1200 LCDs were taking over the CAD world and high-quality used CRTs became cheaply available, and I got beautiful results. More recently, I chose to run VGA in preference to HDMI/DVI for a media center running a 1080p display, because I was using a cheap 1080p LCD TV with no way to turn off overscan from any HDMI input — using VGA, though, it was easy to get pixel-for-pixel correctness, and there was absolutely no visible image degradation using a short (0.5m), quality cable.

IMO, the number one culprit for poor quality at high resolutions is cheap cables — not necessarily “cheap” in the sense of corner-cutting to save cost, but a cable properly designed and manufactured for 40 MHz signals just isn’t enough for 200 MHz or so. VGA cables rated for high dot-clocks are both more expensive and thicker (thus, less flexible) than lower-grade ones, and you likely won’t see any difference at all from VGA up to 1024×768-ish modes, so it’s only sane that most VGA cables are the cheaper sort. But if you do want to rock high resolutions, the good cables (and no longer than needed) make all the difference in the world.

Two Screens? you can have 4.

1 color and 3 Black and White if you separate out R,G,B and use a simple resistor setup to squirt the sync into the colors to give you composite B&W for 3 outputs.

This used to be done all the time back in the day. In fact it is exactly how a BattleTech Tesla pod drives all its secondary displays.

I remember the days when all game cabinets had B&W screens, and the “colors” were made with stripes of color foil.

The first breakout games did it that way. Every row of bricks had a foil in a different color.

Vectrex played on this specifically. Though I’d call it a film rather than foil…

I’m just trying to picture the three images each of 88 (256/3) shades on each monitor and the weird images you would see on a single VGA monitor. I’m picturing full motion video, so three separate and unrelated sources (I’m assuming that all the combining of separate videos into a single RGB pre-processing was done already so that it could run in realtime) would just look mostly like noise ?

sorry 256/3 is 85, i need more 0xc0ffee

Why 256/3? Every color (R, G and B) has 8bits each, meaning you could generate three B&W signals at 256 shades of gray each.

He’s saying split the 256 shades for each monitor into 8-bit color (RRRGGGBB) for each of the 3 “BW” monitors.

Yes I think that was his intention but RGB332 actually gives you 8 shades of red and green and 4 shades of blue, not 88/85.
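For reference, a short sketch of how an RGB332 byte splits into its three fields, which is where the 8/8/4 level counts come from. The function name is just for illustration.

```python
def unpack_rgb332(byte: int) -> tuple[int, int, int]:
    """Split an RRRGGGBB byte into (red, green, blue) field values."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a single byte")
    red = (byte >> 5) & 0b111    # top 3 bits: 8 levels
    green = (byte >> 2) & 0b111  # middle 3 bits: 8 levels
    blue = byte & 0b11           # bottom 2 bits: 4 levels
    return red, green, blue


if __name__ == "__main__":
    levels = [len({unpack_rgb332(b)[i] for b in range(256)}) for i in range(3)]
    print(levels)  # number of distinct red, green and blue levels
```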

R is 8 bits and G is 8 bits and B is 8 bits, but with VGA you are limited to having a table of 256 values on screen at one time. At least in the original 320×200 VGA standard, at least on the PC, as I remember from programming it in assembly years ago.

Ok fartface, who are you and what Podbay do you hail from? :P

I’ve replicated a Tesla pod-style triple-monochrome display, was working on a way to interface it with MW4 for a small VWE-like home setup.

Didn’t get much farther than the displays, sadly.

This is actually quite interesting for people building a MAME cabinet around a RasPi: the old CRT screens (which mostly don’t have HDMI) give a more authentic picture than today’s TFTs.

It is but one could always pick up a cheap HDMI to VGA converter for ~$3 to $5 that would probably get the job done. I know when I’m not just SSHing into my PI I use a HDMI to DVI converter so it can use my second monitor.

Actually looking into that the Pi would need a more expensive converter (something with external power) since it has trouble sourcing power. I guess if you were just doing stuff like MAME where having free GPIO didn’t really matter this would be a solid solution :)

If the Pi can’t source enough power, I’m sure just cutting the lead open and feeding power to the right wire from somewhere else will do. Or find somewhere on the converter you can feed the power into. Or make an adaptor, a thru-socket with separate power input. Etc.

My Raspberry Pi (model B revision 1) works fine with an HDMI to VGA converter, without external power supply. The converter has power problems when used with a BeagleBoard (rev C3), but on a Pi, it works perfectly fine.

If you’re going to do this, don’t send it as tip to hackaday, and just keep it to yourself. Raspberry Pi(not “raspi”) has had enough advertisement of playing mame with lag and ear-scraping audio, so much for authenticity, and because of that, it is not interesting.

Nice blog you got there.

I will agree with you that retropie is done to death. However, the first person to use this as a dual-screen resin printer controller is going to have a $40k Kickstarter staring them in the face. Add in the printer, and that might be a million dollar kickstarter idea.

Better would be to abuse the interface to drive a laser scanner.

With separate R/G/B parallel buses you can allocate more bits to the XY DACs and leave one left over for laser control. Set the dot clock appropriately slow and set the blanking intervals to zero. Then write a utility to turn a vector path into framebuffer data, double-buffer the framebuffer memory, and swap frames on vsync. Finally crack open a beer and celebrate not needing an FPGA or other unnecessary control hardware.
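The "vector path to framebuffer data" step could look something like the sketch below. Everything about the bit layout is hypothetical: it assumes an 18-bit output word split into 9 bits of X, 8 bits of Y and 1 laser-enable bit, and it only builds the list of words. Getting them into the Pi's framebuffer and swapping on vsync is not shown.

```python
X_BITS = 9  # hypothetical allocation of an 18-bit pixel word
Y_BITS = 8
LASER_SHIFT = X_BITS + Y_BITS


def _quantise(value: float, bits: int) -> int:
    """Clamp a 0.0..1.0 coordinate and scale it to an unsigned DAC code."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * ((1 << bits) - 1))


def pack_sample(x: float, y: float, laser_on: bool) -> int:
    """Pack one scanner position into a word laid out as [laser | y | x]."""
    xi = _quantise(x, X_BITS)
    yi = _quantise(y, Y_BITS)
    return (int(laser_on) << LASER_SHIFT) | (yi << X_BITS) | xi


def path_to_words(points):
    """Convert an iterable of (x, y, laser_on) samples into output words."""
    return [pack_sample(x, y, on) for x, y, on in points]


if __name__ == "__main__":
    # A square traced with the laser on, then a blanked move back to the origin.
    square = [(0, 0, True), (1, 0, True), (1, 1, True), (0, 1, True), (0, 0, False)]
    print([f"{w:05x}" for w in path_to_words(square)])
```

Each word would occupy one "pixel" slot, so the dot clock sets the point rate of the scanner.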

Or use rotating mirrors and (somehow) phase lock the motor to the SYNC pulses for XY raster scanning, and use one of the color channels for laser control.

And the CRT screens were spherical, a feature that is only now available again with TFTs.

That’s the wrong spherical though, isn’t it?

Concave vs convex?

Don’t think anyone ever made a concave CRT.

Actually, the original Sony Watchman (FD-210) had a concave phosphor screen in the black & white CRT it used:

http://www.guenthoer.de/bilder/sony-flat.jpg

The CRT was unique, in that the phosphor screen was tilted almost flat, and on the interior of the tube (instead of being viewed from the outside). While maybe not as concave as you might imagine, it was concave to a small degree.

This probably works the same way as VGA on the ARM Cortex M3 DUE.

Just hope they use DMA for the pixel shoveling.

another stupid hardcoded binary BLOB from Broadcom

So it turns out raspee b+ is capable of driving LCD screens natively (only one $1 LVDS driver chip needed), but all we get is binary blob. Will we be able to change timings? All i can see is pin definition and blob in the github repo. If we are lucky it is sharing clock with HDMI and it could be possible to coerce it into driving some LCD, knowing raspee foundation it will be hardcoded to one resolution.

A fast parallel output bus could be used for so many hacks (just like an open MIPI driver), but Broadcom just can’t let go.

Seriously. I don’t understand this. WHY would a company want to keep documentation for the chips they sell private? Right now, I’m using DMA to control 4 stepper motors with approximately 500k writes per second. If the documentation for this VGA interface was public, I could quite likely repurpose the peripheral to get 10M+ writes per second and make a superior product to share with others, leading to increased sales for Broadcom.

Broadcom, I don’t understand you.

And now I want a PCB to drive $10 TFT panels.

Exactly what I was thinking. So many decent sized TFTs for cheap out there.